A few years ago, few would have imagined that generating a video could be as easy as typing a prompt. With the introduction of diffusion models, generating new data became far easier. So, if you want to learn how video can be generated from text, an image, or both, stay tuned!

Haoxin Chen, together with researchers from Tencent AI Lab and several universities in China, proposed two diffusion models for video generation, each with its own distinct characteristics. The first, VideoCrafter1: Text-to-Video (T2V), takes a text prompt as input and generates a video from it. The second, VideoCrafter1: Image-to-Video (I2V), takes an image, text, or both as input and generates a video.

Many earlier models fell short because of limited functionality. VideoCrafter1 aims to provide video generation models that deliver high-quality results. Its I2V model is the first open-source generic model that preserves the content of the reference image. Both models are pre-trained on a large dataset of images and videos, which helps them follow prompt instructions more closely and generate high-quality cinematic videos while preserving the structure and content of the input image.

With every new wave of trends, AI and computer vision contribute more to the growth and improvement of technology. Who would have thought of using text and images as inputs to generate video? Many models have been presented, but each one had a weak point. VideoCrafter1 was proposed to address the shortcomings of that previous work. So, without further ado, let’s unravel the new world of VideoCrafter1.

The Start of Video Generation

With text prompts already driving text and image generation, why not use them for video generation too? Many models stepped up to contribute; some were not open-sourced, and others, like Gen-2, Pika Labs, and Moonvalley, were inaccessible for further exploration. ModelScope had unsatisfactory results. Zeroscope V2 XL had better visuals, but noise and flickering were visible in the video.

Similar issues arose in I2V generation. The models from Pika Labs and Gen-2 had limited capability, and ModelScope could not preserve the original input content. Until then, it was hard to say which model was the better one.

Rise of VideoCrafter1

Earlier video generation tools struggled to deliver good results: they were not open-source, produced noisy video, or could not capture the original content of the reference input. VideoCrafter1 addresses these shortcomings by producing realistic, cinematic-quality videos at a resolution of 1024×576 with a 2-second duration. Its image-to-video model is the first open-source generic I2V model that preserves the content and structure of a reference image while generating the video.

The T2V model builds on Stable Diffusion (SD) 2.1 and is pre-trained on images and videos. Trained on LAION COCO 600M, WebVid-10M, and a 10M high-resolution video dataset, T2V can generate high-quality cinematic short videos. The I2V model takes both text and image as input; its additional ingredient is CLIP, which helps preserve the structure and content of the input image in the generated result. I2V is trained on LAION COCO 600M and WebVid-10M.

But how does VideoCrafter1 differ from the others? Its models were pre-trained extensively on 20 million videos and 600 million images, using advanced techniques to capture the content.

From academic research to content creation and animation, VideoCrafter1 has proven to be a valuable asset. Its versatility makes it easy to generate multimedia content, and storytellers can bring their ideas to life. The image-to-video transformation is especially helpful when the structure and content of a reference image must be preserved.

Get VideoCrafter1 and Connect with the Future Today!

It’s never too late to keep up with the latest trend. Upgrade your business today with this AI tool; the code is freely available on GitHub. But first, find out more about VideoCrafter1.

Working Methodology of VideoCrafter1

VideoCrafter1 produces high-quality videos using two fundamental components: (i) a video Variational Autoencoder (VAE) and (ii) a video latent diffusion model. The VAE reduces the dimension of the video data by compressing it into a compact representation known as the ‘video latent.’ The diffusion model then works on noisy versions of these video latents, refining the generated content over a series of denoising steps. The researchers use a 3D U-Net architecture as the denoiser: it takes a noisy latent as input and removes the noise to produce a clean output. The model further incorporates semantic control and motion speed control.
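To make the idea of a noisy video latent more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It assumes an SD-style VAE with 8x spatial downsampling and 4 latent channels, a 16-frame clip, and a standard DDPM-style linear noise schedule; all of these values and all function names are illustrative assumptions, not details taken from VideoCrafter1.

```python
import math
import random

def latent_shape(frames, height, width, channels=4, factor=8):
    """Latent shape under an ASSUMED SD-style VAE (8x downsampling, 4 channels)."""
    return (frames, channels, height // factor, width // factor)

def alpha_bar_schedule(num_steps=1000, beta_start=1e-4, beta_end=2e-2):
    """Cumulative product of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(z0, t, alpha_bars, rng):
    """Forward diffusion: z_t = sqrt(a_bar_t) * z0 + sqrt(1 - a_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(a) * z + math.sqrt(1.0 - a) * e for z, e in zip(z0, eps)]
    return zt, eps

def predict_clean(zt, eps_hat, t, alpha_bars):
    """Invert the forward step given a noise estimate (the denoiser's job)."""
    a = alpha_bars[t]
    return [(z - math.sqrt(1.0 - a) * e) / math.sqrt(a) for z, e in zip(zt, eps_hat)]

rng = random.Random(0)
print(latent_shape(frames=16, height=576, width=1024))  # (16, 4, 72, 128)

alpha_bars = alpha_bar_schedule()
z0 = [rng.gauss(0.0, 1.0) for _ in range(8)]  # a tiny flattened stand-in latent
zt, eps = add_noise(z0, t=500, alpha_bars=alpha_bars, rng=rng)
# With a perfect noise estimate the clean latent is recovered up to float error.
z0_hat = predict_clean(zt, eps, t=500, alpha_bars=alpha_bars)
```

In a real system the noise estimate would come from the trained 3D U-Net rather than from the true noise, and the latent would be a full tensor rather than a short list. The sketch only shows why working in the compressed latent space is cheaper and what "removing noise from a noisy latent" means numerically.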

Let’s explore the framework of VideoCrafter1. The model is trained on videos in the latent space, where motion speed control governs how frames are picked to form the video. The T2V model feeds text prompts to the spatial transformer via cross-attention, whereas the I2V model takes both image and text prompts as input.
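Cross-attention is the mechanism that lets latent tokens "look at" the text prompt. The following is a minimal sketch of scaled dot-product cross-attention in plain Python. It omits the learned query, key, and value projections and the multi-head structure that a real spatial transformer would have, and the toy vectors are made up for illustration; none of the names come from the VideoCrafter1 code.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Each query (latent token) attends over all keys (text tokens).

    Computes softmax(q . k / sqrt(d)) weights and returns the weighted
    sum of the value vectors for every query.
    """
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return out

# Toy example: two latent tokens, two text-token embeddings (hypothetical values).
latent_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[10.0, 0.0], [0.0, 10.0]]
attended = cross_attention(latent_tokens, text_tokens, text_tokens)
print(attended)
```

Each latent token ends up dominated by the text token it aligns with most strongly, which is how prompt content can steer what is generated at each position.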

Once the models were trained, many experiments were run to validate their capabilities. The T2V model was tested thoroughly with text prompts, and the I2V model went through a series of image and text prompt tests to assess content preservation. The experiments showed that the denoising techniques improved the overall quality of the results, while semantic and motion speed control helped preserve the overall details.

The choice of dataset matters because it is what teaches the models to handle different input types and deliver high-quality videos. VideoCrafter1 uses LAION COCO (600 million captioned images) and WebVid-10M (a large-scale dataset of short videos) to train both models. An additional dataset of 10 million high-resolution videos is used for the T2V model to improve its training. Combined, these datasets provide rich textual and visual training signal, enabling VideoCrafter1 to produce high-quality results across different prompts.

Performance Evaluation

VideoCrafter1’s performance was tested using EvalCrafter, a standard tool for evaluating video generation models. This tool compared VideoCrafter1 with Gen-2, Pika Labs, and ModelScope. The following table and figure show the results of the evaluation.

VideoCrafter1 was observed to achieve the highest visual quality among the compared models. To gain a better understanding of the results, let’s take a look at the qualitative evaluation and recheck the derived results.


Conclusion

The two open-source video diffusion models of VideoCrafter1 mark a notable step forward for AI video generation. Both models have shown remarkable capabilities in multimedia content creation. By addressing the shortcomings of previous frameworks, the T2V and I2V models focus on producing high-resolution videos while preserving the content and structure of reference images. This opens the door to many exciting possibilities.

If the duration of generated videos is extended, developers and researchers could benefit in areas such as virtual environments, storytelling, and high-fidelity simulation. It is also worth noting that the collaboration with ScaleCrafter has enhanced the visual realism and quality of generated content. With the continued integration of high-quality data, more sophisticated and nuanced video generation can be expected.

VideoCrafter1 models have the potential to transform different industry sectors, from entertainment and marketing to research and education. Content creators can use these models to create compelling videos, whereas researchers can use them to augment and simulate data. Their use could extend from virtual to augmented reality and beyond, enriching the interactive experience for users.

The future holds many opportunities for these models, and their applications are already showing promise in many sectors. Technology is for those who look forward to the evolving AI landscape and embrace the future.

Reference:

Similar Posts

-

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

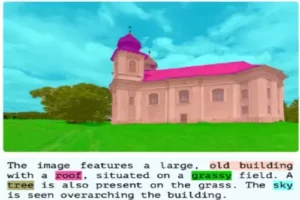

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

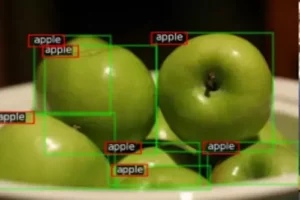

RegionSpot: Identify and validate the object from images

-

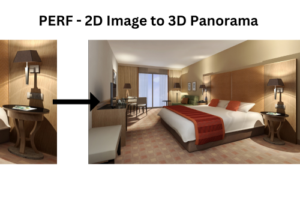

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas