Cutie removes long-standing barriers in Video Object Segmentation. This approach is not just about technology; it is about pushing the boundaries of what can be achieved. Consider a world where memory-based video segmentation is accurate and robust, making difficult scenarios easy. The model was developed by Ho Kei Cheng, Seoung Wug Oh, Brian Price, Joon-Young Lee, and Alexander Schwing from the University of Illinois Urbana-Champaign and Adobe Research.

Cutie is a video object segmentation system. Its goal is to improve segmentation precision through a novel approach known as object-level memory reading. Many modern methods rely on bottom-up, pixel-level memory reading, whereas Cutie adds a top-down, object-level approach. It includes a query-based object transformer that interacts with pixel-level information through a small number of object queries.

These object queries give a high-level summary of the target object, while high-resolution feature maps are maintained for precise segmentation. Cutie also uses foreground-background masked attention to separate the semantics of the target object from the background.

On the difficult MOSE dataset, this model beats XMem by 8.7 points in J & F score. It also outperforms DeAOT by 4.2 points while running three times faster. Cutie is a step toward more precise and efficient video object segmentation.

Prior Related Work and Its Limitations

Memory-based Video Object Segmentation (VOS) techniques store information from previous video frames to segment future frames. Some approaches fine-tune on the first frame at inference time, which can be time-consuming. Recurrent approaches are faster but lack the context needed to track objects through occlusion. More recent techniques use pixel-level feature matching and integration, although they can still suffer from low-level pixel-matching noise. Cutie adopts some of XMem’s memory reading techniques and adds object-level reading, which improves results in difficult scenarios.

Transformer-based approaches have also been developed for pixel matching with memory in VOS. These approaches compute attention between spatial feature maps, which can be costly, and sparse-attention variants may not perform as well. AOT (Associating Objects with Transformers) techniques process many objects in one pass using an identity bank, but they have limits in object identification and scalability. Concurrent techniques use vision transformers, but they need large-scale pretraining and have slower inference speeds. Cutie is designed to avoid expensive attention between spatial feature maps, allowing for real-time processing.

Early VOS algorithms that attempted object-level reasoning gathered object features by re-identification but performed poorly on common benchmarks. Object-level representations provided flexibility but fell short in segmentation accuracy. Cutie shares its motivation with ISVOS, but it learns object-level knowledge without requiring instance segmentation pretraining. It also allows bidirectional communication between pixel-level and object-level features. It is a one-stage approach that is faster than ISVOS, and its code is open source, encouraging reproducibility and further development.

Introduction to Cutie

Video Object Segmentation (VOS) is an important problem with applications in many areas. Current techniques track and segment objects based on labels given in the first video frame, a setting known as “semi-supervised” VOS. These approaches have been applied to robotics, video editing, and annotation cost reduction, and have even been combined with Segment Anything Models (SAMs) for universal video segmentation.

The authors add object-level memory reading, which retrieves the object from memory and places it back into the query frame. They use an object transformer inspired by recent advances in query-based object detection and segmentation. This transformer refines a feature map and encodes object-level information using a small set of object queries, maintaining a balance between high-level object representation and high-resolution features.

A sequence of attention layers, including the new foreground-background masked attention, enables bidirectional communication, providing both global feature interaction and a clean separation of foreground and background semantics. The authors also develop a compact object memory that summarizes target object properties and supports efficient, long-term object representations.


This approach, named Cutie, is considerably more robust in difficult settings, outperforming XMem by 8.7 points on the MOSE dataset. It remains competitive on standard datasets such as DAVIS and YouTubeVOS, with a good balance of accuracy and efficiency.

Equipped with an object transformer, Cutie performs object-level memory reading, which makes it more robust across VOS settings. The authors extend masked attention to cover both foreground and background, providing rich scene context and a clear contrast between the target object and distractors. To provide long-term object features, they build a compact object memory that serves as a target-specific, object-level representation during querying.

The Future Potential of Cutie

Cutie and VOS have interesting future directions. One is expanding the model’s capabilities to handle complicated real-world settings such as outdoor environments, changing lighting conditions, and a wide range of object types. Further improvements in speed and computational efficiency would make it better suited to real-time applications. Incorporating more advanced machine learning techniques could allow it to adapt and improve over time, reducing the need for lengthy human annotation. Applications beyond video editing and robotics, such as self-driving cars and augmented reality, may open up fresh possibilities for this technology.

Research Paper and Code Accessibility

The research paper is available on arXiv, and the code is open source on GitHub. Anyone can access and explore both resources.

Potential Fields of Application for Cutie

Cutie offers a wide range of possible applications. Its ability to accurately segment and track objects in videos can speed up post-production, allowing editors to apply special effects easily and produce visually attractive videos. This can reduce the time and effort required for video editing, making it a valuable tool for the media and entertainment industries.

Cutie’s performance in difficult conditions with major occlusions and distractors also has implications for robotics and autonomous systems in general. It can improve robot vision and interaction, helping robots deal with dynamic situations with a high level of object understanding. Potential applications include safer and more efficient operations across many industries, from autonomous vehicles that can recognize and respond to obstacles to improved surveillance systems.

Architecture and Working of Cutie

Cutie is a VOS model that offers an alternative viewpoint on video processing. It operates in the semi-supervised VOS setting, where it tracks and segments objects given their first-frame annotation. This is useful in robotics, video editing, low-cost data annotation, and more.

The model takes as input the first-frame segmentation of the target objects and goes on to segment the subsequent frames. Information from previously segmented frames is used to build a high-resolution pixel memory and a high-level object memory. Cutie first retrieves an initial pixel readout, which can be noisy, and then enriches this readout with object-level semantics using the object transformer.


From previously segmented (memory) frames, the model stores a pixel memory and an object memory. For each query frame, the pixel memory is read out as a pixel readout, which interacts bidirectionally with the object queries and the object memory inside the object transformer. The object transformer adds object-level semantics to the pixel features and produces the final object readout, which is decoded into the output mask.
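To make the pixel readout step more concrete, here is a minimal NumPy sketch of attention-based memory reading. It is an illustration under simplifying assumptions, not the authors' implementation: the function name `pixel_readout`, the scaled dot-product similarity, and all array shapes are hypothetical choices made for this example.

```python
import numpy as np

def pixel_readout(query_key, memory_key, memory_value):
    """Read values from pixel memory for every query pixel.

    query_key:    (num_query_pixels, C)  keys of the query frame
    memory_key:   (num_memory_pixels, C) keys stored from past frames
    memory_value: (num_memory_pixels, D) values stored from past frames
    Returns an array of shape (num_query_pixels, D).
    """
    query_key = np.asarray(query_key, dtype=float)
    memory_key = np.asarray(memory_key, dtype=float)
    memory_value = np.asarray(memory_value, dtype=float)

    # Assumption: scaled dot-product similarity between query and memory keys.
    scores = query_key @ memory_key.T / np.sqrt(query_key.shape[1])

    # Numerically stable softmax over the memory dimension.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)

    # Each query pixel receives a weighted average of memory values.
    return weights @ memory_value

rng = np.random.default_rng(0)
query_key = rng.normal(size=(6, 4))      # 6 query pixels, 4 key channels
memory_key = rng.normal(size=(10, 4))    # 10 memory pixels
memory_value = rng.normal(size=(10, 8))  # 8 value channels
readout = pixel_readout(query_key, memory_key, memory_value)
print(readout.shape)
```

Because every query pixel is matched independently against low-level memory features, a readout of this kind can pick up noise from distractors, which is the weakness the object transformer is meant to address.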

The object transformer blends object queries with the initial readout to improve accuracy. Cutie attends to both the foreground and the background, allowing it to deal with complex scenes that contain distractors. The transformer is designed so that object queries and pixel features communicate efficiently, avoiding costly attention between spatial feature maps.

The object transformer is responsible for refining the initial readout. It takes the readout and the object memory as inputs. Multiple transformer blocks repeatedly update the object queries and the readout, so that the final readout is better suited for accurate segmentation.
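The iterative update can be pictured with the rough sketch below. It only shows the bidirectional flow between object queries and the pixel readout. The learned projections, feed-forward layers, normalization, and masked attention of a real transformer block are omitted, and the function names, the default of three blocks, and the choice to add the object memory to the queries are all assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(queries, keys, values):
    """Plain cross-attention without learned projections (simplification)."""
    scores = queries @ keys.T / np.sqrt(queries.shape[1])
    return softmax(scores, axis=1) @ values

def object_transformer(readout, object_queries, object_memory, num_blocks=3):
    """readout: (P, C) pixel features; object_queries, object_memory: (Q, C)."""
    # Assumption: object memory conditions the queries by simple addition.
    queries = object_queries + object_memory
    pixels = readout
    for _ in range(num_blocks):
        # Queries gather object-level information from the pixels...
        queries = queries + cross_attend(queries, pixels, pixels)
        # ...and pixels are refined with object-level semantics in return.
        pixels = pixels + cross_attend(pixels, queries, queries)
    return pixels, queries

rng = np.random.default_rng(0)
readout = rng.normal(size=(12, 4))        # 12 pixels, 4 channels
object_queries = rng.normal(size=(2, 4))  # a small number of object queries
object_memory = rng.normal(size=(2, 4))
final_readout, final_queries = object_transformer(
    readout, object_queries, object_memory
)
print(final_readout.shape, final_queries.shape)
```

Note that attention here is only ever computed between a small set of queries and the pixels, never between two full spatial feature maps, which is what keeps the cost low.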

The object memory holds a summary representation of the target object. It supplies target-specific features to the object transformer.

Cutie employs foreground-background masked attention to help distinguish the semantics of the foreground from the background. This is useful in the presence of occlusions and distractors. Cutie’s architecture emphasizes efficiency and reliability, and it sets itself apart by providing a more detailed, object-level understanding of the video content.
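A minimal sketch of foreground-background masked attention follows. It assumes that the first half of the object queries may only attend to foreground pixels and the second half only to background pixels. That split, the helper names, and the fallback for empty masks are illustrative assumptions rather than details confirmed by this article.

```python
import numpy as np

def fg_bg_allowed(fg_mask, num_queries):
    """Build a (num_queries, num_pixels) boolean mask of allowed attention."""
    fg_mask = np.asarray(fg_mask, dtype=bool).ravel()
    half = num_queries // 2
    allowed = np.empty((num_queries, fg_mask.size), dtype=bool)
    allowed[:half] = fg_mask    # first half: foreground only
    allowed[half:] = ~fg_mask   # second half: background only
    return allowed

def masked_attention(queries, pixels, allowed):
    """Cross-attention from queries to pixels, restricted by `allowed`."""
    scores = queries @ pixels.T / np.sqrt(queries.shape[1])
    # If a query has no allowed pixel at all, let it attend everywhere.
    has_any = allowed.any(axis=1, keepdims=True)
    allowed = np.where(has_any, allowed, True)
    scores = np.where(allowed, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ pixels, weights

rng = np.random.default_rng(0)
fg_mask = np.array([True, True, False, False, False, True])
queries = rng.normal(size=(4, 3))  # 4 object queries, 3 channels
pixels = rng.normal(size=(6, 3))   # 6 pixels
allowed = fg_bg_allowed(fg_mask, num_queries=4)
updated, weights = masked_attention(queries, pixels, allowed)
print(np.round(weights, 2))
```

In the printed weights, the first two rows are zero on background pixels and the last two rows are zero on foreground pixels, so each group of queries summarizes only one side of the scene.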

Experimental Results

The authors use standard metrics: the Jaccard index (J), contour accuracy (F), and their average, J & F. On YouTubeVOS, they compute J and F separately for the “seen” and “unseen” categories, then average them. On the BURST dataset, they measure Higher Order Tracking Accuracy (HOTA) separately for common and rare object classes. Inputs are resized so that the shorter edge is at most 480 pixels, and predictions are resized back to the original resolution.
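As a small worked example, the sketch below computes the Jaccard index (intersection over union) for a pair of binary masks, and the target size when the shorter edge is capped at 480 pixels. The function names are hypothetical, and returning 1.0 when both masks are empty is one common convention that is assumed here. Contour accuracy (F) requires boundary matching and is not shown.

```python
import numpy as np

def jaccard_index(pred, gt):
    """Intersection over union of two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return float(np.logical_and(pred, gt).sum() / union)

def resized_shape(height, width, max_short_edge=480):
    """Scale (height, width) down so the shorter edge is at most max_short_edge."""
    short = min(height, width)
    if short <= max_short_edge:
        return height, width
    scale = max_short_edge / short
    return round(height * scale), round(width * scale)

pred = np.array([[1, 1], [0, 0]])
gt = np.array([[1, 0], [0, 0]])
print(jaccard_index(pred, gt))    # 0.5
print(resized_shape(1080, 1920))  # (480, 853)
print(resized_shape(360, 640))    # (360, 640)
```

Given a J score and an F score for a sequence, the reported J & F value is simply their mean.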

Training begins with pretraining on static images: following prior work, sequences of three frames are generated from still pictures with synthetic deformation. The main training is on the video datasets DAVIS and YouTubeVOS, with sequences of eight frames, again following prior work. The model can optionally be trained on MOSE together with DAVIS and YouTubeVOS. A single model is used across all settings. The AdamW optimizer is used for optimization. The losses are computed with point supervision in order to reduce memory consumption. Memory frames are updated at regular intervals, and the pixel memory uses a FIFO (first in, first out) strategy together with top-k filtering.
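The FIFO strategy and top-k filtering can be sketched as follows. This is a simplified illustration: the class and function names, the memory size, and the value of k are hypothetical, and the sketch treats all memory frames alike rather than handling any frame specially.

```python
from collections import deque

import numpy as np

class FifoPixelMemory:
    """Keeps the keys and values of the most recent `max_frames` memory frames."""

    def __init__(self, max_frames=5):
        # A deque with maxlen silently drops the oldest frame when full.
        self.frames = deque(maxlen=max_frames)

    def add(self, key, value):
        self.frames.append((np.asarray(key, float), np.asarray(value, float)))

    def stacked(self):
        keys = np.concatenate([k for k, _ in self.frames], axis=0)
        values = np.concatenate([v for _, v in self.frames], axis=0)
        return keys, values

def top_k_softmax(scores, k):
    """Softmax over only the k highest scores in each row; the rest get weight 0."""
    scores = np.asarray(scores, dtype=float)
    k = min(k, scores.shape[1])
    idx = np.argpartition(scores, -k, axis=1)[:, -k:]
    top = np.take_along_axis(scores, idx, axis=1)
    top = np.exp(top - top.max(axis=1, keepdims=True))
    top = top / top.sum(axis=1, keepdims=True)
    weights = np.zeros_like(scores)
    np.put_along_axis(weights, idx, top, axis=1)
    return weights

memory = FifoPixelMemory(max_frames=2)
for frame_id in range(3):
    # Each frame contributes 4 memory pixels with 3 key and 2 value channels.
    memory.add(np.full((4, 3), frame_id), np.full((4, 2), frame_id))
keys, values = memory.stacked()
print(keys.shape, values[:, 0])  # only frames 1 and 2 remain

weights = top_k_softmax(np.array([[1.0, 3.0, 2.0, 0.0]]), k=2)
print(np.round(weights, 3))
```

Top-k filtering keeps the readout focused on the strongest matches, while the FIFO buffer bounds memory growth on long videos.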

Main Findings: They compared Cutie to many approaches on a variety of benchmarks, including DAVIS 2017, YouTubeVOS-2019, MOSE, and BURST. To ensure fair comparisons, algorithms were tested at their full frame rates. On the difficult MOSE dataset, Cutie outperforms other approaches while remaining efficient.

Ablations: The researchers conducted ablation tests to investigate several design choices. They varied hyperparameters such as the number of object transformer blocks, the number of object queries, the memory frame interval, and the maximum number of memory frames. They found that the object transformer blocks suppress distracting noise and produce cleaner object masks. Cutie was also shown to be largely unaffected by the number of objects.

Bottom-Up vs. Top-Down Features: They compared a bottom-up-only approach without the object transformer, a top-down-only approach without the pixel memory, and their full approach with both. The combined approach performs best.

Positional Embeddings: They investigated the effect of adding positional embeddings to the object transformer. Using positional embeddings for both object queries and pixel features improves results.

Masked Attention and Object Memory: The researchers experimented with different masked attention configurations, both with and without object memory. Foreground-background masked attention was more effective than foreground-only masked attention. Object memory further improved results while adding little complexity.

Cutie performed strongly on many benchmarks, demonstrating its capability in Video Object Segmentation. The ablation studies helped the authors refine the design by tuning hyperparameters, improving the attention mechanisms, and making effective use of positional embeddings.

Conclusion

Cutie is a network with object-level memory reading for robust video object segmentation in difficult settings. It combines top-down and bottom-up features effectively, delivering state-of-the-art results on various benchmarks, particularly the difficult MOSE dataset. The authors hope that, by integrating with segment-anything models, this work can draw greater attention to object-centric video segmentation and enable universal video segmentation approaches.

References

https://arxiv.org/pdf/2310.12982v1.pdf

https://github.com/hkchengrex/Cutie

Similar Posts

-

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

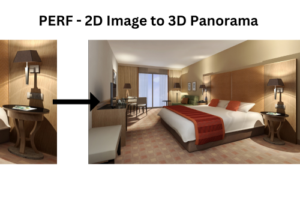

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas