Unlocking the Human Mind’s Secrets: Consider a world in which your thoughts can be understood and your brain’s hidden language understood. They have taken a major step forward in neurology by making this a reality. Join us on a journey to discover the power of the human mind through simple non-surgical brain recordings. Alexandre Défossez , Charlotte Caucheteux, Jérémy Rapin, Ori Kabeli & Jean-Rémi King from Meta AI, and Inria Saclay, PSL University are involved in this amazing research.

Decoding Speech from brain activity is a major goal in medicine and neuroscience. There have recently been some interesting advancements in devices. They used deep-learning algorithms on brain records to begin working out basic language things like letters, words, and sound patterns. However, making this work with non-surgical brain recording and genuine decoding speech remains extremely difficult.

Using non-surgical brain recordings from a group of healthy adults, they constructed a model that learns from self-taught examples of how people hear speech. They used data from four public datasets and 175 participants to test this model. The participants listened to brief stories and sentences while researchers recorded their brain activity with magneto-encephalography and electro-encephalography equipment.

The results they achieved are quite impressive. From just 3 seconds of brain activity, their algorithm can identify the part of speech that someone is hearing. That is, it can recognize words and sentences that it didn’t see before in its training.

Prior related works and limitations

Previous methods were frequently performed with a limited vocabulary, separating just a small selection of words or sub-lexical components such as the sounds or syllables. As a result, they were limited in their usefulness to a greater range of language material.

Earlier models often lacked zero-shot decoding, which meant they couldn’t recognize words or sentences that weren’t in their training data. This limitation stands in opposition to the existing model’s ability to handle unseen stuff.

Prior models’ performance was frequently low, especially with harmless recordings of brain activity. This made high accuracy in decoding speech or language characteristics difficult, limiting their everyday use.

Many earlier studies did not include open datasets, making it impossible for the scientific community to evaluate and build on their findings. This lack of openness limited the field’s advancement. A number of free datasets failed to make up for the range of experimental methodologies utilized in previous models. This made drawing direct performance comparisons across research difficult.

Because of possible variations in the underlying brain mechanisms involved, comparing the decoding speech with the produced speech should be done with care.

Introduction about Decoding speech from non-surgical brain recordings

Many people lose their capacity to speak each year as a result of brain injuries or disorders. Scientists have been developing a Brain-Computer Interface (BCI) to help these individuals communicate. Previously, BCIs required surgery to implant electrodes in the brain, and some progress was made, such as a patient who could use a BCI to write at a rate of roughly 15-18 words per minute.

These surgical treatments, however, have drawbacks. They needed brain surgery, and it was difficult to maintain the equipment running properly over time. As a result, researchers began investigating non-surgical brain recording methods, such as reading brain activity from outside the head using sensors such as magneto- and electro-encephalography MEG and EEG.

These gadgets are safer, but they generate noisy signals. Scientists are still working on ways to use these signals to let individuals communicate without requiring surgery. They’ve developed a new way that could be a significant step ahead, using one design trained on a large number of people as well as creative approaches to understand how the human brain processes speech.

So they present a convolutional neural network built on a “subject layer” and trained with an opposite objective to predict the representations of the audio waveform learned by wav2vec 2.0 previously trained on 56k hours of speech.

The researcher used M/EEG datasets to validate their approach. These datasets include the brain activities of 175 participants who listen to a sentence of a short story with a sample of 3 seconds. This model matches the audio segment and its accuracy is up to 72.5% which is top-10 accuracy for MEG and up to 19.1% top-10 accuracy for EEG.

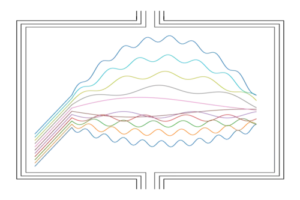

The red color indicates that the M/EEG sensors are related to a higher spatial attention weight on average. The topographies, with the exception of the Brennan dataset, emphasize channels that are generally engaged during auditory stimulation.

Future scope of decoding speech with non-surgical brain recording

The study’s findings pave the way for fascinating future possibilities in the fields of brain-computer interfacing and decoding speech. We could expect the development of stronger and more efficient systems for understanding speech from brain activity as non-surgical brain recording techniques and deep learning architectures develop.

This technology has a lot of potential for helping those with communication difficulties, like those who have lost their ability to speak due to brain traumas or neurodegenerative diseases. Furthermore, further research in this subject may result in enhanced models that can decode not only voice but also higher-level linguistic elements, allowing for more natural and complete communication via brain-computer interfaces.

Research paper and code availability

The detailed research material on this research is available on Arxiv. The whole source code for processing the datasets, training, and assessing the models and approach given here can be found on GitHub. The data and code is available anytime and free for anyone’s use.

Use of this model in different fields

The study’s findings have broad potential use in a variety of fields. The capacity of decoding speech from non-surgical brain recordings has the potential to transform communication for those with speech-related problems, such as those affected by strokes or neurological conditions. This technique may also be useful for psychological studies, allowing for a better understanding of how the brain processes and represents language.

It may also have consequences in assistive technology, allowing for the creation of more advanced and user-friendly brain-computer interfaces. This discovery may have applications not only in healthcare but also in human-computer interaction, education, and other fields in the future, altering the way we communicate and interact with technology.

Is MEG really much better than EEG?

They train their model on a subset of the data that simplifies recording time, the number of sensors, and the number of participants to see if these performances are affected by the overall recording duration and/or the number of recording sensors. To prevent over-limiting the analysis dataset, they removed the Brennan and Hale31 dataset. As a result, they match all datasets to the dataset with the fewest number of channels from the three remaining datasets. They keep only 19 participants per dataset, keeping the smallest number for all three datasets.

For the greatest reliability, all test phases remain in place. Overall, sub-sampling reduces decoding performance, but MEG decoding remains significantly superior to EEG. Although these findings should be verified by presenting identical images to participants recorded with both EEG and MEG, they indicate that the variation in decoding performance reported between investigations is mostly due to device type.

Brain module

They employ a deep neural network in the brain module, which takes raw M/EEG time series data and one-hot encoding that represents the subject (s). The latent brain representation is then generated at the same sample rate as the input data. This architecture includes a spatial attention layer that takes sensor positions into account, a subject-specific 1×1 inversion to account for inter-subject variation, and a stack of neural blocks.

The spatial attention layer divides the data into 270 channels depending on sensor locations and weights the input sensors using learning functions. To identify the relevance of each sensor, they use a softmax attention technique. A spatial dropout is also used to introduce some unpredictability.

They learn a matrix for each subject and apply it after the spatial attention layer to capture inter-subject differences. This step helps to adjust for subject differences. Following that, they employ a five-block stack, each of which contains three convolutional layers. Residual skip connections, batch normalization, and GELU activation are present in the first two layers.

Each block’s third layer employs GLU activation to limit the number of channels. To increase their receptive field, these convolutions dilate at varying speeds. Finally, to match the dimensions of speech representations, they have used two 1×1 convolutions. They also add a 150ms delay to the input brain signal to align it with the appropriate brain responses.

Speech module

The Mel spectrogram, which is inspired by the cochlea, may not fully reflect the different brain representations of decoding speech. To fix this, they used latent speech representations instead of Mel spectrograms. They investigated two methods: “Deep Mel” and “wav2vec 2.0.” The Deep Mel module used a deep convolutional architecture to learn both voice and M/EEG representations at the same time, however, it was less effective than the pre-trained wav2vec 2.0 technique.

Wav2vec 2.0, which has only been trained on audio data, alters raw waveforms with convolutional and transformer blocks to anticipate masked areas of its own latent representations. This pre-trained model has shown a lot of promise in terms of encoding various linguistic properties and mapping them onto brain activations. They used the wav2vec2-large-xlsr-53 model, which had been trained on 56k hours of data.

Evaluation of model

They used multiple models to assess the quality of Mel spectrogram reconstructions. There were two kinds of failures considered: regression loss and CLIP loss, each of which involved a weighted average across test sections based on CLIP loss chances. Their analysis concentrated on the segment- and word-level judgments. The segment-level review determined if the genuine segment was one of the top 10 most likely predictions. They chose 3-second segments for each word in the test set for word-level evaluation, computed the distributions of probability, and determined top-1 and top-10 word-level accuracy.

In addition, they performed a prediction analysis to determine the accuracy of decoder outputs based on specified attributes. To compare models and datasets, they utilized statistical tests such as the Wilcoxon test and the Mann-Whitney test, which took into account participant differences. These analyses helped them understand the decoder’s performance and its link to various variables and datasets.

Conclusion

Overall, their algorithm correctly detects the matching decoding speech segment from 3 seconds of non-surgical brain recordings with up to 41% accuracy out of over 1,000 possible possibilities. This performance, which can approach 80% in the best individuals, enables the decoding speech of perceived words and sentences not included in the training set.

Two significant problems must be addressed in order to decoding speech perception from M/EEG. To begin with, these signals can be quite loud, making it impossible to extract usable information. Second, it is unknown which aspects of speech are actually represented in the brain. This model goes through how our “brain” and “speech” modules approach these two challenges in the context of decoding speech comprehension.

Finally, they compare the performance of this model to earlier research and suggest the steps that must be completed before they have a chance to use this approach for decoding speech in clinical contexts.

References

https://github.com/facebookresearch/brainmagick

https://browse.arxiv.org/pdf/2208.12266.pdf

Similar Posts

-

Qwen-Audio: A unified Multi-Tasking Audio-Language Model

-

Mustango: Text-to-Music Generation Model

-

LLVC: Voice transition with Minimal response time in synchronized manners on Hardware

-

University of Stuttgart Present IMS Toucan Improved System in Blizzard Challenge 2023

-

SALMONN: ByteDance Presents a Model for Generic Hearing Ability for LLMs

-

Advances in Mind-Reading Powerful Technology: Decoding Speech from non-surgical brain recordings.