Consider a breakthrough that brings words to life, transforming text generation into an art form. They introduce a gentle absorbing condition, a bridge between the familiar and the unknown, in a world where words dance and stories come alive. They’ve accelerated the heart of text generation with it. Shansan Gong, Mukai Li, Jiangtao Feng, Zhiyong Wu, and Lingpeng Kong from The University of Hong Kong are involved in this research study.

Diffusion models are a common choice for producing high-quality text sequences. However, current approaches frequently model regular text as a continuous diffusion space, making training computationally intensive and resulting in delayed text production.

They introduce the concept of a “soft absorbing state,” in DiffuSeq-v2 which aids the diffusion model in learning to generate normal text by starting with a Gaussian space. This increases the model’s capacity to recognize specific patterns and details in text.

When it comes to text generation, they employ complex approaches such as ODE solvers in the continuous space. This considerably speeds up the text production process. Experiments show that their strategy speeds up training by around four times and text production by about 800 times while retaining similar text quality. This makes their strategy considerably more practical and applicable to real-world problems.

Prior models limitations

The issue of slow training and text generation speed in diffusion models is one noticeable restriction in the context of the presented information. While these models have demonstrated promise in a variety of text generation tasks, such as machine translation and summarization, they have historically suffered from sluggish convergence during the training phase and reduced generation speed, especially when Minimum Bayes Risk (MBR) decoding is used to improve text quality. This restriction can be a considerable disadvantage, particularly in real-world applications where efficiency is critical.

Furthermore, including complicated methodologies and external tools, may increase dependencies and complexities, thus making these models less user-friendly and replicable. Furthermore, the hyperparameter sensitivity, particularly in defining the ratio of absorbing states, can be difficult to optimize for certain workloads. It is critical to overcome these constraints in order to make diffusion models easier to use and understand for a broader range of text-generating applications.

Introduction to DiffuSeq-v2

They have proposed an accelerated version of DiffuSeq that speeds up both the training and text generation processes to bridge the gap between diffusion models and popular autoregressive models. To help the model learn faster, They implemented GPU acceleration techniques like FP16 and improved the training scheme. Gaussian space is a probability space of mean zero, real-valued Gaussian random variables.

They trained the diffusion model DiffuSeq-v2 to generate high-quality text in a single step without the need for MBR decoding, which saves time. In addition, They have used a cutting-edge ODE sampler called DPM-solver++ which will help in the fast generation of text and this also helps in reducing sampling steps to reduce the gap between continuous and discrete areas. ODE is an ordinary differential equation that is a differential equation (DE) dependent on only a single independent variable.

Future scope of DiffuSeq-v2

The future of DiffuSeq-v2 in text production shows enormous promise for more efficient, user-friendly ways. Researchers might concentrate on developing new training procedures to accelerate resolution and investigate alternatives depending on highly computational decoding strategies.

DiffuSeq-v2 in the creation of accessible tools and frameworks, as well as bridging the gap between continuous and discrete places, remains an attractive avenue. Extensive experimental validation and benchmarking will be required to assess developments and establish diffusion models as significant assets in a variety of language processing applications.

Research data and code availability

The research data and the research paper on DiffuSeq-v2 are available on Arxiv. The implementation code is also given on their GitHub. For anybody who is interested in this model and wants to know the implementation of this model, data is freely accessible to the public. This whole data is open-source.

Potential application DiffuSeq-v2

Advances in diffusion models for text generation, particularly in terms of faster training and production speeds, show great promise for a wide range of practical applications. One significant application is in real-time language translation. DiffuSeq-v2 might possibly provide near-instantaneous translation of spoken or written content across several languages by taking advantage of speedier text creation capabilities, facilitating more efficient and accessible communication on a global basis.

Another possible application is in the creation of content for web platforms. DiffuSeq-v2 can be used to quickly develop compelling and personalized content for websites, social media platforms, and e-commerce platforms with increased text-generating efficiency. This might improve user experiences, automate content creation processes, and expedite marketing efforts, helping businesses and online communities in the long run.

Continuous Diffusion Models

They presented Continuous Diffusion Models as strategies for manipulating and generating data in a smooth and gradual manner. Data is changed step by step in these models. To begin, the original data point is gradually transformed into random noise that follows a certain pattern known as a Gaussian distribution.

Each step of this transformation is controlled by a value that controls how much the data is altered. Following this transformation, the model attempts to reverse the process and return the data to its original form by learning from the changes that occurred during the forward process. This enables the production of equivalent new data to the original. When working with text, approaches such as Diffusion-LM and DiffuSeq apply particular algorithms to ensure accuracy and reduce errors.

Discrete Diffusion model

They work with discrete diffusion probabilistic models that use individual pieces of information known as “xt,” which are similar to labels for words or tokens in a vocabulary. Binary codes comprising 0s and 1s are used to represent these labels. Each label indicates which word or token is in use at any given time. Multinomial diffusion, for example, spreads randomization equally across all words or tokens in the vocabulary. D3PM approaches things differently by employing a unique strategy involving a transition matrix. It eventually transforms into something resembling a “point mass,” and it is highly likely to be in a specific condition.

Experimental Setup and Results

They attempted to answer two major research topics in their work. The first question was whether adding a soft absorption state to continuous diffusion models would improve text generation quality and speed up training convergence—the second question was about the effect of the DPM ODE solver on sampling speed and overall performance.

They selected the QQP dataset for paraphrasing sentences in their tests because it is lightweight and commonly utilized in Seq2Seq text diffusion models. They compared their method against baseline models such as DiffuSeq, BG-DiffuSeq, multinomial diffusion, D3PM-absorbing, and a reparameterized version of these models. They used DiffuSeq’s training, sampling, and assessment techniques, running their experiment on NVIDIA A100 80G GPUs.

Their key findings demonstrated that, even in the original sample version, their technique outperformed DiffuSeq without needing MBR decoding. This saved a lot of time when it came to writing high-quality texts. Even the sped-up version of their approach outperformed DiffuSeq and BG-DiffuSeq by a wide margin. In addition, they discovered advantages in terms of generation speed when compared to discrete diffusion models.

In terms of training speed, they used FP16 GPU acceleration to reduce total training time and accelerate training convergence in DiffuSeq-v2. Sampling performance was also greatly increased, particularly with the addition of DPM-solver++, making it roughly 800 times quicker than DiffuSeq while preserving text quality. Their ablation analysis confirmed the significance of the soft absorption condition and optimum hyperparameter selection for maximum performance.

Conclusion

They describe a simple yet effective training approach for joint discrete and continuous text diffusion models DiffuSeq-v2 that also resets some tokens into the soft absorbing state. The discrete noise bridges the training and sample steps, saving time in both, and the inserted DPM-solver++ speeds up the sampling even further. The diffuSeq-v2 method is fundamental to diffusion text generation and is parallel to many other techniques, such as self-conditioning and selecting tokens with varying importance which can also be used in diffuSeq-v2 to improve generation quality.

Reference

https://arxiv.org/pdf/2310.05793v1.pdf

https://github.com/Shark-NLP/DiffuSeq/tree/main/img

Similar Posts

-

LayoutPrompter: Automatic-layout generation using with Large Language Models

-

LOOGLE: Long-Context perceive extended phrases

-

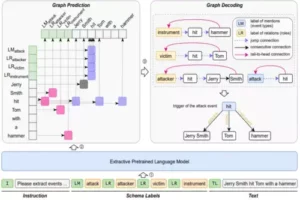

Mirror: An Ubiquitous Model for Information Extraction related Tasks

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

Microsoft Interns Revolutionize AI: LEMA the Error-Driven Learning

-

INT8 quantization: Large Language Models (LLMs) effects on CPU inference