Brace yourself for DreamCraft3D! It transforms a 2D image into a unified, sharp, high-quality and fine-grained 3D object, producing 3D objects that come close to reality.

AI researchers Jingxiang Sun, Bo Zhang, Ruizhi Shao and Lizhen Wang of Tsinghua University, together with several independent researchers, developed this 3D content generation model, which they named DreamCraft3D.

The model could open a new dimension for computer vision: the researchers showed that it takes a 2D image as a reference and, through a hierarchical process, turns it into a 3D object. Its main focus is geometry modelling and texture composition, and the central problem it tackles is the lack of consistency that remained in existing models. It builds on a diffusion model as its base to address this consistency problem.

Objects created with DreamCraft3D come very close to reality, and the generated outputs are highly accurate. They are consistent, precise and clear without compromising texture or colour.

Related Studies and Background of 3D Content Generation

In computer vision, 3D generative modelling has been one of the most heavily researched areas, and many models have been presented with captivating demonstrations. Generative adversarial networks (GANs), autoregressive models and diffusion models have all shown significant advances in converting text or images to 3D content. Even so, these studies show that training on 3D data is much more complex than training on 2D data.

Innovative approaches to 3D-aware image generation offer 3D consistency to some extent, but because they rely on pre-trained 2D models, they produce unrealistic images under large viewpoint changes.

Pretrained CLIP models have been used to increase the similarity between rendered images and prompt texts. Other work has improved texture, refined the distillation loss, lifted 2D images to 3D and enforced shape symmetry, but creating a consistent 3D object remained a challenge for researchers.

What is DreamCraft3D?

Remarkable results from 2D generative models have made it easy to generate visual content. 3D generative models have also produced impressive outcomes in a few categories, but the field still faces crucial challenges: generating generalised 3D objects remains difficult because 3D datasets are limited. Meanwhile, researchers have trained text-to-image generative models with impressive outcomes.

DreamFusion showed that pretrained text-to-image models can be used for 3D generation: it leverages a 2D generative model to produce high-quality 3D results. To reduce ambiguity and lack of detail, later work adopted step-by-step (multi-stage) optimization strategies, which improved photorealism. However, guidance from 2D generative models can still produce outputs that are inconsistent across views.

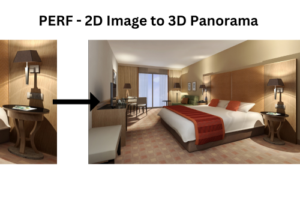

The image above shows that, by lifting 2D images to 3D, DreamCraft3D achieves 3D generation with rich details and holistic 3D consistency.

DreamCraft3D produces complex 3D objects without compromising 3D consistency. Its approach is hierarchical (pyramidal), creating a 3D object in the following steps:

- Generate a high-quality 2D reference image from a text prompt.

- Lift it into 3D through successive stages of geometry sculpting and texture enhancement.

Following these steps produces high-quality 3D objects.
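The two-step hierarchy can be written down as an ordered stage list. The following Python sketch is purely illustrative: the `Stage` dataclass, the stage names and the guidance labels are hypothetical and do not come from the DreamCraft3D code base; they only restate the order described in this article, with geometry sculpting split into its implicit-surface and mesh phases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step of a hypothetical DreamCraft3D-style pipeline."""
    name: str            # hypothetical stage label
    representation: str  # what the stage produces or optimizes
    guidance: str        # what supervises the stage


# Order follows the article: 2D reference first, then geometry, then texture.
PIPELINE = [
    Stage("reference_image", "2D image", "text-to-image diffusion model"),
    Stage("geometry_implicit", "implicit surface", "reference image + view-conditioned diffusion"),
    Stage("geometry_mesh", "mesh", "reference image + view-conditioned diffusion"),
    Stage("texture_boosting", "mesh texture", "diffusion model fine-tuned on multi-view renderings"),
]


def describe(pipeline):
    """Return a numbered, human-readable summary of the stages in order."""
    return [f"{i}. {s.name}: {s.representation} <- {s.guidance}"
            for i, s in enumerate(pipeline, start=1)]


for line in describe(PIPELINE):
    print(line)
```

Keeping the stages as data rather than code makes the ordering explicit: geometry is fixed before texture is boosted, which matches the coarse-to-fine spirit of the method.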

Geometry sculpting aims to produce consistent 3D geometry from the 2D reference image. Several strategies are introduced to improve geometric consistency. During optimization, the geometry is first represented as an implicit surface and then converted to a mesh representation for further refinement. These techniques help produce sharp, clear and consistent geometry.
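A common way to anchor geometry to a single reference image is to combine reconstruction losses at the reference viewpoint with a guidance term from a diffusion prior at other viewpoints. The sketch below assumes that structure; the function names, the RGB and mask terms, and the weights `w_rgb`, `w_mask` and `w_guide` are hypothetical and chosen only for illustration, not taken from the paper. Images are flattened to lists of floats to keep the example dependency-free.

```python
def mse(a, b):
    """Mean squared error between two equal-length lists of floats."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def sculpting_loss(render_rgb, ref_rgb, render_mask, ref_mask,
                   novel_view_guidance, w_rgb=1.0, w_mask=1.0, w_guide=0.1):
    """Hypothetical geometry-sculpting objective.

    The reference-view terms tie the 3D model to the 2D reference image.
    novel_view_guidance is a scalar assumed to come from a diffusion prior
    evaluated at other viewpoints. All weights are illustrative.
    """
    reference_term = (w_rgb * mse(render_rgb, ref_rgb)
                      + w_mask * mse(render_mask, ref_mask))
    return reference_term + w_guide * novel_view_guidance


# Usage: a tiny 2-pixel "image" that almost matches the reference.
print(sculpting_loss([0.5, 0.4], [0.5, 0.5], [1.0, 1.0], [1.0, 1.0], 0.3))
```

The balance between the two parts is the design choice that matters: too little reference weight lets the shape drift from the input image, while too little guidance leaves unseen views unconstrained.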

Bootstrapped score distillation is proposed to boost the texture of the 3D object. Existing view-conditioned diffusion models are trained on very limited 3D data, so instead a diffusion model is fine-tuned on multi-view renderings of the scene being optimized. This keeps the model's 2D texture guidance consistent across views. The fine-tuned diffusion model then guides the optimization of the 3D texture, and the improved renderings are in turn used to fine-tune the model again. This "bootstrapping" technique yields detailed textures while preserving view consistency.
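The bootstrapping idea is an alternating loop: adapt the prior to the current renderings, let the adapted prior guide the texture, and repeat. The toy below keeps only that control flow and is an assumption-laden stand-in, not score distillation: a single number plays the role of the diffusion model, "fine-tuning" is a moving average over augmented renderings, and the hypothetical parameters `prior_lr`, `texture_lr` and `augment` are arbitrary. It does show why the loop helps consistency: every view is pulled toward the same scene-adapted prior, so the spread between views shrinks.

```python
from statistics import mean, pstdev


def bootstrap_texture(texture, prior, cycles=5, steps_per_cycle=10,
                      prior_lr=0.5, texture_lr=0.2, augment=(-0.05, 0.0, 0.05)):
    """Toy alternating loop in the spirit of bootstrapped score distillation.

    texture: per-view appearance values (stand-in for multi-view renderings).
    prior:   one number standing in for the diffusion model (hypothetical).
    """
    texture = list(texture)
    for _ in range(cycles):
        # Stand-in for fine-tuning: move the prior toward augmented renderings.
        augmented = [t + a for t in texture for a in augment]
        prior = (1 - prior_lr) * prior + prior_lr * mean(augmented)
        # Use the adapted prior to guide several texture updates.
        for _ in range(steps_per_cycle):
            texture = [t + texture_lr * (prior - t) for t in texture]
    return texture, prior


views = [0.2, 0.9, 0.5, 0.7]  # deliberately inconsistent starting views
boosted, final_prior = bootstrap_texture(views, prior=0.0)
print(f"spread before: {pstdev(views):.3f}, after: {pstdev(boosted):.6f}")
```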

As shown in the figure, the model produces creative 3D objects with clear, precise geometry and realistic textures that remain consistent across 360°. It delivers more complex objects and better textures than optimization-based approaches. Compared with other image-to-3D techniques, it produces high-resolution output in 360° renderings.

The authors also built a test benchmark of 300 images, combining real images with images generated by Stable Diffusion and DeepFloyd. Each image in the benchmark comes with a foreground alpha mask, a predicted depth map and a text prompt. For real images, the text prompts come from an image captioning model. The benchmark is publicly available.
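Each benchmark entry bundles several aligned pieces of data, which maps naturally onto a small record type. The layout below is an assumption for illustration: the field names, the `source` labels and the `validate` helper are hypothetical, and the published benchmark may organize its files differently.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass
class BenchmarkItem:
    """Hypothetical record for one image in the 300-image benchmark."""
    image_path: str
    mask_path: str   # foreground alpha mask
    depth_path: str  # predicted depth map
    prompt: str      # text prompt (from a captioning model for real images)
    source: Literal["real", "stable_diffusion", "deepfloyd"]


def validate(items, expected_count=300):
    """Return a list of problems: wrong item count, empty fields, unknown source."""
    problems = []
    if len(items) != expected_count:
        problems.append(f"expected {expected_count} items, found {len(items)}")
    for i, item in enumerate(items):
        for field in ("image_path", "mask_path", "depth_path", "prompt"):
            if not getattr(item, field):
                problems.append(f"item {i}: missing {field}")
        if item.source not in ("real", "stable_diffusion", "deepfloyd"):
            problems.append(f"item {i}: unknown source {item.source!r}")
    return problems


chair = BenchmarkItem("chair.png", "chair_mask.png", "chair_depth.png",
                      "a red wooden chair", "real")
print(validate([chair], expected_count=1))
```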

Accessibility

DreamCraft3D can generate unified, sharp and high-resolution 3D objects from a 2D reference image. The code for the model is available on GitHub along with its resources and example images, and a practical demonstration makes it easy for users to run the model themselves.

The paper, including its figures, is available on arXiv, and a video demonstration of the model is available on YouTube. The full implementation will be made publicly available soon.

3D content and animation are used across a diverse range of industries and applications. This research could be applied in virtual reality, film, video games, architecture, medicine and healthcare, and product design to bring imagined objects to life as realistic, consistent 3D content in a given environment.

Nuts and Bolts of DreamCraft3D

DreamFusion approached text-to-3D generation by using a pretrained text-to-image diffusion model as a prior while optimizing a 3D representation.
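DreamFusion's mechanism for using a frozen 2D diffusion model as a 3D prior is score distillation sampling (SDS): render an image x from the 3D parameters θ, add noise at a random timestep t, and use w(t)·(ε̂ − ε)·∂x/∂θ as the gradient, where ε is the injected noise and ε̂ is the model's noise prediction. The minimal sketch below computes only the image-space part w(t)·(ε̂ − ε) on a flattened image; `predict_noise` is a hypothetical callable standing in for a text-conditioned diffusion model, and backpropagation through the renderer is left out.

```python
import math
import random


def sds_grad(x, alpha_bar, predict_noise, weight=1.0, rng=random):
    """Image-space SDS gradient w(t) * (eps_hat - eps) for a flattened image x.

    alpha_bar:     cumulative noise-schedule value for the sampled timestep.
    predict_noise: hypothetical frozen denoiser; text conditioning is assumed
                   to be baked into this callable.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x]
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    # Forward diffusion: x_t = sqrt(alpha_bar) * x + sqrt(1 - alpha_bar) * eps
    x_t = [a * xi + s * ei for xi, ei in zip(x, eps)]
    eps_hat = predict_noise(x_t)
    # The denoiser's Jacobian is skipped, as in DreamFusion.
    return [weight * (eh - e) for eh, e in zip(eps_hat, eps)]


# Usage with a trivial stand-in denoiser that always predicts zero noise.
grad = sds_grad([0.1, -0.3, 0.7], 0.5, lambda x_t: [0.0] * len(x_t),
                rng=random.Random(0))
print(grad)
```

If the denoiser already "agrees" with the rendering (it recovers the injected noise exactly), the gradient vanishes, which is the intuition behind SDS: the 3D scene is pushed until its renderings look plausible to the 2D model.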

DreamCraft3D uses a 2D image generated from the text prompt to guide both geometry sculpting and texture enhancement. During geometry sculpting, a view-conditioned diffusion model provides crucial 3D guidance that keeps the geometry consistent. The model then focuses on texture quality through cyclic optimization: multi-view renderings are augmented and used to fine-tune a diffusion model with DreamBooth, which in turn provides multi-view consistent gradients that maximize texture quality.
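During sculpting, guidance from a view-conditioned diffusion model can be combined with guidance from a text-to-image model. A simple way to picture this is a weighted sum of the two image-space gradients followed by a descent step. This is an illustrative assumption: the weight `mu`, the function names and the plain gradient step are hypothetical, and the paper's exact weighting and optimizer may differ.

```python
def hybrid_guidance(grad_text, grad_view, mu=1.0):
    """Blend two score-distillation gradients element-wise.

    grad_text: gradient from a text-to-image diffusion prior (semantics).
    grad_view: gradient from a view-conditioned diffusion prior (3D consistency).
    mu:        hypothetical weight on the view-conditioned term.
    """
    if len(grad_text) != len(grad_view):
        raise ValueError("gradients must have the same length")
    return [gt + mu * gv for gt, gv in zip(grad_text, grad_view)]


def descent_step(params, grad, lr=0.01):
    """Plain gradient-descent update, chosen here for simplicity."""
    return [p - lr * g for p, g in zip(params, grad)]


g = hybrid_guidance([0.2, -0.1], [0.4, 0.3], mu=0.5)
x = descent_step([1.0, 1.0], g, lr=0.1)
print(g, x)
```

Raising `mu` trades some text-driven detail for stronger multi-view consistency; lowering it does the opposite.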

Evaluation

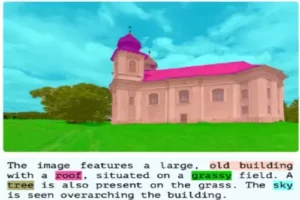

To check whether the generated 3D content matches the input image and conveys consistent semantics from different viewpoints, the technique was compared quantitatively with established baselines on four metrics: LPIPS and PSNR measure fidelity at the reference viewpoint, Contextual Distance assesses pixel-level congruence, and CLIP score estimates semantic coherence. The results show that the approach significantly surpasses the baselines in both texture consistency and fidelity.
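Two of the four metrics are simple enough to sketch directly. PSNR compares a rendering with the reference image through the mean squared error, PSNR = 10·log10(MAX² / MSE), and CLIP score is built on the cosine similarity between CLIP embeddings. The sketch below assumes flattened images with values in [0, 1] and embeddings already produced by a CLIP encoder elsewhere; LPIPS and Contextual Distance rely on features from pretrained networks and are not reproduced here. Published CLIP scores are often rescaled versions of this cosine similarity.

```python
import math


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for flattened images in [0, max_val]."""
    if len(pred) != len(target) or not pred:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


def cosine_similarity(u, v):
    """Cosine similarity between two embedding vectors (e.g. CLIP features)."""
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        raise ValueError("embeddings must be non-zero")
    return sum(a * b for a, b in zip(u, v)) / norm


print(psnr([0.5, 0.5], [0.6, 0.4]))
print(cosine_similarity([1.0, 2.0], [2.0, 4.0]))
```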

In the qualitative comparison, all the text-to-3D methods suffer from multi-view consistency issues. ProlificDreamer offers realistic textures but fails to form a plausible 3D object. Image-to-3D methods such as Make-it-3D create high-quality frontal views but struggle with geometry. Magic123, enhanced by Zero-1-to-3, does better at regularizing geometry, but both methods produce overly smoothed textures and geometric details. In contrast, DreamCraft3D's bootstrapped score distillation increases imaginative diversity while maintaining semantic consistency.

The image above shows the qualitative comparison between the different models. DreamCraft3D generates clear, sharp results in both texture and geometry.

Final Thoughts

DreamCraft3D is an innovative approach in computer vision that generates complex 3D objects from a 2D reference image. The model produces high-quality, high-definition 3D objects, opening creative and diverse prospects for 3D content generation. The study is presented as an important step toward democratizing 3D content creation and shows great promise for future applications.

