Get a look at where robotics is heading. GRID is a platform that aims to give robots knowledge, flexibility, and a measure of human-like creativity in how they compose skills. Sai Vemprala, Shuhang Chen, Abhinav Shukla, Dinesh Narayanan, and Ashish Kapoor are the researchers behind the GRID study.

Developing intelligence for robots and autonomous systems is costly and time-consuming. Most current robots are specialized for a single task, which makes them difficult to apply at scale. Training data is also limited, which complicates the use of deep learning in these systems.

To address these challenges, the researchers introduced the General Robot Intelligence Development (GRID) platform. It provides a framework with which robots can learn, compose, and adapt skills according to their physical capabilities, environmental constraints, and goals. The platform tackles AI difficulties in robotics by using foundation models that understand the physical world.

GRID has been built from the ground up so that it can adapt to a wide range of robots, vehicles, hardware configurations, and software communication protocols. Its modular structure makes it easy to integrate deep machine learning modules and existing foundation models, so they can be applied to a broad spectrum of robotics problems.

The authors demonstrate the platform in a variety of aerial robotics scenarios and show how GRID significantly accelerates the creation of intelligent robotic systems.

Related Prior Work and Its Limitations

Work on composing pretrained models with LLMs is limited in how it integrates tools and external models into large language models. Many ways of customizing LLMs with particular instructions and APIs have been explored, including Toolformer, Gorilla, Socratic Models, VisProg, and others. These approaches, however, are task-specific and do not generalize.

Representation learning is an active research area, and much of it is relevant to robotics. Examples for control include SayCan, RoboAgent, VC-1, R3M, VIP, COMPASS, and PVR, among many others. Approaches to task learning in robotics can be divided into three categories. The first is task-specific training, in which a separate module is built for each task. Such approaches have been developed for a variety of common robotic tasks, including visual localization, mapping, path planning, and control. The second is multi-task learning, in which models are trained jointly to solve many tasks. The third is a class of approaches that perform task-agnostic pre-training of representations, which can then be fine-tuned for a specific task.

Robotic and autonomous systems need large datasets. Existing datasets cover a wide range of scenarios, including autonomous driving, manipulation, and more.

Multimodal representations are increasingly important, especially for tasks such as manipulation, localization, and human-robot interaction. Combining the data from many sensors, however, remains difficult.

Transformers were originally designed for language processing. In robotics, they are applied to tasks such as trajectory and motion planning and to reinforcement learning for multi-task learning.

Introduction to GRID

To overcome these limitations, the researchers propose a general solution they call general robot intelligence. This is a robot's ability to learn from its environment, combine what it has learned, and adapt those skills to fit its physical capabilities and surroundings. Achieving this requires robot intelligence that is modular, flexible, and adaptable, so that it can be applied across a wide range of robot setups, sensor arrays, and environments, which in turn makes the development of machine intelligence more accessible.

In addition, the study presents Foundation Models as the basis of General Robot Intelligence. However, data scarcity, sensor variation, the need for edge processing, and safety concerns make developing these models for robotics particularly difficult. The GRID platform presents a practical strategy for addressing these difficulties, using a novel idea called the Foundation Mosaic to handle data shortages and to apply Foundation Models to various robotic tasks.

The goal of GRID is to move beyond application-specific intelligence and bring in a new era of flexible and adaptive robot intelligence.

Future scope of GRID

Future work includes advancing robot capabilities in real-world environments and incorporating feedback and techniques such as reinforcement learning and model fine-tuning. The platform paves the way toward high-level robotic intelligence and aims to improve the safety of robots operating in real-world environments. The approach could also be applied to environmental and security problems, among a wide range of other applications.

Research paper and Code accessibility

The research paper is available on arXiv, and the implementation code is open source and accessible on GitHub. Both are public, so anyone can use them for their own studies.

Potential of GRID in different fields

GRID has potential applications in many fields. Robots built with it could be used for jobs like driverless delivery, disaster recovery, and hazardous-material handling. In healthcare, these techniques could help with surgery, rehabilitation, or patient care, where accuracy and safety are important.

The platform also applies to autopilots and drones, which can benefit from training and fine-tuning to improve their safety and decision-making abilities.

How GRID Works

The main aim of GRID is to make robotics more accessible to researchers, developers, and organizations. Building robots has typically required specialized knowledge and high cost, and data collection and model training have been difficult. To overcome these challenges, GRID uses a modular architecture that integrates data, foundation models, and large language models. The inspiration comes from how models like GPT-4 help accelerate software development.

The architecture and framework of GRID are designed to be user-friendly. GRID aims to accelerate innovation and broaden participation in the creation of intelligent, adaptive, and responsible robotic systems.

Model Architecture:

GRID generates code that uses suitable machine learning modules to accomplish a given goal. Robot foundation models form the core of GRID's intelligence and allow the robot to make non-myopic decisions when it encounters an unforeseen situation. The second component addresses data: because data is scarce in robotic domains, training such models is non-trivial, so the architecture relies on both high-fidelity and low-fidelity simulation as well as a platform for collecting real-world data.

GRID can orchestrate a collection of Foundation Models and other tools to tackle complicated robotics tasks utilizing simulation as well as real-world data. The orchestrator interacts with the robot, the world, and the models via APIs to generate a deployable program with embedded ML that solves the required goal.

Access to high-fidelity simulation also enables distinctive learning mechanisms, such as simulation feedback during pre-training, inference, and fine-tuning. Finally, the safe and successful deployment of robot intelligence in the real world is critical, and GRID aims to build on recent advances in this area.

Task-specific AI models have given way to larger, more adaptable models. In language processing, models such as GPT-4 can perform tasks such as translation and text summarization. Vision tasks are seeing similar improvements. This means that a single, adaptable model can replace a number of task-specific models.

However, transferring these ideas from language processing to robotics is difficult. There is not enough data for training robots. As a result, existing models must be reused, even if they were not designed for robots.

The Foundation Mosaic can be summarized as follows. To solve the specified task, the Orchestrator uses an LLM to intelligently compose a set of available models with the sensors of the robot operating in the environment. The models produce language-grounded representation summaries, or features/tokens, in a shared multimodal representation space.

The LLM consumes this information to generate and execute code in a feedback loop that causes the robot to take actions in the environment. A central feature of the Foundation Mosaic is its ability to seamlessly exploit domain-specific Foundation Models trained on large-scale datasets while building a language-based understanding of the environment.
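
As a rough illustration of the composition described above, the following minimal Python sketch registers a few stand-in models, has each one produce a language-grounded summary of a sensor reading, and assembles those summaries into a prompt for an LLM. Every name here (`MosaicModel`, `Orchestrator`, `build_prompt`, the toy models and sensor keys) is hypothetical and chosen for illustration only. It is not GRID's actual API, and the LLM call itself is left out.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class MosaicModel:
    """A stand-in for one model in the mosaic (hypothetical, not a GRID class)."""
    name: str
    sensor: str                      # which sensor stream the model consumes
    summarize: Callable[[Any], str]  # returns a language-grounded summary


@dataclass
class Orchestrator:
    """Collects model summaries and composes them into an LLM prompt."""
    models: List[MosaicModel] = field(default_factory=list)

    def register(self, model: MosaicModel) -> None:
        self.models.append(model)

    def describe_scene(self, observations: Dict[str, Any]) -> List[str]:
        # Run every model whose sensor is present in the current observations.
        return [
            f"{m.name}: {m.summarize(observations[m.sensor])}"
            for m in self.models
            if m.sensor in observations
        ]

    def build_prompt(self, task: str, observations: Dict[str, Any]) -> str:
        lines = [f"Task: {task}", "Scene summaries:"]
        lines += [f"- {s}" for s in self.describe_scene(observations)]
        lines.append("Write code that makes the robot complete the task.")
        return "\n".join(lines)


# Toy models: real ones would be pretrained detectors, depth estimators, etc.
detector = MosaicModel("object_detector", "rgb",
                       lambda img: f"sees {', '.join(img['objects'])}")
ranger = MosaicModel("depth_estimator", "depth",
                     lambda d: f"nearest obstacle at {min(d):.1f} m")

orchestrator = Orchestrator()
orchestrator.register(detector)
orchestrator.register(ranger)

prompt = orchestrator.build_prompt(
    "land on the helipad",
    {"rgb": {"objects": ["helipad", "tree"]}, "depth": [4.2, 7.5, 3.1]},
)
print(prompt)
```

In this sketch the shared representation is plain text, which keeps the example small. The description above also allows for features or tokens in a shared multimodal space, which this example does not attempt to model.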

Robots differ in their components and control methods, so a one-size-fits-all solution does not work. GRID uses an approach called the Foundation Mosaic, which integrates various models. These models are coordinated by a Large Language Model (LLM) to help robots understand their environment.

The Mosaic resembles a neural network in that its composition can be adjusted to improve performance. It combines many models in order to make robots intelligent. However, getting these models to work together is difficult, and GRID relies on large language models to assist with this.

Data: Multimodality and Scarcity

Because of the wide range of sensory input needed, robotics presents distinctive obstacles. The information involved is not simply text and images; it also includes touch feedback, depth maps, temperature readings, and LiDAR data. All of these data are necessary for robots to make advanced decisions.

Combining these data modalities is difficult. A camera, for example, captures images at a different rate than a motion sensor produces readings. Synchronizing these data streams in time is critical for the robot to function properly.
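
One common way to line up streams sampled at different rates is nearest-timestamp matching with a tolerance. The sketch below is a generic illustration of that idea, not GRID's synchronization method. The function name `align_nearest`, the sample rates, and the 20 ms tolerance are all assumptions made for the example.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple


def align_nearest(
    ref_times: Sequence[float],
    other_times: Sequence[float],
    tolerance: float,
) -> List[Tuple[int, Optional[int]]]:
    """For each reference timestamp, find the index of the closest timestamp
    in `other_times` (which must be sorted). The index is None when the
    closest sample is farther away than `tolerance`."""
    pairs: List[Tuple[int, Optional[int]]] = []
    for i, t in enumerate(ref_times):
        pos = bisect_left(other_times, t)
        # Candidates are the neighbors on either side of the insertion point.
        candidates = [j for j in (pos - 1, pos) if 0 <= j < len(other_times)]
        best = min(candidates, key=lambda j: abs(other_times[j] - t), default=None)
        if best is not None and abs(other_times[best] - t) > tolerance:
            best = None
        pairs.append((i, best))
    return pairs


# Hypothetical rates: a 10 Hz camera and a 100 Hz motion sensor.
# Timestamps are in milliseconds to keep the arithmetic exact.
camera_times_ms = [0, 100, 200, 300]
imu_times_ms = list(range(0, 250, 10))  # 0, 10, ..., 240

matches = align_nearest(camera_times_ms, imu_times_ms, tolerance=20)
for cam_idx, imu_idx in matches:
    print(cam_idx, imu_idx)
```

The last camera frame has no motion-sensor sample within the tolerance, so it is paired with `None` rather than with stale data. Whether to drop, interpolate, or hold such frames is a design choice that depends on the task.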

Large datasets are scarce in robotics. In contrast to domains such as natural language processing and computer vision, collecting data for robots is costly and limited to narrow contexts.

To address these issues, GRID employs AirGen, a highly realistic simulation platform. It can simulate a variety of situations, allowing for a wide range of training scenarios, and it can also create multimodal data for training purposes. Combining simulation with neural scene reconstruction and generative AI enables the generation of more realistic data.

Learning, Inference, Fine-Tuning, and Safety:

Moving from lab settings to unpredictable real-world environments raises obstacles in robotics. Simulation provides a suitable setting for robots to learn and refine their behaviors, and simulation feedback is essential for helping robots develop complex reasoning.

In this method, a Large Language Model (LLM) is given a robotic task to accomplish. The code the LLM generates may be incorrect, so it is run in a simulation environment to get immediate feedback on its performance. This feedback allows the LLM to iteratively refine its code until it solves the problem.
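
The loop described above can be sketched as follows. This is a minimal illustration with stubbed components, not GRID's implementation: `fake_llm` stands in for a real LLM call, `run_in_simulation` stands in for a simulator such as AirGen, and the task is a toy altitude target. All names and values are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

TARGET_ALTITUDE = 10.0  # toy task: the generated program must reach this altitude


def run_in_simulation(program: str) -> Tuple[bool, str]:
    """Stand-in simulator: executes the candidate program and reports feedback."""
    scope: dict = {}
    try:
        exec(program, scope)      # never exec untrusted code outside a sandbox
        altitude = scope["fly"]()
    except Exception as err:      # crashes become textual feedback
        return False, f"program crashed: {err!r}"
    if abs(altitude - TARGET_ALTITUDE) > 0.5:
        return False, f"reached {altitude} m, expected {TARGET_ALTITUDE} m"
    return True, "task solved"


def fake_llm(task: str, feedback: List[str]) -> str:
    """Stand-in LLM: returns a better candidate each time it receives feedback."""
    candidates = [
        "def fly():\n    return climb(10)",  # bug: climb is undefined
        "def fly():\n    return 5.0",        # runs, but reaches the wrong altitude
        "def fly():\n    return 10.0",       # correct
    ]
    return candidates[min(len(feedback), len(candidates) - 1)]


def refine(task: str, llm: Callable[[str, List[str]], str],
           max_rounds: int = 5) -> Optional[str]:
    """Generate code, test it in simulation, and feed failures back to the LLM."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        program = llm(task, feedback)
        ok, message = run_in_simulation(program)
        if ok:
            return program
        feedback.append(message)
    return None  # no working program within the round budget


solution = refine("take off and hover at 10 m", fake_llm)
print(solution)
```

The round budget (`max_rounds`) is an assumption added so the loop always terminates. A real system would also need to sandbox the generated code rather than call `exec` directly.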

The method also involves fine-tuning the LLM using this direct feedback. Doing so improves safety and alignment and helps ensure that robots behave safely when deployed in the real world.

Individual foundation models do not always fit a specific robotics task. Such models are fine-tuned using simulation feedback to make them more suitable.

Safety is a major concern in robotics. Foundation models trained at very large scale must be used with care. GRID enables new ideas to be tested and trialed in a controlled setting, which helps in understanding potential failure modes.

Conclusion

GRID is a new approach to instilling intelligence in robotics and autonomous systems. The fundamental concept is to build on recent breakthroughs in representation learning and foundation models to provide a framework that can considerably reduce the effort required of roboticists and machine learning developers. Its ability to coordinate interactions among diverse representations, robot foundation models, simulation, and real-world operations enables ML techniques such as simulation feedback, which is novel and will lower the barrier to entry into robotics. GRID is a developing platform, and the authors intend to accommodate a variety of form factors, such as robotic arms.

References

https://github.com/ScaledFoundations/GRID-playground

https://arxiv.org/pdf/2310.00887v2.pdf

Similar Posts

-

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

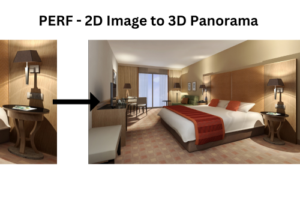

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas