HyperFields is a method for generating 3D scenes, represented as neural radiance fields (NeRFs), directly from text. Instead of optimizing a new model for every prompt, it learns a single network that turns a text description into a 3D scene. The research was carried out by Sudarshan Babu, Richard Liu, Avery Zhou, Michael Maire, Greg Shakhnarovich, and Rana Hanocka from the Toyota Technological Institute at Chicago and the University of Chicago.

The researchers developed HyperFields to generate 3D scenes from text input, with the option to fine-tune the result. To translate written descriptions into 3D scenes, they use a dynamic hypernetwork that predicts the weights of a NeRF. They use NeRF distillation to fit many scenes into this single network, which makes it adaptable to new prompts.

A single network can represent over 100 different scenes. HyperFields handles both in-distribution scenes (similar to those seen during training) and out-of-distribution scenes (new ones it has not seen). Fine-tuning on new scenes is also more efficient, producing them up to 10x faster than per-scene optimization. The ablation studies show that both the dynamic hypernetwork architecture and NeRF distillation are important to HyperFields’ performance.

Related Studies and Background of HyperFields

Prior work relevant to HyperFields falls into three areas: neural radiance fields, score-based 3D synthesis, and hypernetworks.

Neural Radiance Fields for 3D Representation

Point clouds, meshes, voxels, and signed-distance fields are among the representations used in 3D generative modeling. This study focuses on Neural Radiance Fields (NeRFs), which represent a 3D scene as a learned function of position and viewing direction. NeRFs were originally developed for multi-view reconstruction, but they have since been used for image editing, 3D surface extraction, and large-scale 3D modeling.
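To make "representing a scene" concrete: a NeRF returns a color and a density for each sample along a camera ray, and a pixel is formed by compositing those samples. The sketch below implements the standard NeRF volume-rendering quadrature for one ray, assuming the per-sample colors, densities, and sample spacings are already given; the function name `render_ray` is ours, not from the HyperFields code.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(colors: Sequence[RGB], sigmas: Sequence[float], deltas: Sequence[float]) -> RGB:
    """Composite samples along one ray with the standard NeRF quadrature:
    C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i,
    where T_i = exp(-sum_{j<i} sigma_j * delta_j) is the accumulated transmittance."""
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for c, sigma, delta in zip(colors, sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # how much this sample contributes
        for k in range(3):
            out[k] += weight * c[k]
        transmittance *= 1.0 - alpha            # light that survives past this segment
    return (out[0], out[1], out[2])
```

For example, a ray whose first sample is opaque and green returns green, because the red sample behind it receives zero transmittance.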

Score-Based 3D Generation

Some systems learn the distribution of 3D models directly, while others rely on 2D images. The latter replace the original photometric reconstruction loss with a guidance loss, typically provided by text-image models or 2D diffusion models. The HyperFields authors are among those who use score-based guidance from Stable Diffusion to build NeRF models.
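For reference, the score-based guidance used by DreamFusion, Score Distillation Sampling (SDS), can be written as the gradient below. This follows DreamFusion's own notation rather than anything defined in this article: θ are the NeRF parameters, x = g(θ) is a rendered image, z_t is x noised to diffusion timestep t with noise ε, y is the text prompt, ε̂_φ is the frozen diffusion model's noise prediction, and w(t) is a timestep weighting.

```latex
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}\big(\phi, x = g(\theta)\big)
  = \mathbb{E}_{t,\epsilon}\left[ w(t)\,\big(\hat{\epsilon}_\phi(z_t; y, t) - \epsilon\big)\,
    \frac{\partial x}{\partial \theta} \right]
```

Intuitively, the NeRF is nudged so that its renders look, to the diffusion model, like plausible images of the prompt.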

Hypernetworks

Hypernetworks are networks that generate the weights of other networks. HyperFields uses a dynamic hypernetwork that generates NeRF weights conditioned on the activations of the NeRF’s own layers, and the researchers use it to learn the mapping from text to NeRFs.
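The basic hypernetwork idea fits in a few lines. In this minimal sketch (hypothetical names, tiny sizes, pure Python), a linear map `H` turns a conditioning vector `z` into the flattened weight matrix and bias of a target linear layer, which is then applied with those generated weights. It illustrates a plain hypernetwork only, not the dynamic variant HyperFields uses.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def linear(W: Matrix, b: Vector, x: Vector) -> Vector:
    """y = W x + b, with W stored as a list of output rows."""
    return [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]


def hypernet(H: Matrix, z: Vector, n_out: int, n_in: int) -> Tuple[Matrix, Vector]:
    """Map conditioning vector z to (W, b) for a target n_out x n_in linear layer.
    H has n_out * n_in + n_out rows, one per generated parameter."""
    params = linear(H, [0.0] * len(H), z)
    W = [params[r * n_in:(r + 1) * n_in] for r in range(n_out)]
    b = params[n_out * n_in:]
    return W, b
```

Different conditioning vectors produce different target layers from the same `H`; in HyperFields the conditioning comes from the text prompt.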

Basics about HyperFields

Recent progress in text-to-image generation has sparked interest in generating 3D scenes from text, an interest reinforced by the popularity of Neural Radiance Fields (NeRFs).

Most methods for producing 3D scenes from text require a lengthy optimization for each individual prompt, which makes the process slow and cumbersome. The authors instead propose a hypernetwork: a single network trained to generate the weights of NeRF networks. Once trained, it can generate NeRF weights for new text prompts with little or no fine-tuning, making scene generation faster and more general.

HyperFields addresses the difficulties of training such a network through two design decisions. First, the hypernetwork predicts the weights of each NeRF layer from the activations of the previous NeRF layer. Second, the authors introduce NeRF distillation, which uses pretrained teacher NeRFs to provide precise supervision, stabilize training, and produce high-quality scenes.

HyperFields can create a scene in a single forward pass and can adapt to new, unseen prompts with a few fine-tuning steps. The ablation studies indicate that the dynamic hypernetwork and NeRF distillation are both critical to this performance.

Research Material and Implementation Code Availability

The research paper is available on arXiv, and the implementation code is open source on GitHub.

Potential Applications of HyperFields

HyperFields has potential applications in graphics and 3D content generation, including content creation for the entertainment industry. Film, animation, and game studios could use it to build 3D scenes, characters, and objects from text, reducing the time spent on manual modeling. This could speed up content development and let artists devote more of their time to creative work.

Its out-of-distribution generalization also has interesting implications for fields such as architecture and interior design. HyperFields could let architects and designers quickly visualize many designs and layouts, which could improve collaboration and decision-making during the design process. By providing an efficient way to build 3D models, HyperFields has the potential to change 3D content development and design across industries.

Methodology of HyperFields

The method rests on two main contributions: the dynamic hypernetwork architecture and NeRF distillation.

Dynamic Hypernetwork:

The dynamic hypernetwork consists of a Transformer and a series of MLP modules. It takes as input a text prompt encoded with a pretrained BERT model and passes it through the Transformer to produce a conditioning token (CT) that carries the scene information. Each MLP module receives the activations of the previous NeRF layer and is responsible for generating the parameters of the next one. As a result, the hypernetwork can generate different NeRF MLP weights for every combination of 3D point and viewing direction.

The HyperFields system receives a text prompt that has been encoded by a text encoder. The text latents are fed into a Transformer module, which generates a conditioning token. This conditioning token conditions each of the hypernetwork’s MLP modules. The first hypernetwork MLP predicts the weights of the NeRF MLP’s first layer. The activations from this first predicted NeRF MLP layer are then fed into the second hypernetwork MLP, which predicts the weights of the NeRF MLP’s second layer. The remaining scene-conditioned hypernetwork MLPs follow the same pattern: each takes the activations of the previously predicted NeRF MLP layer as input and generates the weights of the next layer.
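The layer-by-layer flow described above can be sketched as follows. This is an illustrative pure-Python version under several assumptions: the conditioning token is a plain vector standing in for the Transformer output, each hypernetwork module is a single linear map (the article describes two-layer ReLU MLPs), the first module sees the encoded input point, the 4 outputs are read as RGB plus density, and the normalization and stop-gradient used in training are omitted. `HyperModule` and `dynamic_nerf_forward` are hypothetical names.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, x: Vector) -> Vector:
    """Plain-Python matrix-vector product."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


class HyperModule:
    """Hypothetical hypernetwork module (one linear map, for brevity).
    Input: conditioning token concatenated with the activations entering a NeRF layer.
    Output: that NeRF layer's weights (n_out x n_in) and bias."""

    def __init__(self, token_dim: int, n_in: int, n_out: int, rng: random.Random):
        self.n_in, self.n_out = n_in, n_out
        n_params = n_out * n_in + n_out
        self.H = [[rng.gauss(0.0, 0.1) for _ in range(token_dim + n_in)]
                  for _ in range(n_params)]

    def __call__(self, token: Vector, act: Vector) -> Tuple[Matrix, Vector]:
        params = matvec(self.H, token + act)  # list concatenation, not addition
        W = [params[r * self.n_in:(r + 1) * self.n_in] for r in range(self.n_out)]
        b = params[self.n_out * self.n_in:]
        return W, b


def dynamic_nerf_forward(token: Vector, x: Vector, modules: List[HyperModule]) -> Vector:
    """Evaluate the predicted NeRF MLP at one encoded input x. Each layer's weights
    are predicted from the activations entering it, so inputs get different weights."""
    act = x
    for i, module in enumerate(modules):
        W, b = module(token, act)
        pre = [s + bi for s, bi in zip(matvec(W, act), b)]
        act = pre if i == len(modules) - 1 else relu(pre)  # no ReLU on the output layer
    return act


# Usage: 3-d encoded point -> two hidden layers of width 8 -> 4 outputs (assumed RGB + density).
rng = random.Random(0)
sizes = [3, 8, 8, 4]
token_dim = 5
modules = [HyperModule(token_dim, n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
token = [rng.gauss(0.0, 1.0) for _ in range(token_dim)]
out = dynamic_nerf_forward(token, [0.1, -0.2, 0.3], modules)
```

The key design point is that `module(token, act)` sees both the scene (through the token) and the current activations, which is what the ablations credit for the model's ability to hold many scenes at once.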

NeRF Distillation:

They first train a separate NeRF for each text prompt, as DreamFusion does. The HyperFields architecture is then trained under the supervision of these single-scene teacher NeRFs. At every iteration, they sample a prompt and camera viewpoints, then render images from those viewpoints with both the pretrained teacher NeRF and the HyperFields network. The distillation loss is the difference between the images rendered by the teacher NeRFs and by HyperFields. This distillation process lets HyperFields fit many text prompts and learn a general text-to-NeRF mapping.
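One distillation step can be sketched as below. The article only says the loss is the difference between teacher and student renders; using pixel-wise mean squared error, representing images as flat lists, and indexing cameras by integers 0..99 are assumptions made for illustration. `distillation_loss` and `sample_batch` are hypothetical helpers, and no gradient computation is shown.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[float]                # flattened pixel values, for simplicity
Teacher = Callable[[int], Image]   # camera index -> render from a frozen per-prompt NeRF
Student = Callable[[str, int], Image]  # (prompt, camera index) -> HyperFields render


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(prompt: str, views: Sequence[int],
                      teachers: Dict[str, Teacher], student: Student) -> float:
    """Average pixel-wise MSE between the teacher NeRF for `prompt` and the
    student, both rendered from the same camera views."""
    losses = [mse(student(prompt, v), teachers[prompt](v)) for v in views]
    return sum(losses) / len(losses)


def sample_batch(prompts: Sequence[str], n_views: int,
                 rng: random.Random) -> Tuple[str, List[int]]:
    """Pick one training prompt and n_views random camera indices."""
    return rng.choice(list(prompts)), [rng.randrange(100) for _ in range(n_views)]


# Usage with stub one-pixel "renderers" standing in for real NeRFs.
teachers: Dict[str, Teacher] = {
    "a red chair": lambda view: [1.0, 0.0, 0.0],
    "a blue bowl": lambda view: [0.0, 0.0, 1.0],
}
```

Because every prompt has its own teacher, the student receives a direct image-space target for each scene, which is the stable supervision the authors contrast with SDS.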

To overcome spectral bias, they use a multiresolution hash grid together with sinusoidal encodings. The NeRF MLP has six layers whose weights are predicted by the hypernetwork, while each dynamic hypernetwork MLP module has two layers with ReLU non-linearities. To stabilize training, the design uses normalization and a stop-gradient operation.
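Spectral bias means a plain MLP favors smooth, low-frequency functions; encoding inputs with sines and cosines at increasing frequencies helps it represent fine detail. The sketch below shows only a sinusoidal encoding in the style of the original NeRF paper; the multiresolution hash grid is not shown, and the number of frequencies and the choice to keep the raw coordinates are assumptions rather than HyperFields' exact settings.

```python
import math
from typing import List, Sequence


def sinusoidal_encoding(p: Sequence[float], n_freqs: int) -> List[float]:
    """gamma(p) = [p, sin(2^k * pi * p), cos(2^k * pi * p) for k in 0..n_freqs-1],
    applied to every coordinate of p."""
    out = list(p)  # keep the raw coordinates (a common convention)
    for k in range(n_freqs):
        freq = (2.0 ** k) * math.pi
        out.extend(math.sin(freq * x) for x in p)
        out.extend(math.cos(freq * x) for x in p)
    return out
```

A 3D point with 4 frequencies becomes a 3 + 2 * 3 * 4 = 27-dimensional feature.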

Experimental Results of HyperFields

The researchers evaluate HyperFields through several experiments covering in-distribution generalization, accelerated out-of-distribution convergence, amortization benefits, and ablations:

In-Distribution Generalization

The model is trained on a subset of color-shape combinations and can then generate unseen color-shape combinations zero-shot at inference time. The researchers train on several shapes and colors while holding out particular color-shape combinations. The model can then generate scenes for these held-out combinations that are comparable to the training scenes. This demonstrates the model’s in-distribution generalization: it extends to new combinations of colors and shapes that it has only seen separately.
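The held-out protocol can be illustrated with a small split function. The prompt template `"a {color} {shape}"` and the example colors and shapes are hypothetical; the point is that every color and every shape still appears in training, while specific pairings appear only at test time.

```python
from itertools import product
from typing import List, Set, Tuple

Combo = Tuple[str, str]  # (color, shape)


def split_combinations(colors: List[str], shapes: List[str],
                       held_out: Set[Combo]) -> Tuple[List[str], List[str]]:
    """Return (train_prompts, test_prompts) over all color-shape pairs,
    routing the held-out pairs to the test set."""
    train: List[str] = []
    test: List[str] = []
    for color, shape in product(colors, shapes):
        prompt = f"a {color} {shape}"
        (test if (color, shape) in held_out else train).append(prompt)
    return train, test


train, test = split_combinations(["red", "blue"], ["chair", "bowl"], {("red", "bowl")})
```

Here "red" and "bowl" are both seen during training, just never together, which is what makes the test prompt in-distribution yet unseen.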

Accelerated Out-of-Distribution Convergence

The researchers also investigate HyperFields’ ability to produce shapes and attributes that were not seen during training, known as out-of-distribution inference. Compared with DreamFusion, HyperFields can be fine-tuned on these unseen prompts and converges faster. Even after extended training, DreamFusion models struggle to match the quality of HyperFields’ out-of-distribution scenes, showing that HyperFields adapts better to such prompts. On average, the researchers observe a 5x speedup in convergence.

Amortization Benefits

They show that the cost of training the teacher NeRFs and HyperFields is easily amortized. The overhead is relatively low, about two hours, and it is offset by the ability to generate unseen combinations in a single forward pass. DreamFusion, by comparison, must run a lengthy optimization for each test scene, so even including the distillation overhead, HyperFields is an order of magnitude faster. This makes it well suited to rapid scene generation.
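The amortization argument is simple arithmetic: a one-off overhead pays for itself once enough scenes are generated. In this sketch only the two-hour overhead comes from the article; the per-scene times in the usage line are purely illustrative placeholders, not measured DreamFusion or HyperFields figures, and the helper names are hypothetical.

```python
def total_time(overhead_h: float, per_scene_h: float, n_scenes: int) -> float:
    """Total hours to produce n_scenes: a one-off cost plus a per-scene cost."""
    return overhead_h + per_scene_h * n_scenes


def break_even_scenes(overhead_h: float, fast_per_scene_h: float,
                      slow_per_scene_h: float) -> int:
    """Smallest number of scenes at which the amortized method (with overhead)
    is no slower than running a per-scene optimization for every scene."""
    if fast_per_scene_h >= slow_per_scene_h:
        raise ValueError("the amortized method must be faster per scene")
    n = 0
    while total_time(overhead_h, fast_per_scene_h, n) > total_time(0.0, slow_per_scene_h, n):
        n += 1
    return n


# Illustrative only: 2 h overhead, near-instant forward passes, 0.5 h per optimized scene.
n_star = break_even_scenes(2.0, 0.0, 0.5)
```

Beyond the break-even point, every additional scene widens the gap, which is why the speedup grows with the number of scenes generated.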

Ablations

They highlight the importance of using the activations of the previous layer to generate the next layer’s NeRF weights in their hypernetwork design. Without this conditioning, the model’s capacity to fit many scenes drops sharply, and it fails to distinguish between similar styles. They also show that relying on DreamFusion’s Score Distillation Sampling (SDS) instead of NeRF distillation can cause mode collapse and hinder the hypernetwork’s learning, making it difficult to generate distinct geometry for different prompts.

Final Thoughts

HyperFields is a framework for text-to-NeRF generation that produces a NeRF network for a prompt in a single forward pass. The findings indicate that it learns a general representation of semantic scenes. Its hypernetwork architecture, combined with NeRF distillation, learns an effective mapping from text token inputs to the NeRF latent space. The experiments show that it can fit over 100 scenes into one model and predict high-quality NeRFs for unseen prompts, either zero-shot or with a few fine-tuning steps. Compared with previous work, training on many scenes significantly speeds up convergence on new scenes.

References

https://arxiv.org/pdf/2310.17075.pdf

https://github.com/threedle/hyperfields

Similar Posts

-

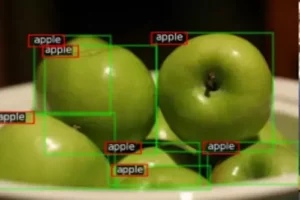

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

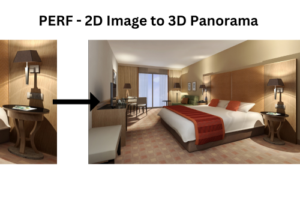

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas