Prepare to be inspired and astounded! Discover the IMS Toucan system from the Blizzard Challenge 2023, which converts text to audio in a given accent and speaker voice. Florian Lux, Julia Koch, Sarina Meyer, Thomas Bott, Nadja Schauffler, Pavel Denisov, Antje Schweitzer, and Ngoc Thang Vu from the University of Stuttgart, Germany, are the authors of this study.

Compared to their submission to the Blizzard Challenge 2021, they have made several improvements for their entry in the Blizzard Challenge 2023. Their approach uses a rule-based system to convert text into phonemes, including the disambiguation of homographs in French. A fast and efficient non-autoregressive synthesis architecture then converts these phonemes into spectrograms as intermediate representations.

Given an input text, the Toucan model generates audio in the requested language accent and speaker voice.

Prior Failed Designs

Several components that the team experimented with were left out of the final system: adversarial feedback, word and sentence embeddings, variational variance predictors, and speech quality enhancement. Each is discussed below.

Adversarial Feedback: They wanted to find out whether the gap in naturalness between end-to-end models and other systems is related to how feedback is provided during training. They tested adding a discriminator that learns to distinguish between real and generated spectrograms. After internal A/B testing showed no improvement, they chose not to use this strategy in their final submission.

Word and Sentence Embeddings: They also experimented with feeding word and sentence embeddings into their text-to-speech system as additional cues. Sentence embeddings capture the meaning and structure of a sentence, whereas word embeddings provide information about the meaning and importance of individual words. Such embeddings can help generate more natural-sounding speech, but their tests showed no benefit. As a result, they decided not to include them in their final submission.

Variational Variance Predictors

The researchers focused on modeling variation in pitch, duration, and energy to make the generated speech sound more realistic, and experimented with several generative methods. They first considered a variational autoencoder (VAE), but this proved difficult: because the data is one-dimensional, it was hard to build an effective information bottleneck. They then attempted to generate these variations with a Generative Adversarial Network (GAN), using the Wasserstein distance as the loss function.

Improving Speech Quality

They applied speech enhancement to the data of speaker AD while leaving the data of speaker NEB unchanged. They looked for a speech enhancement model that could reduce reverb in the NEB dataset and improve the quality of voice recordings made with different microphones. They tried VoiceFixer for this purpose, but the results were not convincing enough for them to continue. They believe that a freely available speech restoration model with performance comparable to proprietary tools could considerably improve their system’s sound quality.

Introduction to Improved IMS Toucan System

The 2023 Blizzard Challenge focuses on creating natural speech in French using two datasets of female French speakers. There are two tasks in this competition: the “hub task” and the “spoke task.”

Participants in the hub task have to build a high-quality French speech system using only publicly available materials and models. The spoke task does not have these constraints; its goal is to produce speech that resembles the speaker of the corresponding dataset without losing naturalness. This is a difficult problem, especially since the spoke task provides far less data than text-to-speech training usually requires.

Both the hub and spoke tasks use single-speaker French datasets recorded by native French speakers from France. The hub task dataset contains five LibriVox audiobooks totaling 289 chapters and 51 hours of speech by Nadine Eckert-Boulet (NEB). The spoke task dataset has 2 hours of speech by speaker Aurélie Derbier (AD), with 60% of the utterances coming from various books and transcripts.

The competition organizers ran listening tests to assess system performance. Speech quality and speaker similarity were assessed using mean opinion scores (MOS) for speech generated from book sentences. An intelligibility test in the hub task required listeners to write down a sentence they heard, and homograph pronunciation accuracy was also tested. The listeners included native speakers, speech experts, and naive listeners.

To improve the system, the researchers built on their earlier submission to the Blizzard Challenge 2021. Since then, they had introduced several ideas concerning multilinguality, controllability, and low-resource settings. They combined these ideas into a system called ToucanTTS.

Research paper and Code Availability

The research paper on this model is available on arXiv. The code is open source and available on GitHub.

Potential Fields of Improved IMS Toucan System

The techniques presented in this study have a wide range of applications in natural voice generation and language processing. The system’s robustness and data efficiency, including its handling of unseen phonemes, make it well suited to building real-time, voice-controlled products. It also lets users customize the voice output to specific needs or tastes, improving the user experience.

It can also be applied in language processing to help with correct text pronunciation and phonetic transcription in areas such as education, language learning apps, and automated voice responses. The system’s ability to handle homographs shows how available linguistic knowledge can improve the accuracy of natural language processing tasks across languages, resulting in more precise and context-aware text-to-speech conversion.

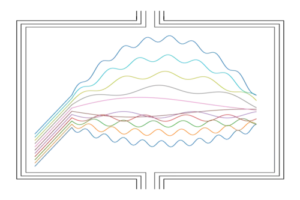

The architecture of IMS Toucan System 2023

An important goal of the system is data efficiency. To make it simpler for the model to learn, the generation process is divided into separate stages. This removes the need for a large number of parameters, which in turn reduces the amount of training data needed. The strategy is efficient, but it may compromise on quality and naturalness.

Text-to-Phoneme

They use an open-source phonemizer with eSpeak as its backend to convert text into phonemes in IPA notation. These phonemes are then expressed as articulatory vectors: each sound corresponds to a vector that encodes the configuration of the human vocal tract while the sound is produced.
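To make the idea of articulatory vectors more concrete, here is a minimal sketch. The feature inventory and the per-phoneme feature sets below are hand-written assumptions for illustration only; they are not the feature set used in IMS Toucan.

```python
# Toy articulatory feature inventory (hypothetical, far smaller than a real one).
FEATURES = ["vowel", "consonant", "voiced", "nasal", "bilabial", "alveolar", "rounded"]

# Hand-written feature sets for a few IPA phonemes (illustrative assumption).
PHONEME_FEATURES = {
    "b": {"consonant", "voiced", "bilabial"},
    "p": {"consonant", "bilabial"},
    "m": {"consonant", "voiced", "nasal", "bilabial"},
    "n": {"consonant", "voiced", "nasal", "alveolar"},
    "o": {"vowel", "voiced", "rounded"},
    "a": {"vowel", "voiced"},
}


def phoneme_to_vector(phoneme):
    """Encode one phoneme as a binary vector over FEATURES."""
    active = PHONEME_FEATURES[phoneme]
    return [1 if feature in active else 0 for feature in FEATURES]


def shared_features(first, second):
    """Count the positions where two phoneme vectors agree."""
    pairs = zip(phoneme_to_vector(first), phoneme_to_vector(second))
    return sum(x == y for x, y in pairs)


if __name__ == "__main__":
    for phoneme in ["b", "o", "m"]:
        print(phoneme, phoneme_to_vector(phoneme))
```

With such an encoding, related sounds like "b" and "m" end up with similar vectors, which is one plausible reason why a model trained on these vectors can cope with phonemes it has not seen before.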

Spectrogram-to-Alignment

To compute pitch and energy values per phoneme, the Toucan model relies on exact phoneme-to-spectrogram alignments. To obtain them, they train a speech recognition system with the CTC objective. They then refine the boundaries using an auxiliary spectrogram reconstruction model, which helps keep the phoneme boundaries well defined. Finally, they order the phoneme likelihoods by their order in the transcription and apply monotonic alignment search (MAS), which keeps the sequence order intact.
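The monotonic alignment search step can be sketched as a small dynamic program. This is a generic, simplified version written for illustration, not the code from the IMS Toucan repository; the function name and the input layout (one row of log-likelihoods per spectrogram frame, one column per phoneme in transcription order) are assumptions.

```python
def monotonic_alignment_search(log_probs):
    """Find the best monotonic frame-to-phoneme path.

    log_probs[t][n] is the log-likelihood of phoneme n (in transcription
    order) at frame t. Every frame gets exactly one phoneme, the path starts
    at the first phoneme, ends at the last one, and never moves backwards.
    """
    num_frames, num_phones = len(log_probs), len(log_probs[0])
    if num_frames < num_phones:
        raise ValueError("need at least one frame per phoneme")
    neg_inf = float("-inf")

    # score[t][n]: best total log-likelihood of a path ending at (t, n).
    score = [[neg_inf] * num_phones for _ in range(num_frames)]
    score[0][0] = log_probs[0][0]
    for t in range(1, num_frames):
        for n in range(min(t + 1, num_phones)):
            stay = score[t - 1][n]
            advance = score[t - 1][n - 1] if n > 0 else neg_inf
            score[t][n] = max(stay, advance) + log_probs[t][n]

    # Backtrack from the last frame and the last phoneme.
    path = [0] * num_frames
    n = num_phones - 1
    for t in range(num_frames - 1, -1, -1):
        path[t] = n
        if t > 0 and n > 0 and score[t - 1][n - 1] >= score[t - 1][n]:
            n -= 1

    durations = [path.count(i) for i in range(num_phones)]
    return path, durations
```

The returned durations (frames per phoneme) are the kind of values needed to average pitch and energy per phoneme later on.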

Spectrogram-to-Embedding

In Toucan they use Global Style Token embeddings, combined with modifications proposed in AdaSpeech, to capture acoustic conditions and speaking styles in a data-efficient manner. The style and condition information is represented with a large number of style tokens, which increases versatility.

Phoneme-to-Spectrogram

Their spectrogram generation is built on FastSpeech 2, enhanced with FastPitch’s phoneme-wise averaging of pitch and energy. This combination provides precise control over the generated speech. They use the Conformer architecture for both the encoder and the decoder because of its strong performance in speech-related applications.

Spectrogram-to-Wave

In Toucan they use a generative adversarial network (GAN) architecture for spectrogram-to-waveform conversion, combining the BigVGAN generator with discriminators from MelGAN, HiFiGAN, and Avocodo. Based on insights from the 2021 Blizzard Challenge winner’s study, they work with 16kHz spectrograms, which are easier to generate, and then apply super-resolution to produce 24kHz waveforms. This step yields the final audio output.

Data Preprocessing and Training of Improved IMS Toucan System

During data preparation, the audio files were split at paragraph boundaries, guided by the given alignments. They also created longer utterances by merging consecutive sentences with brief 0.22-second gaps in between, so that sentence transitions inside an utterance keep natural prosody.

Although this strategy may be useful for longer audio segments in general, it was adopted specifically with the test data in mind, without compromising the evaluation procedure. Each utterance was joined with the ones that followed it, as long as the total duration did not exceed 15 seconds. This resulted in 1640 joint utterances for AD and 7967 for NEB, containing up to 5 and 6 following sentences, respectively.
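One possible reading of this merging procedure is sketched below. Only the 0.22-second gap and the 15-second limit come from the text; the function name, the greedy extension from every starting sentence, and the input format (a list of sentence durations in seconds) are assumptions made for illustration.

```python
def build_joint_utterances(durations, gap=0.22, max_total=15.0):
    """Join each sentence with as many following sentences as fit.

    durations: sentence durations in seconds, in reading order.
    Returns (member_indices, total_duration) for every start sentence that
    can be joined with at least one follower.
    """
    joint = []
    for start in range(len(durations)):
        total = durations[start]
        members = [start]
        for following in range(start + 1, len(durations)):
            extended = total + gap + durations[following]
            if extended > max_total:
                break  # adding this sentence would exceed the limit
            total = extended
            members.append(following)
        if len(members) > 1:
            joint.append((members, round(total, 2)))
    return joint


if __name__ == "__main__":
    for members, total in build_joint_utterances([6.0, 5.0, 4.0, 3.0]):
        print(members, total)
```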

In terms of signal processing, the training data was loudness-normalized to a standard level of -30 dB using the pyloudnorm library. During inference, they adjusted their system’s output to match the volume of the human reference recordings. To improve speech quality, they used the Adobe Podcast Enhance tool on the AD dataset to remove artifacts and reverb.

For the NEB dataset, this enhancement step was skipped in order to comply with the challenge rules. They examined open-source alternatives for enhancing the NEB data but could not obtain satisfactory results, so they proceeded with the unaltered NEB data.
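The idea behind normalizing recordings to a target level of -30 dB can be sketched in a few lines. Note the assumption: this sketch measures the level as plain RMS energy, whereas pyloudnorm measures perceptual loudness according to ITU-R BS.1770, so the numbers are not interchangeable. The function names are hypothetical.

```python
import math


def rms_db(samples):
    """Return the RMS level of a signal in dB relative to full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence has no finite level
    return 10.0 * math.log10(mean_square)


def normalize_level(samples, target_db=-30.0):
    """Scale a signal so that its RMS level matches target_db."""
    current_db = rms_db(samples)
    if current_db == float("-inf"):
        return list(samples)  # nothing to scale
    gain = 10.0 ** ((target_db - current_db) / 20.0)
    return [s * gain for s in samples]


if __name__ == "__main__":
    # One second of a 440 Hz tone at 16 kHz, used as a stand-in for speech.
    tone = [0.5 * math.sin(2 * math.pi * 440 * i / 16000) for i in range(16000)]
    print(round(rms_db(normalize_level(tone)), 2))
```

Applying the same target level to every training file keeps the model from having to learn volume differences between recordings.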

Prosody is represented explicitly through the duration, pitch, and energy of each phone. An aligner was used to determine durations, and the praat-parselmouth library was used to extract pitch and energy values, which were then averaged per phone. Silent phones and symbols were marked by setting their pitch and energy values to zero. Pitch and energy values were normalized by the mean per utterance, excluding zero values, so that the resulting curves are independent of the speaker.
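The per-phone averaging and per-utterance normalization described above can be sketched as follows. This is an illustrative approximation with hypothetical function names; it assumes frame-level values and per-phone frame counts as input and does not reproduce the exact extraction settings of the system.

```python
def average_per_phone(frame_values, durations):
    """Average frame-level values (pitch or energy) over each phone.

    durations: number of frames belonging to each phone, in order.
    """
    if sum(durations) != len(frame_values):
        raise ValueError("durations must cover every frame exactly once")
    averages = []
    start = 0
    for length in durations:
        segment = frame_values[start:start + length]
        averages.append(sum(segment) / length if length > 0 else 0.0)
        start += length
    return averages


def normalize_by_utterance_mean(values):
    """Divide by the mean of the non-zero values; zeros stay zero."""
    nonzero = [v for v in values if v != 0.0]
    if not nonzero:
        return list(values)
    mean = sum(nonzero) / len(nonzero)
    return [v / mean for v in values]


if __name__ == "__main__":
    pitch_frames = [100.0, 100.0, 200.0, 200.0, 0.0, 0.0]  # last phone is silent
    per_phone = average_per_phone(pitch_frames, [2, 2, 2])
    print(normalize_by_utterance_mean(per_phone))
```

Because the values are divided by the utterance mean, a speaker with a pitch twice as high produces the same normalized curve, which is what makes the representation speaker-independent.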

To resolve homographs, they used a rule-based disambiguation technique. This involved creating a lexicon of 800 French homographs together with their phonetic transcriptions and POS tags. Where different pronunciations shared the same POS tag, they created finer-grained POS tags that take morphological information into account, which allowed them to tell the readings apart. At synthesis time, they tokenize and POS-tag the input text and then select the matching homograph pronunciation.
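A lookup of this kind can be sketched with a small dictionary keyed by word and tag. Everything here is an illustrative assumption: the three lexicon entries, the tag names (including the finer-grained "VERB:Sing" and "VERB:Plur"), the approximate IPA transcriptions, and the placeholder fallback are not taken from the authors' 800-entry lexicon, and the POS tagger itself is left out.

```python
# Tiny hypothetical homograph lexicon: (word, tag) -> approximate IPA.
HOMOGRAPH_LEXICON = {
    ("couvent", "NOUN"): "kuvɑ̃",       # "le couvent" (the convent)
    ("couvent", "VERB"): "kuv",         # "les poules couvent" (the hens brood)
    ("est", "NOUN"): "ɛst",             # "l'est" (the east)
    ("est", "VERB"): "ɛ",               # "il est" (he is)
    ("convient", "VERB:Sing"): "kɔ̃vjɛ̃",  # "il convient" (from convenir)
    ("convient", "VERB:Plur"): "kɔ̃vi",   # "ils convient" (from convier)
}


def placeholder_g2p(word):
    """Stand-in for a regular grapheme-to-phoneme converter (hypothetical)."""
    return f"<{word}>"


def resolve_homographs(tagged_tokens, default_g2p=placeholder_g2p):
    """Pick the lexicon pronunciation for homographs, else fall back."""
    phonemes = []
    for word, tag in tagged_tokens:
        entry = HOMOGRAPH_LEXICON.get((word.lower(), tag))
        phonemes.append(entry if entry is not None else default_g2p(word))
    return phonemes


if __name__ == "__main__":
    sentence = [("Les", "DET"), ("poules", "NOUN"), ("couvent", "VERB")]
    print(resolve_homographs(sentence))
```

The "convient" entries show why plain POS tags are not always enough: both readings are verbs, so only the added number information separates them.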

For silence annotation, they used specific characters as heuristic markers for pauses. To validate these markers, they used the durations from the aligner together with a voice activity detection tool, checking that each pause marker corresponded to a genuinely silent segment in the audio.

Finally, a data cleaning step was used to improve training data quality by identifying and removing samples with large losses. Such samples tend to contain mispronunciations or unwanted sounds, such as coughing or background noise, so removing them helped ensure that the training data was of high quality.
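Loss-based cleaning of this kind can be sketched as a simple outlier filter. The text only says that samples with large losses were removed; the threshold rule used here (mean plus two standard deviations), the function name, and the example file names are assumptions for illustration.

```python
import statistics


def filter_high_loss(sample_losses, num_std=2.0):
    """Split samples into kept and removed based on their training loss.

    sample_losses: dict mapping a sample id to its loss value.
    A sample is removed when its loss exceeds mean + num_std * std.
    """
    losses = list(sample_losses.values())
    threshold = statistics.mean(losses) + num_std * statistics.pstdev(losses)
    kept = {name: loss for name, loss in sample_losses.items() if loss <= threshold}
    removed = sorted(name for name in sample_losses if name not in kept)
    return kept, removed


if __name__ == "__main__":
    losses = {
        "utt_001.wav": 0.50, "utt_002.wav": 0.60, "utt_003.wav": 0.55,
        "utt_004.wav": 0.52, "utt_005.wav": 0.58, "utt_006.wav": 3.00,
    }
    kept, removed = filter_high_loss(losses)
    print("removed:", removed)
```

In practice the removed samples would be inspected or simply dropped before retraining, since an unusually high loss often points to a mismatch between audio and transcript.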

Results and Conclusion of Improved IMS Toucan System

Their performance in the hub and spoke tasks revealed several strengths and limitations. Their system ranked among the top five in the hub task, and did particularly well in homograph disambiguation, with an accuracy of 84%. This suggests that a rule-based system built on linguistic knowledge can handle the task well.

Their system has several advantages: it can handle new phonemes thanks to its articulatory features, it is highly controllable thanks to FastPitch-style pitch and energy averaging, it runs quickly because it uses a parallel Conformer as encoder and decoder, and it is robust because the decoder is non-autoregressive. On the other hand, because pitch, energy, and duration are predicted deterministically, the overall naturalness of its prosody is limited.

The two-step synthesis procedure, which uses spectrograms as intermediate representations, is data-efficient but sounds less natural than fully end-to-end systems. In future versions, they intend to address these problems by introducing stochastic predictors for the variance of the speech signal and by replacing spectrograms with neural audio codecs, while keeping the system data-efficient. Despite receiving lower naturalness ratings in the challenge, their system’s speed, data efficiency, robustness, and controllability remain valuable for TTS.

Reference

https://github.com/digitalphonetics/ims-toucan

https://arxiv.org/pdf/2310.17499v1.pdf
