Step into a universe where language gives images life, where simply words conduct an arrangement of visual supernatural events. Recent advances have released the power of text-controlled generative diffusion models, bridging the gap between the spoken word and amazing, varied pictures of unmatched quality. Yulu Gan, Sungwoo Park, Alexander Schubert, Anthony Philippakis, and Ahmed M. Alaa are the researchers involved in this research study.

In this study, they provide a novel approach named as InstructCV that uses natural language instructions to simplify the execution of different computer vision tasks. They created a universal language interface that eliminates the requirement for task-specific design decisions. This interface enables tasks to be completed by responding to natural language questions.

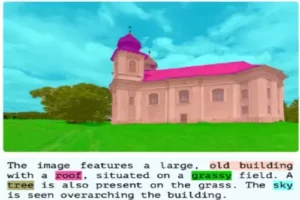

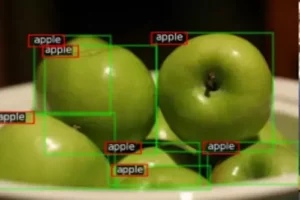

Several computer vision tasks are treated as text-to-image generation challenges in their way. The provided text acts as an instruction detailing the task in this context, and the produced image provides a visual depiction of the task’s outcome. To train their model, they assembled a collection of widely used computer vision datasets covering a wide range of tasks such as segmentation, object identification, depth estimation, and classification.

Prior models limitations

Previous work in text-to-image models has been highly successful in picture synthesis, but these models were not utilized as an integrated foundation for basic visual recognition tasks. Instead, new structures and loss functions are created for each specific task, missing out on the possibility of constructing generalized solutions across a wide range of problem domains and data types.

Methods such as Unified-IO and Pix2Seq-v2 use quick changing to create single architectures capable of executing numerous vision tasks. However, because of the sequence-based functioning of these models, inference speeds are slow, and the specific prompts may not generalize to previously unknown datasets/categories.

Prompt tuning in conjunction with sequence-to-sequence architectures has frequently been used in efforts to construct unified models for computer vision problems. To represent both input images and task-specific prompts, these models employ sequences of discrete tokens. While effective, this strategy has interpretability and generalization limits.

Existing unified models frequently include task-specific prompts based on specific training datasets. As a result, their ability to generalize to new datasets, tasks, or categories is limited. In other words, these models may struggle to adapt to different or unknown data settings.

Previous models’ task-specific prompts often involved numerical numbers, making them harder to understand and adaptable to different sorts of work. When presented with new difficulties or domains, this lack of clarity can limit the flexibility of these models.

Introduction about InstructCV

This work presents a single, adaptable computer vision model called “InstructCV.” Using natural language instructions, InstructCV can perform a variety of computer vision tasks. It understands textual instructions and generates visual outputs depending on input images. In this way, InstructCV establishes a standard language interface for various vision tasks.

They use a two-step process to train InstructCV. They begin by fine-tuning an existing conditional diffusion model termed “Stable Diffusion” utilizing instruction-related data. This data is derived from the combination of many computer vision datasets encompassing tasks such as segmentation, object detection, depth estimation, and classification. They also rewrite the instructions for each activity using a broad language model to ensure they are broad and deep in meaning. With this dataset together, they adjust the “InstructPix2Pix” text-to-image diffusion model to create an instruction-guided, multi-task vision converter.

In their trials, InstructCV outperforms competing models built for general vision tasks as well as those optimized for specific tasks. Notably, InstructCV excels at adapting to new instructions and performing well on previously unknown datasets and tasks, notably in the field of open-vocabulary task segmentation. InstructCV is a complex and flexible model that can understand and execute numerous computer vision tasks using plain language instructions, making it a potential solution in the field of computer vision.

Scope of InstructCV in future years

The study’s findings pave the way for intriguing future possibilities in the fields of computer vision and natural language processing. The InstructCV model’s ability to generalize across diverse tasks, new user instructions, and previously unseen data suggests potential applications in fields such as autonomous robotics, medical imaging, and augmented reality, where intuitive, language-driven task execution can benefit dynamic interaction between humans and machines. More studies might look into expanding InstructCV, improving its speed, and applying it to real-world applications, bringing us closer to continuous human-computer interaction and communication in visual activities.

Research paper, Demo, and Code accessibility

The research paper and more in-depth details about this study are available on Arxiv. The demo of this model for real-time use is also available on the hugging face. The implementation code of this study is also freely available for everyone to use on GitHub. These resources are open-source and freely available.

Potential application of InstructCV

The InstructCV concept has the potential to transform a variety of industries, including healthcare and medical imaging. It could help doctors and radiologists diagnose problems more precisely and efficiently in the medical field. By providing natural language instructions for specific regions of interest in medical photographs, healthcare providers can speed up the diagnosis process, allowing them to more correctly identify anomalies, tumors, or lesions. Furthermore, InstructCV could provide real-time visual instruction to doctors during surgical procedures, easing complex surgeries and increasing patient outcomes.

In the field of education and e-learning, InstructCV has the ability to generate dynamic and engaging learning experiences. Students can interact with educational resources using language and obtain extensive explanations and visualizations of complex ideas. By producing graphics and diagrams from textual lesson plans, this technology can enable instructors to create interactive and visually appealing information. It has the ability to make education more engaging and accessible by bridging the gap between students and educators through intuitive, language-driven access to visual resources.

Framework of InstructCV

The InstructCV framework is divided into two steps: (a) establishing an adaptable dataset that comprises text instructions and visual task data, and (b) enhancing an existing computer model using the dataset established in step (a). These processes are explained in full below.

Step 1: Develop a Multi-Modal and Multi-Task Instruction-Tuning Dataset

They collect data from four well-known computer vision datasets MS-COCO, ADE20K, Oxford-III-Pets, and NYUv2 which cover four different vision tasks: semantic segmentation, object detection, monocular depth estimation, and classification in this stage. This data comprises a multi-task dataset, which includes input images, task outputs, and task IDs. The task IDs help in the differentiation of the vision task.

They turn this dataset into a multi-modal instruction-tuning dataset, designated as DI, to make it fit for instruction tuning. Each training data point in this new dataset is paired with a task identifier stated as a natural language instruction, and the label is visually provided in a format suitable for the individual task.

LLM-Based Instruction Generation: For each vision task, they begin by selecting prompt templates, such as “Segment the %category%” for semantic segmentation. The category information is then entered into the template, “Segment the cat.” The instruction-tuning dataset is divided into two versions: one that utilizes fixed prompts (FP), which are typical task-specific prompts, and another that uses a language model to rewrite the messages, resulting in a more expanded set of instructions.

Visual Encoding of Task Outputs: For all tasks, they convert the target labels into a visual format that suits the input image space. Using Pix2Pix architectures, this technique allows all tasks to be treated in a unified text-to-image generating framework.

Step 2: Tuning a Latent Diffusion Model Using Instructions

In this stage, they use the instruction-tuning dataset to train a conditional diffusion model. This approach applies the vision task indicated in the instruction to the input image, resulting in a visually encoded task output. The training approach transforms the model from a generative picture synthesizer to a multi-task vision learner driven by language.

Classifier-Free Guidance: The model utilizes “classifier-free” guidance to improve the relationship between the generated outputs and both image and text. This method combines unconditional and conditional noise variables and modifies the probability distribution to focus on data where a hidden classifier provides high scores for conditioning.

In short, the InstructCV methodology entails constructing a versatile dataset with verbal instructions and visual data and then using this dataset to fine-tune a diffusion model. The goal is for the model to efficiently perform diverse computer vision tasks by following plain language directions. This strategy enables a single approach in the field of computer vision.

Experiments and their results for Instruct CV

InstructCV was tested on four different computer vision tasks in their experiments: semantic segmentation, object detection, monocular depth estimation, and image classification. For each challenge, they employed popular datasets: ADE20k for semantic segmentation, MS-COCO for object detection, NYUv2 for depth estimation, and Oxford-IIIT Pet for picture classification.

Semantic Segmentation: From the ADE20k dataset, they used 20,000 images for training, 2,000 for validation, and 3,000 for testing. For the training/test split, they used a typical approach. They averaged the outputs throughout inference to produce segmentation masks and evaluated the accuracy using Mean Intersection over Union (mIoU).

Detection of objects: The MS-COCO dataset included 118,000 training photos and 5,000 validation images labeled in 80 different categories. They divided the data into categories using a specified technique and processed the output photos to calculate the Mean Average Precision (mAP) for each.

Depth estimation was performed using the NYUv2 dataset, which included 24,231 training image-depth pairs and 654 testing images. During testing, they provided the Root Mean Square Error (RMSE), absolute mean relative error (A.Rel), and the percentage of inner pixels that exceeded a particular threshold.

Image Classification: Image classification was carried out by determining whether or not a particular category was clear in the supplied image. Following a specific template prompt, they evaluated InstructCV correctness by analyzing whether the output image had the proper category’s color block. This was done for binary classification on the Oxford-IIIT Pet dataset, and they calculated a classification score based on the Euclidean distance between the pixel-wise colors of the output image and the target color block stated in the task instruction.

Their training setup included 8 NVIDIA A100 GPUs running for 10 hours with images of 256×256 resolution. With a batch size of 128, researchers applied data augmentation techniques such as random horizontal flipping and cropping. They used a learning rate of 10-4 and no warm-up step to initialize the model with EMA weights from the Stable Diffusion checkpoint. The models trained on DI_FP and DI_RP were dubbed InstructCV-FP and InstructCV-RP, respectively.

Conclusion

In this paper, they present InstructCV, a single language-based system for dealing with a variety of computer vision problems. InstructCV eliminates the requirement for task-specific setups and instead executes tasks using natural language instructions. It approaches several computer vision challenges as problems of converting text to graphics. The text in this case provides directions, while the image that results represents the task’s output.

Following the InstructPix2Pix architecture they created a multitask and multi-modal dataset to train a pre-trained text-to-image diffusion model, transforming it from a generative model to an instruction-guided multi-task vision learner. Their model demonstrates its capacity to perform well even with new data, various categories, and user-generated instructions by employing meaningful language instructions to direct the learning process.

References

https://arxiv.org/pdf/2310.00390v1.pdf

https://github.com/AlaaLab/InstructCV

Similar Posts

-

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

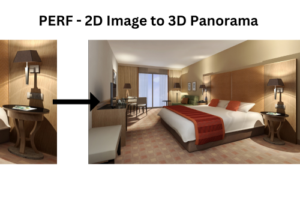

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas