LAMP is a new approach to text-to-video generation. The research was carried out by Ruiqi Wu, Liangyu Chen, Tong Yang, Chunle Guo, Chongyi Li, and Xiangyu Zhang from VCIP, CS, Nankai University, and MEGVII Technology.

Recent advances in text-to-image generation have captured the public’s interest. Prior text-to-video models, however, typically require a huge dataset of text-video pairs and extensive training resources. As a result, it is hard to balance creative freedom against the resources that video generation demands.

In this study, the researchers present LAMP (Learn A Motion Pattern), a few-shot tuning framework that lets a text-to-image diffusion model learn a motion pattern from a small set of videos and then use it to generate new videos. In effect, LAMP turns a text-to-image model into a video generator. It produces high-quality videos while preserving creative freedom.

LAMP works well across a variety of motions. The generated videos are temporally consistent and stay close to the prompts. LAMP has two main advantages. First, thanks to the proposed first-frame-conditioned training technique, the powerful SD-XL model can be used to generate the first frame, which helps produce detailed frames. Second, because their tuning method preserves the strong semantic generalization of the pretrained diffusion model, LAMP can transfer a learned motion to unseen content, for example, applying a smiling motion to a comic style that never appears in the training videos.

Related Studies and Their Shortcomings

Text-to-Image Diffusion Models: Diffusion models have outperformed other generative techniques such as GANs, VAEs, and flow-based approaches. They have become popular thanks to their stable training and remarkable results. GLIDE, for example, conditions on text and uses classifier-free guidance to improve image quality. Rombach et al. reduced the computational cost with latent diffusion models (LDMs), which compress images into a lower-dimensional latent space. More recently, SD-XL has established itself by producing high-resolution images. In this work, the authors use SD-XL to generate the initial frame and adapt SD-v1.4 to predict the subsequent frames.

Text-to-Video Diffusion Models: Diffusion-based models, which have proven successful in text-to-image generation, have also been applied effectively to text-to-video generation.

Existing approaches fall into two broad categories: open-domain T2V generation and template-based methods.

Open-domain T2V methods include Imagen Video and Make-A-Video. However, because of the high computational cost of working at the pixel level, these techniques are limited in video length and resolution. VideoComposer introduces additional conditions such as sketches and motion vectors, and AnimateDiff lets T2I models generate videos in a consistent style. While these methods produce excellent results, they typically require training on large-scale datasets, which most researchers cannot afford.

Template-based approaches, such as Dreamix and GEN-1, perform video-to-video translation guided by user prompts. Although they offer more creative control, their training costs can be high. Tune-A-Video offers a one-shot option that tunes a T2I model to reproduce a single source video. FateZero provides a training-free technique that modifies attention layers and injects cross-attention maps. Rerender-A-Video and TokenFlow improve video consistency by combining priors with conditional guidance. LAMP’s few-shot T2V approach, by contrast, aims for greater freedom in video generation rather than closely following the motion of a particular source video.

Fundamentals of LAMP

Projects such as Text2Video-Zero (T2V-Zero) adapt text-to-image diffusion models for video generation without any training. However, transferring knowledge from text-to-image to text-to-video in a zero-shot manner remains difficult, which results in videos whose frames look alike but lack motion.

To balance training requirements against freedom of expression while still learning motion, the authors propose a new setting: few-shot text-to-video generation. The goal is to tune a pretrained text-to-image diffusion model so that it recognizes a motion pattern shared by a small set of videos.

This setting poses two challenges. First, because there is so little data, the model risks producing videos that closely resemble the training set, which reduces creativity. Second, the basic operators of text-to-image diffusion models are essentially spatial in nature and must be modified to capture temporal information across frames.

LAMP handles the first issue with a first-frame-conditioned pipeline. This approach divides the text-to-video task into two sub-tasks: generating the first frame with a pretrained text-to-image model and predicting the subsequent frames with the authors’ tuned video diffusion model. Conditioning on the first frame lets the model concentrate on the motion pattern. To address the second issue, the authors designed temporal-spatial motion learning layers that capture temporal and spatial information at the same time.
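The two-stage decomposition described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ code: `generate_video`, `first_frame_model`, and `next_frames_model` are hypothetical names. In practice, the two callables would wrap a pretrained T2I model (such as SD-XL) and LAMP’s tuned video diffusion model.

```python
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")

def generate_video(
    prompt: str,
    num_frames: int,
    first_frame_model: Callable[[str], Frame],
    next_frames_model: Callable[[Frame, str, int], List[Frame]],
) -> List[Frame]:
    """First-frame-conditioned generation (sketch).

    Stage 1: a pretrained text-to-image model produces the first frame.
    Stage 2: a tuned video model predicts the remaining frames,
    conditioned on that first frame and the same prompt.
    """
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")
    first = first_frame_model(prompt)
    rest = next_frames_model(first, prompt, num_frames - 1)
    if len(rest) != num_frames - 1:
        raise ValueError("next_frames_model returned the wrong number of frames")
    return [first] + rest

# Toy stand-ins that return strings instead of images, just to show the data flow.
video = generate_video(
    "a horse running",
    4,
    first_frame_model=lambda p: f"frame0({p})",
    next_frames_model=lambda f, p, n: [f"frame{i + 1}<-{f}" for i in range(n)],
)
```

Content (appearance, style) is decided entirely in stage 1, so stage 2 only has to supply motion.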

The authors tested LAMP on many motion patterns and obtained good results. Using minimal data and a single GPU, LAMP generates videos that follow the motion pattern of the training set and generalizes effectively to various styles and objects.

Future work could improve the model’s ability to handle complex text prompts, build a large training dataset covering numerous motion patterns, and broaden the model’s applicability. Promising future directions also include real-image animation and video editing.

Access and Availability of Research Papers and Code

The research paper on this model is available on arXiv, and the code is freely accessible on GitHub. These resources are openly available, so anyone can use them according to their needs.

Potential Fields of LAMP

This model could help educational institutions make better use of their library resources and improve access to study materials. Governments could use it to help make research findings accessible to all, and it can also serve as a resource for the publishing industry.

Thanks to the training of the proposed first-frame-conditioned pipeline, LAMP includes a network that predicts subsequent frames from a given first frame, which allows real-world photos to be animated. In the main experiments, the first frames were created by T2I models, but because the network learns motion patterns conditioned on whatever first frame it receives, the same ability carries over to real-world photos.

When the training set contains only a single video clip, their approach can learn only a single motion rather than a motion pattern. In this case, the method effectively becomes a video editing algorithm. The training procedure remains the same as in the few-shot setting.

Components and Functionality of LAMP

The authors begin with some fundamental diffusion-model concepts. A diffusion model defines a forward process that gradually adds noise to data and learns to reverse it, generating samples by removing noise step by step. Running this process directly on high-resolution images is computationally demanding, so latent diffusion models (LDMs) were developed for text-to-image generation. LDMs use an autoencoder to operate in a lower-dimensional latent space, reducing redundancy and computational cost. LAMP builds on LDMs to generate videos.
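For reference, here is a minimal NumPy sketch of the standard DDPM-style forward (noising) process, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 - ᾱ_t)·ε. The linear β schedule (1e-4 to 0.02 over 1000 steps) is the one from the original DDPM paper and serves only as an illustrative assumption, because the article does not state which schedule LAMP uses. The latent shape assumes a Stable Diffusion-style autoencoder with 4 latent channels and 8× spatial downsampling of a 320×512 frame.

```python
import numpy as np

def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Return alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(alpha_bar_t) x_0, (1 - alpha_bar_t) I)."""
    eps = rng.standard_normal(x0.shape)
    a = alpha_bars[t]
    x_t = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps
    return x_t, eps  # eps is the regression target for the denoising network

rng = np.random.default_rng(0)
alpha_bars = linear_alpha_bars()
latent = rng.standard_normal((4, 40, 64))  # 4 channels, a 320x512 frame downsampled by 8
x_t, eps = add_noise(latent, t=999, alpha_bars=alpha_bars, rng=rng)
# At t = 999, alpha_bar is tiny, so x_t is almost pure noise.
```

Generation runs this process in reverse: a network is trained to predict ε from x_t, and sampling removes the predicted noise step by step.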

Few-shot-based T2V Generation Setting: Existing T2V algorithms need a huge quantity of training data, which limits their flexibility. The authors instead propose few-shot T2V generation, which uses a small set of videos together with a motion-related prompt. By tuning a text-to-image (T2I) model, they aim to generate videos that share the motion pattern of the training videos while ignoring their specific content. Because the dataset is so small, this approach is inexpensive.

LAMP learns a motion pattern from a tiny video set and can then generate new videos that follow it. This strategy strikes a compromise between training resources and generation freedom. LAMP decouples the content and motion of a video by splitting text-to-video generation into first-frame generation and subsequent-frame prediction.

During training, noise is added and the loss is computed for all frames except the first. Only the parameters of the newly added layers and the query linear layers of the self-attention blocks are tuned. During inference, a T2I model generates the first frame, and the tuned model, guided by the user prompt, denoises only the latents of the subsequent frames.
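The training rule above (clean first frame, loss on every other frame, only a few parameter groups tuned) can be sketched with NumPy as follows. This is an illustrative assumption, not LAMP’s implementation: the function names are hypothetical. The parameter-name filter assumes diffusers-style UNet naming, where `attn1` is the self-attention block and `to_q` is its query projection, plus a hypothetical `motion_` prefix for the newly added layers.

```python
import numpy as np

def noise_all_but_first(latents, alpha_bar_t, rng):
    """Noise frames 1..F-1 of a (F, C, H, W) latent video; frame 0 stays clean.

    Returns the model input and the noise target. eps[0] is drawn but never
    used, because the first frame acts as the condition and has no loss.
    """
    eps = rng.standard_normal(latents.shape)
    noisy = latents.copy()
    noisy[1:] = np.sqrt(alpha_bar_t) * latents[1:] + np.sqrt(1.0 - alpha_bar_t) * eps[1:]
    return noisy, eps

def masked_eps_loss(eps_pred, eps):
    """Mean squared error on the predicted noise, skipping the first frame."""
    return float(np.mean((eps_pred[1:] - eps[1:]) ** 2))

def is_trainable(param_name):
    """Hypothetical filter: new motion layers (assumed 'motion_' prefix) and the
    query projections of self-attention blocks (diffusers-style 'attn1.to_q')."""
    return "motion_" in param_name or "attn1.to_q" in param_name

rng = np.random.default_rng(0)
latents = rng.standard_normal((16, 4, 40, 64))  # 16-frame clip in latent space
noisy, eps = noise_all_but_first(latents, alpha_bar_t=0.5, rng=rng)

names = [
    "down_blocks.0.attentions.0.transformer_blocks.0.attn1.to_q.weight",  # self-attn query
    "down_blocks.0.attentions.0.transformer_blocks.0.attn1.to_k.weight",  # self-attn key
    "down_blocks.0.attentions.0.transformer_blocks.0.attn2.to_q.weight",  # cross-attn query
    "down_blocks.0.motion_layers.0.temporal_conv.weight",                 # hypothetical new layer
]
trainable = [n for n in names if is_trainable(n)]
```

Freezing everything else keeps the pretrained T2I weights, and with them the model’s semantic knowledge, largely intact.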

First-Frame-Conditioned Pipeline: In few-shot T2V, the model risks reproducing the content of the limited dataset, which can restrict creative freedom. LAMP therefore uses the first frame of a video as a condition so that the model can focus on motion. Decoupling motion from content in this way makes the T2V task easier to handle and improves both prompt alignment and generation quality. The pipeline is also adaptable to real-world applications such as image animation and video editing.
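At inference time, one simple way to keep the T2I-generated first frame fixed while denoising only the following frames is to re-impose its latent after every step. The sketch below assumes that approach. `denoise_step` is a hypothetical stand-in for one reverse-diffusion step of the tuned model (here a toy function that just shrinks the values), and the article does not specify exactly how LAMP injects the condition.

```python
import numpy as np

def sample_with_fixed_first_frame(first_latent, num_frames, denoise_step, num_steps, rng):
    """first_latent: (C, H, W) latent of the T2I-generated first frame.
    denoise_step(x, t): hypothetical function applying one reverse step to (F, C, H, W)."""
    x = rng.standard_normal((num_frames,) + first_latent.shape)
    x[0] = first_latent  # the condition starts clean
    for t in reversed(range(num_steps)):
        x = denoise_step(x, t)
        x[0] = first_latent  # re-impose the condition so only frames 1.. are generated
    return x

def toy_denoise_step(x, t):
    """Toy stand-in for a real denoiser: halves every value."""
    return 0.5 * x

rng = np.random.default_rng(0)
first = rng.standard_normal((4, 8, 8))
out = sample_with_fixed_first_frame(first, 16, toy_denoise_step, num_steps=10, rng=rng)
```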

Adapt T2I Models to Video: To handle temporal features, the authors add temporal-spatial motion learning layers that use 1D and 2D convolutions to capture temporal and spatial information. With these layers, the adapted T2I model also operates along the temporal dimension and builds associations between frames while generating a video. They also modify the attention layers to improve frame consistency.
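Below is a rough NumPy sketch of a temporal-spatial layer. A depthwise 1D convolution mixes each feature across neighboring frames, and a depthwise 2D convolution mixes it across neighboring pixels. The depthwise (per-channel) kernels and the residual sum that fuses the two branches are simplifying assumptions of this sketch. LAMP’s actual layers may be structured and fused differently.

```python
import numpy as np

def temporal_conv1d(x, kernel):
    """Depthwise 1D convolution along the frame axis (zero 'same' padding).
    x: (F, C, H, W) video features; kernel: (C, K) with odd K."""
    num_frames = x.shape[0]
    k_size = kernel.shape[1]
    pad = k_size // 2
    xp = np.pad(x, ((pad, pad), (0, 0), (0, 0), (0, 0)))
    out = np.zeros_like(x, dtype=float)
    for k in range(k_size):
        # Each output frame mixes K neighboring frames, channel by channel.
        out += kernel[:, k][:, None, None] * xp[k:k + num_frames]
    return out

def spatial_conv2d(x, kernel):
    """Depthwise 2D convolution over (H, W), applied to every frame independently.
    x: (F, C, H, W); kernel: (C, K, K) with odd K."""
    height, width = x.shape[2], x.shape[3]
    k_size = kernel.shape[1]
    pad = k_size // 2
    xp = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)))
    out = np.zeros_like(x, dtype=float)
    for i in range(k_size):
        for j in range(k_size):
            out += kernel[:, i, j][:, None, None] * xp[:, :, i:i + height, j:j + width]
    return out

def motion_layer(x, t_kernel, s_kernel):
    """Hypothetical fusion: residual sum of the temporal and spatial branches."""
    return x + temporal_conv1d(x, t_kernel) + spatial_conv2d(x, s_kernel)

rng = np.random.default_rng(0)
feats = rng.standard_normal((16, 4, 40, 64))
out = motion_layer(feats, 0.1 * rng.standard_normal((4, 3)), 0.1 * rng.standard_normal((4, 3, 3)))
```

The residual form means that zero-initialized kernels leave the pretrained features unchanged, a common way to insert new layers into a pretrained network.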

Shared-noise Sampling During Inference: To improve video quality, the authors use shared-noise sampling during inference. They sample a shared noise map and use it to modify the original per-frame noise, which makes the noise more consistent across frames and stabilizes the generation process. AdaIN and histogram matching are also applied to further enhance the results.
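Shared-noise sampling and AdaIN can be illustrated with the sketch below. The square-root mixing weights are an assumption chosen so that each frame’s noise stays standard normal, since the article does not give LAMP’s exact formula, and `alpha` is a hypothetical strength parameter. The `adain` function implements the standard adaptive instance normalization formula, which rescales each channel of one tensor to the mean and standard deviation of another. Using it to align subsequent frame latents with the first frame is likewise an assumption. Histogram matching is omitted.

```python
import numpy as np

def shared_noise(num_frames, shape, alpha, rng):
    """Mix one noise map shared by all frames with independent per-frame noise.
    Variance is alpha + (1 - alpha) = 1, so each frame's noise stays ~ N(0, I)."""
    shared = rng.standard_normal(shape)                   # (C, H, W), common to all frames
    per_frame = rng.standard_normal((num_frames,) + shape)
    return np.sqrt(alpha) * shared + np.sqrt(1.0 - alpha) * per_frame

def adain(content, style, eps=1e-5):
    """Adaptive instance normalization on (C, H, W) tensors:
    std(style) * (content - mean(content)) / std(content) + mean(style), per channel."""
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = content.std(axis=(1, 2), keepdims=True)
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = style.std(axis=(1, 2), keepdims=True)
    return s_std * (content - c_mean) / (c_std + eps) + s_mean

rng = np.random.default_rng(0)
noise = shared_noise(16, (4, 40, 64), alpha=0.2, rng=rng)  # initial latents for 16 frames
frames = rng.standard_normal((16, 4, 40, 64))               # stand-in for denoised latents
aligned = np.stack([frames[0]] + [adain(f, frames[0]) for f in frames[1:]])
```

At `alpha = 1`, every frame starts from identical noise. At `alpha = 0`, the frames start from independent noise.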

Evaluating the Experimental Results of LAMP

The authors generate 16-frame videos at 320×512 resolution. To balance cost and quality, they use SD-XL to generate the first frame and SD-v1.4 to predict the subsequent frames. The training dataset consists of self-collected videos from which 16-frame clips are randomly sampled, and all frames are resized to 320×512 pixels. With a learning rate of 3.0 × 10⁻⁵, they fine-tune the parameters of the new layers and the query linear layers in the self-attention blocks. All experiments run on a single A100 GPU, requiring about 15 GB of VRAM for training and 6 GB for inference.
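The reported settings can be collected into a small configuration object. In the sketch below, the frame count, resolution, and learning rate come from the article, and 320×512 is read as height × width. The 8× VAE downsampling and 4 latent channels reflect Stable Diffusion v1.x’s autoencoder. `sample_clip_indices`, which draws 16 consecutive frames at a random start, is a hypothetical sampling scheme, because the article does not say how clips are sampled.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class LampTrainConfig:
    """Hypothetical config; values marked 'article' are the reported settings."""
    num_frames: int = 16           # article
    height: int = 320              # article (assumed height x width order)
    width: int = 512               # article
    learning_rate: float = 3.0e-5  # article
    vae_downsample: int = 8        # Stable Diffusion v1.x autoencoder
    latent_channels: int = 4       # Stable Diffusion v1.x autoencoder

    def latent_shape(self):
        """Shape of one training clip after encoding: (F, C, H/8, W/8)."""
        return (self.num_frames, self.latent_channels,
                self.height // self.vae_downsample, self.width // self.vae_downsample)

def sample_clip_indices(video_length, num_frames, rng):
    """Pick num_frames consecutive frame indices at a random start (assumed scheme)."""
    if video_length < num_frames:
        raise ValueError("video is shorter than the clip length")
    start = rng.randint(0, video_length - num_frames)  # randint is inclusive on both ends
    return list(range(start, start + num_frames))

cfg = LampTrainConfig()
shape = cfg.latent_shape()
indices = sample_clip_indices(100, cfg.num_frames, random.Random(0))
```

With these settings, each training clip becomes a latent tensor of shape (16, 4, 40, 64), which holds 16 × 4 × 40 × 64 = 163,840 values.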

They trained LAMP on eight different motion patterns, yielding 48 evaluation videos. LAMP is compared with three publicly available methods: AnimateDiff, Tune-A-Video, and Text2Video-Zero.

They evaluate the model on textual alignment, frame consistency, and generation performance. In a user study, LAMP achieves the highest satisfaction rate among participants. The qualitative results highlight the strengths and weaknesses of the compared methods, and LAMP stands out by producing visually appealing, prompt-relevant videos with suitable motion patterns.
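The article does not spell out how these metrics are computed. A common choice in text-to-video evaluation is to embed each frame and the prompt with a model such as CLIP. Textual alignment is then reported as the mean frame-prompt cosine similarity, and frame consistency as the mean cosine similarity between consecutive frames. The sketch below assumes precomputed embeddings, and the function names are hypothetical.

```python
import numpy as np

def _normalize(v):
    """Scale vectors to unit length along the last axis."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def frame_consistency(frame_emb):
    """Mean cosine similarity between consecutive frame embeddings. frame_emb: (F, D)."""
    e = _normalize(frame_emb)
    return float(np.mean(np.sum(e[1:] * e[:-1], axis=-1)))

def textual_alignment(frame_emb, text_emb):
    """Mean cosine similarity between each frame embedding (F, D) and the prompt embedding (D,)."""
    e = _normalize(frame_emb)
    t = _normalize(text_emb)
    return float(np.mean(e @ t))

rng = np.random.default_rng(0)
frame_emb = rng.standard_normal((16, 512))  # stand-in for per-frame image embeddings
text_emb = rng.standard_normal(512)         # stand-in for the prompt embedding
scores = (textual_alignment(frame_emb, text_emb), frame_consistency(frame_emb))
```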

Final Remarks about the LAMP Research Study

This work presents a novel setting for T2V generation called few-shot tuning, which learns a common motion pattern from a small video collection to strike a balance between generation freedom and training burden. The proposed LAMP serves as the foundation for this new setting. It splits the T2V task into first-frame generation with a T2I model and prediction of the subsequent frames from that first frame.

This prevents overfitting to the dataset’s content during few-shot tuning while retaining the benefits of text-to-image generation, and it improves T2V generation performance. Extensive experiments show that the strategy is effective and generalizes well.

References

https://arxiv.org/pdf/2310.10769.pdf

Similar Posts

-

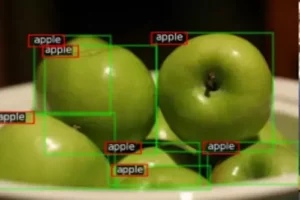

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

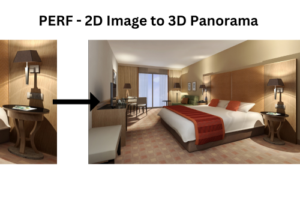

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas