Latent Consistency Models (LCMs) bring fast sampling to high-resolution image generation: they produce high-quality images in a handful of inference steps instead of dozens. Rather than refining an image through many tiny denoising steps, an LCM jumps almost directly to the finished result, much like an artist who completes a painting in a few confident strokes. The work comes from researchers at the Institute for Interdisciplinary Information Sciences at Tsinghua University.

Latent Diffusion Models (LDMs) are excellent at producing high-resolution images, but their iterative sampling procedure makes generation slow. Inspired by Consistency Models, the researchers developed Latent Consistency Models (LCMs), which can be distilled from any pre-trained LDM, including Stable Diffusion, and need only a few inference steps.

Here’s the concept: instead of building an image through numerous small denoising steps, an LCM directly predicts the solution of the underlying probability flow ODE in latent space, so it can jump to a clean result in one or a few steps. As a result, image generation is fast and of excellent quality. Even better, training is cheap: a high-quality 768×768 LCM that samples in 2 to 4 steps takes only about 32 A100 GPU hours to train.
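The contrast between many small steps and a single jump can be illustrated with a toy problem. The sketch below is not the LCM network: it assumes a one-dimensional "dataset" containing the single value `MU`, for which the probability flow ODE and its consistency function have closed forms. The constants `EPS`, `T_MAX`, and `MU` and all function names are illustrative assumptions.

```python
import random

EPS = 0.002   # smallest time on the trajectory (assumed value)
T_MAX = 80.0  # largest time, where a sample is almost pure noise (assumed value)
MU = 3.0      # the single "clean" data point of this toy problem


def consistency_fn(x, t):
    """Exact consistency function for the toy ODE dx/dt = (x - MU) / t.

    It maps any point (x, t) on a trajectory to that trajectory's value at EPS.
    """
    return MU + (x - MU) * EPS / t


def euler_sample(x, num_steps):
    """Follow the same ODE from T_MAX down to EPS in many small Euler steps."""
    times = [T_MAX + (EPS - T_MAX) * i / num_steps for i in range(num_steps + 1)]
    for t_cur, t_next in zip(times, times[1:]):
        x = x + (t_next - t_cur) * (x - MU) / t_cur
    return x


def multistep_consistency_sample(x, times, rng):
    """Few-step sampling: jump to a clean estimate, add noise back, jump again."""
    x0 = consistency_fn(x, times[0])
    for t in times[1:]:
        noisy = x0 + (t * t - EPS * EPS) ** 0.5 * rng.gauss(0.0, 1.0)
        x0 = consistency_fn(noisy, t)
    return x0


rng = random.Random(0)
x_T = MU + T_MAX * rng.gauss(0.0, 1.0)            # start from heavy noise

one_step = consistency_fn(x_T, T_MAX)             # one function evaluation
many_steps = euler_sample(x_T, num_steps=50)      # fifty function evaluations
few_steps = multistep_consistency_sample(x_T, [T_MAX, 20.0, 5.0, 1.0], rng)
```

In this toy setting the single jump and the 50-step solver land on the same point, which is the property a trained consistency model tries to approximate. The few-step variant mirrors how a 2 to 4 step sampler trades a little extra compute for quality in a real model.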

They then go a step further and introduce Latent Consistency Fine-tuning (LCF), a method for fine-tuning LCMs on custom image datasets. Evaluated on the LAION-5B-Aesthetics dataset, LCMs achieve state-of-the-art text-to-image generation with few-step inference.

Related work on the Latent Consistency Model

Diffusion Models have already had a significant impact on image generation. They are trained to remove noise from noise-corrupted data, which lets them estimate the data distribution. At inference time, they start from random noise and generate a sample by gradually removing that noise through a reverse process.

Compared with other techniques such as VAEs and GANs, Diffusion Models offer stable training and better likelihood estimation. Their main drawback is slow sampling, since generating one image takes many sequential denoising steps.

Researchers have devised many strategies to speed things up. Some need no extra training, such as improved ODE solvers; others rely on training, such as distilling a slow model into a faster one. New model families designed for fast sampling have also appeared.

Now for latent diffusion models (LDMs), which excel at high-resolution text-to-image generation. Models such as Stable Diffusion (SD) run the diffusion process in the compressed latent space of a pre-trained autoencoder rather than on raw pixels, which makes them considerably more efficient.

Consistency models (CMs) are a newer family of generative models built for fast sampling. They learn a mapping that takes any point on the denoising trajectory directly to the finished image, so generation can happen in a single step. They can be trained on their own or distilled from pre-trained diffusion models.
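One requirement of such a mapping is a boundary condition: at the smallest time step the input is already clean, so the model must return it unchanged. A minimal sketch of a skip-connection parameterization of the kind described in the original Consistency Models paper is shown below. The constants `SIGMA_DATA` and `EPS` are assumed values, and `placeholder_network` is a hypothetical stand-in for a trained neural network.

```python
import math

SIGMA_DATA = 0.5  # assumed standard deviation of the data
EPS = 0.002       # assumed smallest time step


def c_skip(t):
    """Weight on the raw input; equals 1 exactly at t = EPS."""
    return SIGMA_DATA ** 2 / ((t - EPS) ** 2 + SIGMA_DATA ** 2)


def c_out(t):
    """Weight on the network output; equals 0 exactly at t = EPS."""
    return SIGMA_DATA * (t - EPS) / math.sqrt(SIGMA_DATA ** 2 + t ** 2)


def consistency_model(x, t, network):
    """Blend input and network output so that f(x, EPS) = x by construction."""
    return c_skip(t) * x + c_out(t) * network(x, t)


def placeholder_network(x, t):
    """Hypothetical stand-in for a trained network; any function would do here."""
    return math.tanh(x) - 0.1 * t


clean_input = 1.7
at_boundary = consistency_model(clean_input, EPS, placeholder_network)
far_from_boundary = consistency_model(clean_input, 10.0, placeholder_network)
```

Because the boundary condition is built into the formula, it holds no matter what the network outputs. At larger times the input weight shrinks and the network output dominates.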

Introduction to the Latent Consistency Model

Diffusion models produce excellent results across a variety of domains. Latent diffusion models (LDMs), such as Stable Diffusion, are a particularly interesting kind that can create highly detailed images from text descriptions. They do this by gradually denoising the image, one step at a time, like a painter refining a canvas.

There is a snag, though: that step-by-step process is slow, much like an artist who takes an eternity to complete a painting. Researchers speed it up with techniques such as distillation or better ODE solvers, which amount to finding a shortcut to the finished painting.

Then there are consistency models, which are relatively new. They are the speed painters: they can generate an image in a single step, so you don’t have to wait for a long sampling loop. The drawback is that they have been limited to generating images in pixel space, which makes high-resolution generation impractical.

This is where things become interesting. The researchers devised Latent Consistency Models (LCMs), which combine the strengths of LDMs and consistency models to create high-resolution images in very few steps. LCMs can also be fine-tuned on custom datasets, so the output matches the style you want.

Consistency Models (CMs) have shown promising results in image generation, particularly on the ImageNet and LSUN datasets. But so far they have largely been applied to smaller images.

Latent Consistency Models (LCMs) follow the same idea as CMs but are designed for larger and more demanding tasks, such as generating high-resolution images from text. The researchers chose Stable Diffusion (SD), a strong pre-trained model, as the foundation for LCMs. The goal is to make generation as fast as possible, even in just a few steps, without sacrificing quality.

Future Scope of Latent Consistency Models

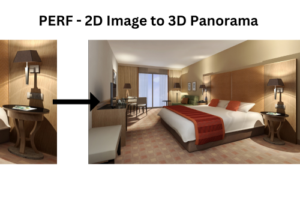

The results of this study pave the way for several exciting directions in generative modeling and text-to-image synthesis. One is further optimizing Latent Consistency Models (LCMs) for speed and efficiency, which could make high-resolution image creation even more accessible. The scalability of LCMs could also be studied in order to push the limits of image resolution. Extending the approach to other modalities, such as text-to-video or text-to-3D synthesis, opens up further possibilities, particularly in entertainment and virtual reality.

Applying LCMs to fields such as medical imaging and scientific data synthesis is another possibility. So is the development of more interpretable models to support ethical use, content moderation, and explainable AI. Reducing the amount of data LCMs depend on would make them more adaptable and more practical for real-world tasks such as automatic content generation and dynamic scene generation.

Research paper and Code availability

The research paper on this model is available on arXiv, and the implementation code for LCM is available on GitHub. Both resources are open source and free for public use.

The Potential of Latent Consistency Models in Different Fields

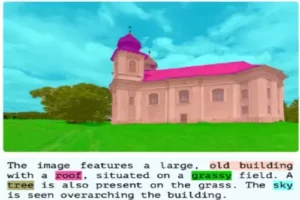

The results reported in this study point to a wide range of potential applications. One is content development in the creative industries. Artists and designers could use Latent Consistency Models (LCMs) to quickly generate high-resolution images from textual descriptions, speeding up the creation of visual content for art, advertising, and entertainment. This could enable more automated and personalized content creation, letting creators realize their ideas efficiently while catering to specific audience preferences.

In e-commerce and fashion, LCMs could generate high-quality product images for online catalogs. This could simplify introducing new products to customers and give them a more realistic and personalized shopping experience. In architecture and interior design, LCMs might generate visualizations from architectural blueprints and descriptions, helping architects and designers present their concepts more effectively.

Educational applications look promising as well. LCMs could be used to develop interactive instructional resources, generate realistic simulations, and visualize complicated scientific concepts, hence improving learning experiences in a variety of areas.

LCMs could also be used in virtual reality and augmented reality to create lifelike virtual worlds, 3D models, and augmented reality content. This could be valuable for immersive gaming experiences, training simulations, and location-based augmented reality apps.

Experiments and their results

The research team used two subsets of the LAION-5B dataset, one with 12 million text-image pairs and the other with 650,000. They generate images at resolutions of 512×512 and 768×768.

Stable Diffusion-V2.1-Base (for 512 resolution) and Stable Diffusion-V2.1 (for 768 resolution) serve as the “teacher” models from which the LCMs are distilled. Each LCM is trained for 100,000 iterations.
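In consistency distillation, the student being trained is usually compared against a slowly moving copy of itself, updated as an exponential moving average (EMA) of the student weights, while the teacher supplies the ODE step between two noise levels. The sketch below shows only the EMA bookkeeping on a plain list of numbers. The weight values and the `decay` of 0.95 are illustrative assumptions, not settings taken from the experiments described here.

```python
def ema_update(target, online, decay):
    """Move every target weight a small step toward the matching online weight."""
    return [decay * t + (1.0 - decay) * o for t, o in zip(target, online)]


def squared_distance(a, b):
    """Sum of squared differences, used here to track how far apart the copies are."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


online = [1.0, -2.0, 0.5]  # hypothetical student weights, held fixed for clarity
target = [0.0, 0.0, 0.0]   # hypothetical target weights, starting elsewhere

gaps = []
for _ in range(100):
    target = ema_update(target, online, decay=0.95)
    gaps.append(squared_distance(target, online))
```

In real training the online weights change at every iteration, so the target lags behind them rather than converging. That lag is what gives the student a stable reference to match.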

To see how well LCM performs, they compare it to other approaches such as DDIM, DPM-Solver, DPM-Solver++, and Guided-Distill, using metrics such as FID and CLIP score.
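To make the two metrics concrete, here is a simplified sketch of the quantities they are built on. FID is a Fréchet distance between Gaussian fits of feature statistics for real and generated images, and a CLIP score is based on the cosine similarity between an image embedding and a text embedding. The version below works on one-dimensional features and tiny hand-written vectors, which is an assumption made for readability; real evaluations use high-dimensional features from pre-trained Inception and CLIP networks.

```python
import math


def mean_and_std(values):
    """Population mean and standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(variance)


def frechet_distance_1d(features_a, features_b):
    """Squared Frechet distance between two 1-D Gaussian fits (lower is better)."""
    mean_a, std_a = mean_and_std(features_a)
    mean_b, std_b = mean_and_std(features_b)
    return (mean_a - mean_b) ** 2 + (std_a - std_b) ** 2


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors (higher is better)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


real_features = [0.9, 1.1, 1.0, 1.2, 0.8]       # made-up numbers
generated_features = [1.4, 1.6, 1.5, 1.7, 1.3]  # made-up numbers
fid_like = frechet_distance_1d(real_features, generated_features)

image_embedding = [0.2, 0.7, 0.1]   # made-up numbers
text_embedding = [0.25, 0.65, 0.05] # made-up numbers
clip_like = cosine_similarity(image_embedding, text_embedding)
```

A lower Fréchet distance means the generated images are statistically closer to real ones, while a higher cosine similarity means the image matches its prompt more closely.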

They also run ablation studies, experimenting with different ODE solvers, skip-step schedules, and guidance scales to see which settings work best for LCM.
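Two of these knobs are easy to sketch. A guidance scale controls classifier-free guidance, which pushes the noise prediction toward the text-conditioned one; the form below, with scale `omega`, is equivalent to the more common `uncond + w * (cond - uncond)` with `w = 1 + omega`. A skip-step schedule enforces consistency between time steps that are `k` apart instead of adjacent ones. The function names and the small example values are illustrative assumptions, not the settings used in the experiments.

```python
def guided_noise(eps_cond, eps_uncond, omega):
    """Classifier-free guidance: (1 + omega) * conditional - omega * unconditional."""
    return [(1.0 + omega) * c - omega * u for c, u in zip(eps_cond, eps_uncond)]


def skip_step_pairs(num_timesteps, k):
    """All (t_{n+k}, t_n) index pairs a skip-step schedule could train on."""
    return [(n + k, n) for n in range(1, num_timesteps - k + 1)]


# With omega = 0 the guided prediction is just the conditional one.
no_guidance = guided_noise([1.0, -0.5], [0.5, 0.0], omega=0.0)

# A larger omega exaggerates the difference between the two predictions.
strong_guidance = guided_noise([1.0, -0.5], [0.5, 0.0], omega=2.0)

# With 10 time steps and k = 3, each pair spans three steps of the trajectory.
pairs = skip_step_pairs(num_timesteps=10, k=3)
```

A larger `k` lets each training comparison cover more of the trajectory, while a larger `omega` generally trades sample diversity for closer adherence to the prompt.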

They do not stop at text-to-image generation. With Latent Consistency Fine-tuning (LCF), they fine-tune LCM on custom image datasets to evaluate how well it adapts to different styles and purposes.

Conclusion

The researchers introduce Latent Consistency Models (LCMs) together with a highly efficient one-stage guided distillation method that enables few-step or even one-step inference on pre-trained LDMs. They also provide Latent Consistency Fine-tuning (LCF), which enables few-step inference on customized image datasets. Extensive experiments on the LAION-5B-Aesthetics dataset show that LCMs achieve state-of-the-art text-to-image generation with few-step inference. In future work, they plan to extend the method to other image generation tasks, such as text-guided image editing, inpainting, and super-resolution.

Reference

https://arxiv.org/pdf/2310.04378v1.pdf

https://github.com/luosiallen/latent-consistency-model

Similar Posts

-

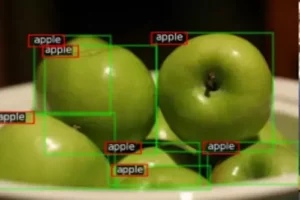

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas