LLEMMA, which is a model for mathematical brilliance, is revealed. LLEMMA is a work of genius. This model outperforms the competition and the unreleased Minerva model on the MATH benchmark. This model can solve any mathematical problem and also the theorems of maths.

Zhangir Azerbayev, Hailey Schoelkopf, Keiran Paster, Marco Dos Santos, Stephen McAleer, Albert Q. Jiang, Jia Deng, Stella Biderman, and Sean Welleck from Princeton University, EleutherAI, University of Toronto, Vector Institute, University of Cambridge, Carnegie Mellon University, and University of Washington are involved in the amazing model’s research work.

LLEMMA is a language model built for mathematics. This was developed after training Code Llama on a variety of publications, websites, and mathematical code. LLEMMA beats all open-source models, including the unpublished Minerva model. When they tested this model against the MATH benchmark with the same number of parameters LLEMMA exceeded this model also. LLEMMA can also execute mathematical activities and theorems without any fine-tuning.

Prior Related works and their Drawbacks

Recent developments in large language modeling have focused on two aspects: continual scalability of model size and training data quantity. There is a trend toward more adaptable models that can solve a wide range of issues and are quickly adjustable. The third component is making these language models widely available to the community.

Machine learning’s application of language models to mathematics challenges. It requires assessing mathematical knowledge and reasoning, getting more capable, and judging the quality of their answers and processes increases more difficult. Prior models emphasize fine-tuning to increase performance on specific mathematical tasks, but this work establishes a base model for applications.

There is a movement in mathematics to connect language models with proof helpers, such as proof generation, and auto formalization. This work shows few-shot proof auto formalization and prediction, providing a large amount of mathematics data as well as an open-access model.

Unveiling about LLEMMA

Language models trained on texts have wide language understanding and generating abilities, allowing them to be basic models for many applications. These models do well for open-ended discussion, domain-specific models, those directed to medical, finance, or science, can perform better in many areas.

They trained a domain-specific language model for mathematics in this project. Mathematics needs pattern matching with prior knowledge. Mathematical reasoning is an important AI model that succeeds in this domain and contributes to a variety of research topics, including reinforcement learning and algorithmic reasoning.

In contradiction to previous domain-specific mathematics models, promotes openness. They present LLEMMA to mathematics by continuous pretraining. This new model is available in 7 billion and 34 billion parameter variants, sets limits for publicly released mathematical models, and can solve mathematical problems utilizing computational tools.

They open-source the AlgebraicStack dataset and training data and code. This study broadens in dimension, availability, and analytical depth, and all research products are freely available.

Code and Research Study Material Availability

The research of this model is available on Arxiv. They made this model open source so the code of this model is also freely available on GitHub. The resources are free for everyone and can be accessible at any time.

Potential Fields of LLEMMA

A future area is looking into broader applications in mathematics teaching and research. They intend to address potentials like memorization and data quality which guarantee that LLEMMA’s performance is strong and reliable. They intend to broaden their research beyond mathematics.

LLEMMA’s few-shot mathematical problem-solving can be used in education to construct intelligent systems for tutoring that help students solve complex arithmetic problems. This can also provide personalized, customized learning experiences to students, improving their abilities in mathematics.

The formal theorem proving this model can help in the advancement of proof verification systems, to the creation of strong, trustworthy software and hardware verification tools. Researchers can reduce the risk of software flaws by computing the verification of formal mathematical proofs.

LLEMMA Architecture and Training Insights

This models are built for mathematics and are available in two sizes: 7 billion and 34 billion parameters. Data: They employed a variety of data sources, including scientific papers, web data, and mathematical code from computer languages. They also used publicly accessible resources for transparency.

improved mathematical capabilities.

Model training:

This model is based on Code Llama. They were trained using self-regressive language modeling. The 7 billion model was trained for a total of 200 billion tokens, while the 34 billion model was trained for a total of 50 billion tokens. The training was on GPUs, with concurrent techniques used to improve efficiency and reduce memory. They altered the RoPE base time before training LLEMMA 7B for further context fine-tuning. This was not possible for the LLEMMA 34B model due to processing constraints.

Experimental Evaluation of LLEMMA

Their goal is to examine this model as a basic model for mathematical understanding of text. They use few-shot evaluation approaches for comparisons. They are mostly interested in models that were not modified with supervised examples.

Mathematical Problem Solving using Chain of Thought:

Researchers assess the ability of the model to solve mathematical problems using a chain of thought. This is evaluated using MATH and GSM8k, which are used to evaluate quantitative reasoning.

Few-Shot Tool Use and Formal Theorem Proving: The second part of their testing, necessitates the use of computational tools and formal theorem proving. Proof-Pile-2 is a collection of 1.5 billion tokens that consists of data from Lean and Isabelle. They do the examination of this model in two specific tasks:

Informal-to-formal proving:

An informal LATEX statement and an informal LATEX proof are given to the model to generate a formal proof. The proof helper examines the formal proof. They analyze miniF2F a benchmark comprising of problem statements from Olympiads and undergraduate coursework, using the Isabelle. They use 11 examples for the prompt, 7 for number theory questions, and 6 for all others. Using greedy decoding, they obtain a single proof.

Formal-to-formal proving:

Developing a sequence of proof to prove a formal statement. The input at each step is provided by the proof helper, and the goal of this model is to construct a proof step. The proof assistant checks the proof step, which results in a new state or an error message. The method is repeated until proof is completed or a timeout is reached. Three samples are used to motivate the model. They analyze using the Lean 4 proof assistant and miniF2F.

Memorization and Data Mixture Research:

They also examine how data mixture affects the model and if the model remembers data from the training. The results suggest that improves few-shot mathematical problem-solving. It beats previous models such as Code Llama and Minerva. LLEMMA is flexible to a wide range of jobs.

LLEMMA in mathematical problem solving is significant. It improves performance on problems of difficulty and shows the model’s ability to solve mathematical problems. This model’s performance on formal mathematics problems is that it can construct formal proofs in formal theorem proving.

In terms of the impact of data combination and memorization, this model has the ability to develop solutions. A high overlap between test cases and training data does not always improve accuracy, particularly when dealing with difficult issues. The performance of LLEMMA demonstrates its value as a basic model for mathematical text understanding.

Final remarks

They present LLEMMA and Proof-Pile-2, a base model for mathematical language modeling. These models, datasets, and code are all freely accessible. They proved that this model produces state-of-the-art results for open-weight models on mathematical problem-solving benchmarks, that it can use external tools via Python code, and that it can foresee few-shot methods for theorem proving.

They hope that LLEMMA and Proof-Pile-2 will serve as a foundation for future work on model generalization and dataset composition, investigating domain-specific models, and using language models as tools for mathematicians.

References

https://arxiv.org/pdf/2310.10631.pdf

https://github.com/EleutherAI/math-lm

Similar Posts

-

LayoutPrompter: Automatic-layout generation using with Large Language Models

-

LOOGLE: Long-Context perceive extended phrases

-

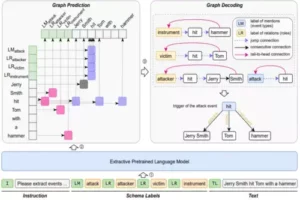

Mirror: An Ubiquitous Model for Information Extraction related Tasks

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

Microsoft Interns Revolutionize AI: LEMA the Error-Driven Learning

-

INT8 quantization: Large Language Models (LLMs) effects on CPU inference