Language models now hold enormous influence, and a quiet truth sits behind them: these systems, trained on vast datasets, can hide secrets inside their parameters, such as copyrighted content and private information. A study by Weijia Shi, Anirudh Ajith, Mengzhou Xia, Yangsibo Huang, Daogao Liu, Terra Blevins, Danqi Chen, and Luke Zettlemoyer of the University of Washington and Princeton University examines how to uncover them.

In this study, they investigate a problem with large language models (LLMs): these models are widely used, yet the data used to train them is usually kept private. Because that data is so large (trillions of words), it may contain sensitive material such as copyrighted works, personal information, and benchmark test data.

To address this, they propose MIN-K% PROB. The technique rests on a simple hypothesis: a text the model did not see during training is likely to contain a few outlier words with low probabilities, whereas a text the model did see is less likely to contain words with such low probabilities.

Notably, MIN-K% PROB requires no knowledge of the pretraining corpus and no additional training. This sets it apart from earlier detection methods, which require training a reference model on data similar to the LLM's pretraining data.

They apply MIN-K% PROB to three real-world scenarios: copyrighted book detection (finding excerpts from copyrighted books that may have been used to train GPT-3), contaminated downstream example detection (assessing whether downstream task data has leaked into pretraining datasets), and privacy auditing of machine unlearning (testing whether an unlearned model can still complete stories from suspicious passages of text).

Prior Related Studies and Their Limitations

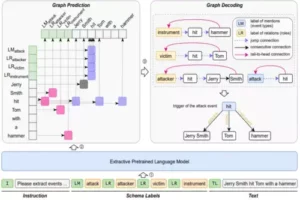

Membership inference attacks (MIAs) aim to determine whether a given data sample was part of a model's training data. Such attacks can compromise privacy and lead to serious violations. MIAs are also used to evaluate privacy risks in machine learning and to confirm the efficacy of privacy-preserving techniques. MIAs were first applied to tabular and computer vision data but have since been extended to language tasks. This research focuses on using MIAs to detect pretraining data.

Prior research on dataset contamination in language models treated examples as contaminated when they overlapped with the training data. These methods require access to the pretraining data, which may not be available for newer models. Other strategies prompt models to generate examples given a dataset's name and split; these approaches can investigate contamination even in closed-source models.

Basics about MIN-K% PROB

The scale of language model (LM) training data has grown in recent years, and developers of models such as GPT-4 and LLaMA 2 have become reluctant to disclose what their data contains. This lack of transparency raises concerns for both model evaluation and societal impact. Private information such as copyrighted content and personal communications may be exposed, and benchmark data may be included unintentionally, which makes it difficult to evaluate these models fairly.

In this study, they address pretraining data detection: determining whether a piece of text was part of an LM's training data. They introduce the WIKIMIA benchmark and the MIN-K% PROB method. The problem is related to membership inference attacks (MIAs), but it poses unique challenges for LLMs because each example is typically seen only once during pretraining, and existing methods depend on reference models. Their benchmark is designed to be accurate, general, and dynamic.


To assess whether a text is in the pretraining data of an LLM such as GPT, MIN-K% PROB first computes the probability of each token in the text, then selects the k% of tokens with the lowest probabilities and computes their average log-likelihood. A high average log-likelihood indicates that the text is likely in the pretraining data.
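
The scoring rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' released code: it assumes you already have the per-token log-probabilities of the text under the target model (for example, derived from a causal LM's output logits), and the function name `min_k_percent_prob` and the default `k = 0.2` (20%) are illustrative choices.

```python
import math
from typing import Sequence


def min_k_percent_prob(token_logprobs: Sequence[float], k: float = 0.2) -> float:
    """Average log-likelihood of the k fraction of tokens with the lowest probability.

    token_logprobs[i] is assumed to hold log p(x_i | x_1, ..., x_{i-1}) from the target LLM.
    A higher (less negative) score suggests the text is more likely to be pretraining data.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    if not 0.0 < k <= 1.0:
        raise ValueError("k must be in (0, 1]")
    # Keep the lowest-probability k% of tokens, but always at least one token.
    n = max(1, int(len(token_logprobs) * k))
    lowest = sorted(token_logprobs)[:n]
    return sum(lowest) / n


# Two toy five-token texts: the second contains one very unlikely "outlier" token.
seen_like = [math.log(p) for p in (0.9, 0.8, 0.7, 0.6, 0.5)]
unseen_like = [math.log(p) for p in (0.9, 0.8, 0.7, 0.6, 0.01)]

print(min_k_percent_prob(seen_like))    # average of the lowest 20%: log(0.5)
print(min_k_percent_prob(unseen_like))  # average of the lowest 20%: log(0.01)
```

Keeping only the lowest-probability tokens is what makes the score sensitive to a few outliers; a plain average over all tokens would let many well-predicted tokens mask them. To turn the score into a member/non-member decision, compare it against a threshold chosen on held-out data.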

To address these issues, they created WIKIMIA, a benchmark for evaluating detection methods on recently released pretrained LLMs. It is built by combining older and newer Wikipedia content, which makes it accurate, general, and dynamic. They also present MIN-K% PROB, a reference-free MIA method that improves on the best existing method on WIKIMIA by 7.4%.

They also demonstrate the value of MIN-K% PROB in practical applications such as copyrighted book detection, privacy auditing of LLMs, and dataset contamination detection. These experiments also shed light on how pretraining choices affect detection difficulty.

Code and Research Paper availability

The code and research paper for this work are openly available: the paper is on arXiv, and the implementation is on GitHub (see References).

Potential Fields of MIN-K% PROB

The study emphasizes the significance of membership inference attacks (MIAs) as a tool for evaluating privacy risks, with applications such as detecting data leakage from machine learning models and assessing the efficacy of privacy-preserving systems. It also highlights the recent use of MIAs for pretraining data detection, an area that has been gaining research attention.

The study also surveys approaches for detecting contaminated examples and the difficulty of detecting contamination without direct access to pretraining corpora. This includes research aimed at improving contamination detection in language models and at investigating model behavior on specific data. This material is useful for researchers working on language model privacy and security.

Data Detection Challenges

Their goal is to reveal what large language models (LLMs) were trained on: determining whether a given text was part of an LLM's training data.

Problem Definition and Obstacles:

They frame the task as a membership inference attack (MIA) on pretraining data rather than on fine-tuning data. The goal is to build a detector that can tell whether a text is part of an LLM's training data even without access to that data. Two challenges arise:

Challenge 1: The distribution of the pretraining data is unknown. Prior methods assume access to data from the same distribution as the LLM's training data (for example, to train a reference model), but this setting assumes the opposite, which makes the obstacle both realistic and serious.

Challenge 2: Pretraining differs from fine-tuning in data size, computational resources, and training settings (for example, pretraining usually sees each example only once). These differences make detection harder, which they show both theoretically and empirically.


WIKIMIA: An Evaluation Tool

WIKIMIA is the benchmark at the center of their study, built to evaluate methods for detecting pretraining data. They collect Wikipedia pages about recent events (after 2023) and treat them as non-member data. They treat articles created before 2017 as member data, because many pretrained models were released after that year and include Wikipedia in their training data. To ensure the quality of WIKIMIA, they filter out uninformative or irrelevant Wikipedia pages.
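
The date-based labeling rule can be sketched as follows. This is a hypothetical illustration: the `WikiPage` record, its `created` field, and the exact cutoff dates are assumptions, and the real benchmark's page filtering is not modeled beyond dropping empty pages.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple

# Hypothetical cutoffs: pages created before 2017 count as members,
# pages about events from 2023 onward count as non-members.
MEMBER_BEFORE = date(2017, 1, 1)
NON_MEMBER_FROM = date(2023, 1, 1)


@dataclass
class WikiPage:
    title: str
    text: str
    created: date  # hypothetical field: creation date of the page (or date of the event)


def label_page(page: WikiPage) -> Optional[int]:
    """Return 1 for member, 0 for non-member, or None if the page falls between the cutoffs."""
    if page.created < MEMBER_BEFORE:
        return 1
    if page.created >= NON_MEMBER_FROM:
        return 0
    return None  # in between: membership is uncertain, so the page is left out


def build_benchmark(pages: List[WikiPage]) -> List[Tuple[str, int]]:
    """Collect (text, label) pairs, skipping unlabeled and empty pages."""
    examples = []
    for page in pages:
        label = label_page(page)
        if label is not None and page.text.strip():
            examples.append((page.text, label))
    return examples


pages = [
    WikiPage("Old article", "An article written long ago.", date(2015, 6, 1)),
    WikiPage("Middle article", "An article from 2020.", date(2020, 3, 1)),
    WikiPage("Recent event", "An event that happened in 2023.", date(2023, 5, 1)),
]
print(build_benchmark(pages))
```

Leaving out the pages between the two cutoffs is the key design choice: their membership cannot be established with confidence, so excluding them keeps the labels accurate.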

They recognize that LM users may need to detect paraphrased or modified texts, so they add a paraphrased setting for evaluating MIA performance. They also note that text length affects detection difficulty, so they propose a variable-length setup: they partition the Wikipedia data by length and report detection performance on each segment.
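
One plausible way to build length-specific splits is to truncate each labeled text to several word counts. This sketch is an assumption, not the benchmark's documented procedure: the function name is hypothetical, and skipping texts shorter than a target length is a choice made here for illustration.

```python
from typing import Dict, List, Sequence, Tuple

# Illustrative target lengths, in words.
LENGTHS = (32, 64, 128, 256)


def split_by_length(
    examples: Sequence[Tuple[str, int]], lengths: Sequence[int] = LENGTHS
) -> Dict[int, List[Tuple[str, int]]]:
    """Build one split per target length by truncating each (text, label) example.

    Texts shorter than a target length are skipped for that split (an assumption).
    """
    splits: Dict[int, List[Tuple[str, int]]] = {n: [] for n in lengths}
    for text, label in examples:
        words = text.split()
        for n in lengths:
            if len(words) >= n:
                splits[n].append((" ".join(words[:n]), label))
    return splits


example = (" ".join(f"w{i}" for i in range(100)), 1)  # a toy 100-word member text
splits = split_by_length([example])
print({n: len(items) for n, items in splits.items()})
```

Reporting detection metrics per split then shows how performance changes with text length.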

Experiments and Results

Using the WIKIMIA benchmark, they evaluated MIN-K% PROB on several language models, including LLaMA, GPT-Neo, and Pythia. Datasets and metrics: they ran experiments on WIKIMIA splits of different lengths (32, 64, 128, and 256) in both the original and paraphrased settings. Detection was assessed with the true positive rate (TPR) and false positive rate (FPR); the primary metrics were the area under the ROC curve (AUC) and the TPR at low FPRs.
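
Both metrics can be computed from a list of detector scores and member labels. The sketch below uses the pairwise definition of AUC (the probability that a random member outscores a random non-member) and a simple threshold sweep for TPR at a fixed FPR. The function names are hypothetical, the quadratic loops are chosen for clarity rather than speed, and the 5% FPR default is only an illustrative "low FPR".

```python
from typing import List, Sequence, Tuple


def _split(scores: Sequence[float], labels: Sequence[int]) -> Tuple[List[float], List[float]]:
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one member (1) and one non-member (0)")
    return pos, neg


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the probability that a random member outscores a random non-member (ties = 0.5)."""
    pos, neg = _split(scores, labels)
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def tpr_at_fpr(scores: Sequence[float], labels: Sequence[int], max_fpr: float = 0.05) -> float:
    """Best TPR over thresholds t (predict member iff score >= t) whose FPR is <= max_fpr."""
    pos, neg = _split(scores, labels)
    best = 0.0  # a threshold above every score gives TPR = FPR = 0
    for t in sorted(set(scores)):
        tpr = sum(s >= t for s in pos) / len(pos)
        fpr = sum(s >= t for s in neg) / len(neg)
        if fpr <= max_fpr:
            best = max(best, tpr)
    return best


scores = [0.9, 0.4, 0.6, 0.1]  # higher = more likely a member
labels = [1, 1, 0, 0]
print(roc_auc(scores, labels), tpr_at_fpr(scores, labels, max_fpr=0.0))
```

AUC summarizes ranking quality across all thresholds, while TPR at a low FPR reflects how useful the detector is when false accusations of membership must be rare.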

Baseline detection methods: they compared against established reference-based and reference-free methods, such as the LOSS attack (PPL) and the neighborhood attack (Neighbor). Results: MIN-K% PROB consistently outperformed all baselines in both the original and paraphrased settings, reaching an average AUC of 0.72, a 7.4% improvement over the best baseline.
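
The toy contrast below shows why focusing on the lowest-probability tokens can matter compared with the LOSS (PPL) baseline, which in its simplest form scores a text by its average loss, or perplexity, over all tokens. This is a hypothetical sketch: the log-probabilities are made-up numbers, the baselines evaluated in the paper may differ in detail, and the Neighbor attack is not shown.

```python
import math
from typing import Sequence


def loss_score(token_logprobs: Sequence[float]) -> float:
    """LOSS/PPL-style score: mean log-likelihood over all tokens (higher = more member-like)."""
    return sum(token_logprobs) / len(token_logprobs)


def perplexity(token_logprobs: Sequence[float]) -> float:
    return math.exp(-loss_score(token_logprobs))


def min_k_percent_prob(token_logprobs: Sequence[float], k: float = 0.2) -> float:
    """Mean log-likelihood of only the lowest-probability k fraction of tokens."""
    n = max(1, int(len(token_logprobs) * k))
    return sum(sorted(token_logprobs)[:n]) / n


# Same average log-likelihood, different worst-case tokens.
uniform = [-1.0, -1.0, -1.0, -1.0, -1.0]
with_outlier = [-0.25, -0.25, -0.25, -0.25, -4.0]

print(perplexity(uniform), perplexity(with_outlier))  # identical
print(min_k_percent_prob(uniform), min_k_percent_prob(with_outlier))
```

Perplexity cannot tell these two texts apart, while the MIN-K% PROB score ranks the text with the outlier token as less likely to be training data, which matches the hypothesis behind the method.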

They also investigated factors that affect detection difficulty. Detection performance improved with model size, with larger models yielding better detection. Longer text samples were also easier to detect, because they contain more information that the model may have memorized.

Next, they apply MIN-K% PROB to copyrighted books and contaminated downstream tasks.

DETECTING COPYRIGHTED BOOKS

MIN-K% PROB can flag likely copyright violations in training data. The researchers used it to find excerpts from copyrighted books in the Pile dataset that may have been used to train GPT-3.

To build a validation set, they took 50 books known to be memorized by ChatGPT as positive examples and 50 new books first published in 2023, which could not have been part of the training data, as negative examples. This produced a balanced validation set of 10,000 samples, which they used to find the best classification threshold for MIN-K% PROB.
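
Choosing the threshold on the validation set can be sketched as a sweep over candidate cut points. This assumes that "best" means highest accuracy on the balanced set, and the function name and toy scores are hypothetical.

```python
from typing import Sequence, Tuple


def best_threshold(scores: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Return (threshold, accuracy) maximizing accuracy of 'member iff score >= threshold'.

    Assumes "best" means highest accuracy on a balanced validation set.
    """
    best_t, best_acc = float("inf"), -1.0
    # Every observed score is a candidate cut point; +inf means "predict nothing is a member".
    for t in sorted(set(scores)) + [float("inf")]:
        correct = sum((s >= t) == (y == 1) for s, y in zip(scores, labels))
        acc = correct / len(scores)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc


# Toy validation scores: memorized-book excerpts (1) vs. 2023-book excerpts (0).
val_scores = [-1.0, -1.5, -3.0, -4.0]
val_labels = [1, 1, 0, 0]
print(best_threshold(val_scores, val_labels))
```

Because the validation set is balanced, plain accuracy is a reasonable criterion; on an imbalanced set, a metric such as balanced accuracy would be a safer choice.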

For testing, they chose 100 books from the Books3 dataset that were known to contain copyrighted content and sampled 100 random 512-word excerpts from each book, giving a test set of 10,000 excerpts. Using the best MIN-K% PROB threshold from the validation set, they decided whether each excerpt was included in GPT-3's pretraining data and then reported the proportion of such excerpts for each book.
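
Once a threshold is fixed, the per-book proportion is a simple aggregation over excerpt scores. In the sketch below, the `(book_id, score)` input format and the function name are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def detected_fraction_per_book(
    excerpts: Iterable[Tuple[str, float]], threshold: float
) -> Dict[str, float]:
    """For each book, the fraction of its excerpts whose score is >= threshold."""
    flagged: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for book_id, score in excerpts:
        total[book_id] += 1
        if score >= threshold:
            flagged[book_id] += 1
    return {book: flagged[book] / total[book] for book in total}


# Toy (book_id, MIN-K% PROB score) pairs; the study samples 100 excerpts per book.
excerpts = [("book_a", -1.0), ("book_a", -3.0), ("book_b", -0.5), ("book_b", -0.7)]
print(detected_fraction_per_book(excerpts, threshold=-1.5))
```

A high fraction for a book means many of its excerpts look like training data to the detector, which is stronger evidence than any single excerpt on its own.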

DETECTING DOWNSTREAM DATASET CONTAMINATION

Because pretraining data is rarely accessible, it is difficult to assess whether downstream task data has leaked into pretraining datasets. They study how MIN-K% PROB can identify such leakage and run ablations to understand how training parameters affect detection difficulty.

They simulate contamination by inserting downstream task examples into a pretraining corpus: samples from BoolQ, IMDB, TruthfulQA, and CommonsenseQA are added to clean text from the RedPajama corpus. They then evaluate how well MIN-K% PROB detects the leaked benchmark examples, using a LLaMA 7B model further trained for a single epoch on the contaminated pretraining data.
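
The contamination setup can be illustrated with a small mixing function. This is a hypothetical sketch: the strings are placeholders rather than real BoolQ or IMDB samples, the function name is invented, and the actual procedure for inserting examples into RedPajama documents is not described here. The `occurrences` parameter shows how one might vary how often each leaked example appears.

```python
import random
from typing import List, Sequence


def contaminate(
    corpus: Sequence[str], downstream: Sequence[str], occurrences: int = 1, seed: int = 0
) -> List[str]:
    """Return a shuffled copy of `corpus` with each downstream example added `occurrences` times."""
    rng = random.Random(seed)  # fixed seed so the mixed corpus is reproducible
    mixed = list(corpus)
    for example in downstream:
        mixed.extend([example] * occurrences)
    rng.shuffle(mixed)
    return mixed


clean = ["clean doc 1", "clean doc 2", "clean doc 3"]
leaked = ["BoolQ-style example", "IMDB-style example"]
mixed = contaminate(clean, leaked, occurrences=2)
print(len(mixed), mixed.count("IMDB-style example"))
```

Since the inserted examples are known in advance, they serve as ground-truth members when scoring the trained model, and held-out examples from the same tasks serve as non-members.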

MIN-K% PROB outperforms all baselines at finding leaked examples. As the size of the dataset grows, the AUC scores increase as well, which is consistent with findings that language models memorize rarely occurring examples better.

AUC scores also rise with how frequently an example occurs in the data. Finally, a higher learning rate during pretraining improves AUC, because higher learning rates lead to stronger memorization of pretraining data.

PRIVACY AUDITING OF MACHINE UNLEARNING

In the context of machine learning, privacy regulations such as the GDPR and the CCPA require respecting an individual's right to be forgotten. Machine unlearning aims to remove specific data from trained models. They evaluate how well unlearning worked for a model named LLaMA2-7B-WhoIsHarryPotter, using story completion and question-answering tasks about Harry Potter.

MIN-K% PROB is used to detect suspicious text, that is, passages on which the unlearning process appears to have failed. The model's ability to complete the story from these suspicious passages is then tested. For question answering, they generate Harry Potter questions and apply MIN-K% PROB to identify questions where unlearning may have failed. The unlearned model answers these questions, and its answers are compared with GPT-4's answers to assess the effectiveness of unlearning.
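
Selecting suspicious items can be sketched as ranking candidates by their MIN-K% PROB score on the unlearned model and keeping the highest-scoring ones, since a high score suggests that the model still finds even its least likely tokens fairly probable. The function name and the `top_n` cutoff are hypothetical, and the study's exact selection rule may differ.

```python
from typing import List, Sequence, Tuple


def most_suspicious(items: Sequence[Tuple[str, float]], top_n: int) -> List[str]:
    """Return the texts of the top_n items with the highest MIN-K% PROB scores.

    Each item is (text, score), where the score is assumed to come from the unlearned model.
    """
    ranked = sorted(items, key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in ranked[:top_n]]


candidates = [("passage A", -3.0), ("passage B", -0.5), ("passage C", -1.0)]
print(most_suspicious(candidates, top_n=2))
```

Probing only the top-ranked items focuses the expensive generation and comparison step on the places where unlearning is most likely to have failed.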

They find that the unlearned model still generates very similar story completions, with some portions closely matching the original story. In question answering, they observe a ROUGE-L recall of 0.23 on the selected questions, suggesting possible unlearning failure. The unlearned model correctly answers some Harry Potter questions, showing that the knowledge has not been completely removed.
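
ROUGE-L recall measures how much of a reference answer appears, in order, in a generated answer. It is the length of their longest common subsequence (LCS) divided by the length of the reference. Below is a minimal sketch. It uses lowercase whitespace tokenization, which is a simplification, since standard ROUGE packages may tokenize and stem differently. It also assumes that the reference is, for example, GPT-4's answer and that the candidate is the unlearned model's answer.

```python
from typing import Sequence


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence, using a rolling one-row DP table."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_recall(candidate: str, reference: str) -> float:
    """LCS length divided by the number of reference tokens (0.0 for an empty reference)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not ref:
        return 0.0
    return lcs_length(cand, ref) / len(ref)


print(rouge_l_recall("the cat sat", "the big cat sat down"))
```

Recall, rather than precision, fits this use: it asks how much of the reference knowledge the unlearned model still reproduces, regardless of any extra words it adds.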

Final Remarks on MIN-K% PROB

They introduce WIKIMIA, a benchmark for pretraining data detection, and MIN-K% PROB, a new detection method.

Their method is based on the idea that text seen during training contains fewer tokens with very low probabilities than unseen text. To validate the approach in real-world settings, they conducted two case studies: detecting dataset contamination and detecting copyrighted books.

Their contamination experiments match theoretical expectations about how detection difficulty changes with dataset size, example frequency, and learning rate. Their book detection experiments provide strong evidence that GPT-3 models may have been trained on copyrighted books.

References

https://github.com/swj0419/detect-pretrain-code

https://arxiv.org/pdf/2310.16789.pdf
