Let’s explore the exceptional capability of OtterHD, transforming high-resolution images into text seamlessly, capturing every intricate detail without omission. This model proudly showcases its capacity to manage adaptable input dimensions, securing its versatility for a wide range of inference needs.

The AI researchers Bo Li, Peiyuan Zhang, Jingkang Yang, Yuanhan Zhang, Fanyi Pu and Ziwei Liu from S-Lab, Nanyang Technological University, Singapore presented this outstanding model.

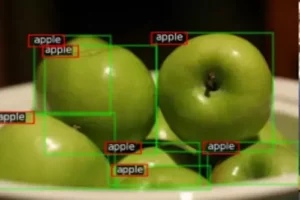

The OtterHD is an extremely resourceful multimodal model which is adapted from Fuyu-8B which is explicitly designed to interpret detailed visual inputs at high resolution with meticulous accuracy. Parallel to this model, an evaluation framework, MagnifierBench was also introduced. This framework is crafted to examine the capability of models in discerning intricate details and spatial relationships of small objects.

A high-resolution image is taken as an input along with the text to ask for required information. The model can adjust extensive range of resolution up to 1024*1024 pixels. The output is shown in the form of detailed text asked with the input images.

The growing effectiveness of extensive models concentrating on a singular modality such as vision models and language models has prompted a recent upswing in research investigating amalgamations of these models. The aim was to design a model that integrate different modalities into a unified structure such as Flamingo and Otter. Also, Otter is an advanced version of Flamingo.

Otter was designed to support multi-modal in-context instruction tuning based on the OpenFlamingo model. Otter was trained on MIMIC-IT dataset. In these models, capturing fine visual intricacies, especially those pertaining to smaller objects were not addressed within LLMs domain, recent models and benchmarks.

Lets Explore OtterHD

The latest model OtterHD, which is a fine-tuned with advanced instruction, is evolved from Fuyu-8B architecture. This model is open-sourced and explicitly designed to cater images with customize input resolution size which can be high up-to 1024*1024 pixels. Whereas, previous models does not support in taking input image with variable resolutions. MagnifierBench was also introduced, a distinctive benchmark that focus on judging the abilities of latest LLMs in identifying small details and relationships between objects within large images. The code is available on GitHub and research paper is also open source, available on Arxiv.

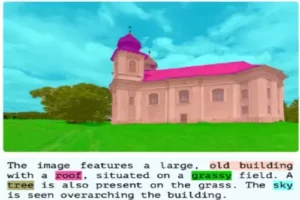

Did you play the game where we have to find and count the objects from the images? The model OtterHD also works like an engaging game in which it can count the objects and find out the relationship between those objects in a meaningful caption. Lets go through an example.

The input image with Query prompt is given to the OtterHD model. The model perceive and recognize the image by generating the caption that “The image is a traditional Chinese painting from the Song Dynasty, Along the River During the Qingming Festival.” This is a high-resolution input image with a resolution of about 2466×1766.

Dataset for OtterHD

370K responses/instructions were combined together from different public dataset such as LLaVA-Instruct, VQAv2, GQA, OKVQA, OCRVQA, A-OKVQA, COCO-GOI, COCO-Caption, TextQA, RefCOCO, COCOITM, ImageNet and LLaVA-RLHF. All of these datasets were organized into a response/instruction pairs to create uniformity in samples.

MagnifierBench as a Latest Benchmark

There was an immediate requirement for a benchmark that evaluates the capability of LMMs to distinguish small object details within high-resolution input images. The researchers introduced MagnifierBench to address this specific gap. The dataset used for this benchmark offers two answer formats that is multiple choice options, presented with a question along with multiple options for answers and freeform responses, creating a written explanation that matches the visual input.

The above examples showcasing the three question types within the MagnifierBench, each linked to two corresponding types of questions and their respective answers. The images are of high-resolution, central image with 640×480 pixels while left and right images has 1080×1920 pixels.

OtterHD performance was evaluated on newly proposed MagnifierBench and existing LMM benchmarks. The performance was analyzed on POPE, MM-Vet, MMBench, MathVista and newly developed MagnifierBench under the protocols of multiple-choice protocol and the free-form answering. The evaluation showed that while different models performed better on established benchmarks such as MME and POPE their performance declines on MagnifierBench showing the importance of holistic assessment of LLMs ability on details.

Comparison and Evaluation

OtterHD was qualitatively compared to Fuyu and LLaVA to check its performance. The result is shown below;

Wrap Up

The OtterHD which is expanding upon the groundbreaking structure of Fuyu-8B, processes images on different resolutions. It showed exceptional outcomes with high-resolution images. OtterHD showed outstanding results on MagnifierBench, showing more accurate results with higher resolution. This model showed versatility by handling a diverse array of tasks and resolution making it commendable for various multi-modal applications.

References

Similar Posts

-

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

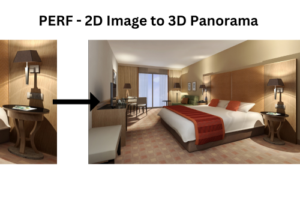

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas