Enter a world where language and visuals combine, modern technology faces a dangerous opponent – hallucination. Explore the never-ending work to make artificial intelligence more independent. Shukang Yin, Chaoyou Fu, Sirui Zhao, Tong Xu, Hao Wang, Dianbo Sui, Yunhang Shen, Ke Li, Xing Sun, and Enhong Chen from the School of Data Science, USTC & State Key Laboratory of Cognitive Intelligence, Tencent YouTu Lab are the researchers involved in this study.

In Multimodal Large Language Models (MLLMs), hallucination presents a serious challenge. It describes in which the text generated by the models in question does not align correctly with the content of supporting images. Current research depends on instruction-based fine-tuning for combating these hallucinations, needing training models with data.

They provide a solution named “Woodpecker“. Similar to the way a woodpecker repairs trees, their technique detects and corrects hallucinations in created text. The main concept extraction, question formulation, visual insight validation, visual claim production, and hallucination correction are the five separate steps of Woodpecker. Woodpecker implemented as a post-processing step, can easily applied to various MLLMs and provides visibility by allowing users to see intermediate outputs at each level.

Prior Related Work and Limitations

Recently, researchers have attention to an issue known as ‘hallucination’ in MLLMs. This is important since it impacts the reliability. People have been working on two major issues: first, figuring out evaluation/detection, and second, figuring out mitigation.

Some investigators have models to determine hallucinations. Other researchers examine the text whether it fits what it ought to mean based on the proper answers. Previously researchers have been working on hallucinations to improve the instruction and guarantee that the replies are not long.

Knowledge-augmented LLM: Some have attempted to enhance LLMs with external knowledge, like facts from databases. Their methods are similar in that they employ information related to the current image to correct any incorrect assertions. Applying this concept to image-text pairs is difficult. Some deal with correcting factual errors, while they are more worried about visual hallucinations. Researchers created a method for creating a well-organized visual knowledge base image and the query and dealing with various sorts of hallucinations.

LLM-Aided Visual Reasoning: Their method for LLM-Aided Visual Reasoning models, rely on LLMs’ reasoning and language abilities to help with visual problems. LLMs are used to extract crucial concepts, generate questions, and correct hallucinations.

Introduction about Woodpecker

Multimodal Large Language Models (MLLMs) are currently blooming in the scientific community, with the goal of achieving Artificial General Intelligence. These models connect Large Language Models (LLMs) to different sorts of data, such as photos and text. The objective is to provide MLLMs with extraordinary skills like understanding and characterizing visuals.

MLLMs, have many strengths and make blunders. They may describe visuals that do not match reality, which is known as ‘hallucination.’ Hallucinations are a barrier to making MLLMs operational.

When faced with hallucinations, previous research has concentrated on training models with instructions to prevent errors. One common issue arises when the generated text is excessively lengthy. Some systems limit the duration of the text in order to control hallucinations.

They adopt a unique approach with ‘Woodpecker.’ It is a training-free method that can directly fix hallucinations without retraining. When an MLLM provides a wrong description for an image, Woodpecker gets involved to correct it. It offers proof of why the repair is required, making the modifications easier. This increases both the openness and dependability of models.

Their strategy consists of five steps: Identifying concepts in the text, Inquiring about these concepts, Obtaining answers using models, Consolidating the questions and answers into a repository of knowledge, and Making adjustments based on this information.

.webp)

They tested Woodpecker on many datasets and discovered improvements. On the POPE benchmark, it increased MiniGPT-4/mPLUG-Owl accuracy from roughly 54-62% to around 85-86%.

In summary, their contributions are as follows: introducing Woodpecker, a training-free method for repairing hallucinations in MLLMs, developing a transparent process, and demonstrating its efficacy with results.

Code and Research Paper Accessibility

The code of this model is available on GitHub. The research paper is also open source and available on Arxiv.

Potential Fields of Woodpecker

It can greatly improve AI content development by minimizing hallucinations, guaranteeing that outputs from chatbots, content generators, or virtual assistants are accurate. The system may be used to perform automated verification in news articles, reports, or any AI-generated content, correcting factual flaws and increasing information quality.

In education, systems this model can be used to improve the quality of answers to students, ensuring accuracy and improving the learning experience. This model can be used in content for online platforms. Search engines like Google and many others can take advantage of the framework to give better and more reliable results.

This model can help the healthcare sector verify the correctness of medical information and guarantee that patients get trustworthy health-related advice. It is useful in information collection, to ensure that the material they deliver is accurate and free of hallucinations.

Methods used in Woodpecker

Their purpose is to identify and correct errors in MLLM. Identifying errors and organizing facts so that they can make corrections. This procedure is divided into five steps:

Key Concept Extraction: They begin by identifying the most essential points raised in the answer. This allows them to concentrate on what has gone wrong.

Question Formulation: Once they have determined what’s important, they ask questions about it. These questions assist them in identifying errors in the objects’ and their characteristics.

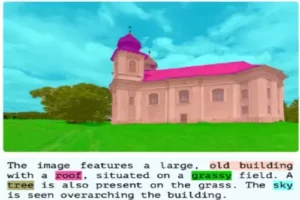

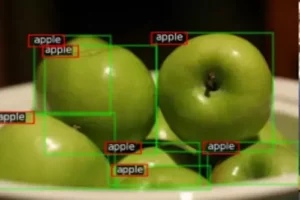

Visual Knowledge Validation: They utilize an object detector that is good at items in photos to answer questions about objects. They utilize a model that has been trained to answer questions about photos to answer.

MLLM returns the appropriate response. They acquire a visual knowledge base particular to the image and the original response after four steps: key idea extraction, question formulation, visual knowledge validation, and visual claim creation. The hallucinations in the response are corrected in the final phase using the bounding boxes as proof.

Visual Claim Generation: They get the answers to their questions, and then convert them into ‘claims,’ which are statements about what is in the image. This helps them check what is correct and incorrect.

Hallucination Correction: They get an MLLM to correct its faults in the answer. It adds boundary boxes to indicate fixed objects, making it easier to see what is being changed.

Experimentations and Results of Woodpecker

There are some experimental conditions and implementation details used in this study and also the achieved results in this model.

Experimental Conditions:

The studies have been carried out on three datasets: POPE, MME, and LLaVA-QA90, all of which were designed to assess hallucinations in MLLMs. Assessed object-existence hallucinations in three settings: random, popular, and antagonistic. Positive and negative samples were balanced at 50% each, with 50 images and 6 questions for each image. Across multiple splits, MME measured either object-level or attribute-level hallucinations. Scores were used as evaluation metrics. MLLMs were evaluated based on their answers to description-type queries.

Mainstream MLLMs such as mPLUG-Owl, LLaVA, MiniGPT-4, and Otter were employed, which rely on the ‘vision encoder-interface-language model’ design. Three pre-trained models were used in the corrective framework: GPT-3.5-turbo for key idea extraction, question formulation, and hallucination correction. Grounding DINO for open-set object detection; and BLIP-2-FlanT5XXL for VQA.

Evaluation and Results:

The results showed that MiniGPT-4 had inferior perceptual abilities in the random situation, whilst mPLUG-Owl and Otter were overconfident. Woodpecker increased accuracy, precision, recall, and F1-score metrics. MME results showed that Woodpecker was very effective in count-related hallucinations. It improved for attribute-level hallucinations. Woodpecker consistently raised proficiency and detailedness scores for MLLMs in this open-answer examination.

It was discovered that the open-set detector mostly improved object-level hallucinations but the VQA model effectively minimized attribute-level hallucinations.

Correction performance analysis revealed that Woodpecker’s accuracy rate is 79.2% while maintaining low omission and mis-correction rates. This demonstrated that the modification was effective without being overconfident. The findings show that Woodpecker is an effective paradigm for addressing hallucinations in MLLM responses across a wide range of evaluation datasets and contexts.

Conclusion

In this paper, they present the first correction-based paradigm for reducing hallucinations in MLLMs. Their methodology combined numerous off-the-shelf models and could be easily implemented into other MLLMs as a training-free strategy. To assess the efficacy of the proposed 8 Score system, they ran extensive experiments on three benchmarks in various circumstances, including GPT-4V for direct and automatic evaluation. They expect that their effort may generate fresh ideas for dealing with hallucinations in MLLMs.

References

https://github.com/BradyFU/Woodpecker

https://arxiv.org/pdf/2310.16045v1.pdf

Similar Posts

-

UniRef++: A Unified Object Segmentation Model

-

Monkey: A model for text generation from high-resolution image

-

TopicGPT: In-context Topic Generation using LLM (Large Language Model)

-

GLaMM: Text Generation from Image through Pixel Grounding Large Multimodal Model

-

RegionSpot: Identify and validate the object from images

-

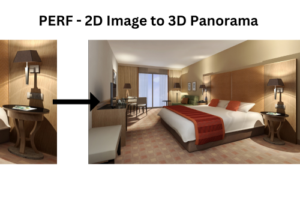

PERF: Nanyang Technological University Presents a Revolutionary Approach to 3D Experiences with Single Panoramas