The United States Environmental Protection Agency prohibited its employees from using ChatGPT, while U.S. State Department staff in Guinea used it to draft speeches and social media posts.

Citing cybersecurity concerns, Maine banned its executive branch employees from using generative artificial intelligence for the remainder of the year. In neighboring Vermont, government workers are using it to learn new programming languages and write internal-facing code, according to Josiah Raiche, the state’s head of artificial intelligence.

San Jose, California, wrote 23 pages of generative AI guidelines and requires city workers to fill out a form every time they use a tool like ChatGPT, Bard, or Midjourney. Less than an hour's drive north, Alameda County's government has hosted workshops to educate employees about the risks of generative AI, such as its tendency to produce convincing but false information, but does not yet see the need for a formal policy.

“We’re more concerned with what you can do than what you can’t,” says Sybil Gurney, assistant chief information officer for Alameda County. County employees are “doing a lot of their written work using ChatGPT,” according to Gurney, and have used Salesforce’s Einstein GPT to simulate users for IT system tests.

Governments at every level are looking for ways to use generative AI. State and city officials told WIRED that they believe the technology can ease some of the most frustrating aspects of bureaucracy by reducing routine paperwork and making it easier for the public to find and understand complex government information. But governments face obstacles that differ from those in the private sector, including strict transparency requirements, elections, and a sense of civic responsibility.

“Everyone cares about accountability, but when you’re literally the government, it’s taken to a different level,” says Jim Loter, interim chief technology officer for the city of Seattle, which announced preliminary generative AI rules for its staff in April.

That level of scrutiny of government employees will almost certainly continue. The cities of San Jose and Seattle, as well as the state of Washington, have all warned staff in their generative AI policies that any information entered as a prompt into a generative AI tool automatically becomes subject to disclosure under public records laws.

That information is also automatically ingested into the corporate databases used to train generative AI tools, and it could be repeated to another person using a model trained on the same data set. Indeed, a large study published last November by the Stanford Institute for Human-Centered Artificial Intelligence suggests that the more accurate large language models are, the more likely they are to reproduce whole blocks of text from their training data.

According to Albert Gehami, San Jose’s privacy officer, rules in his city and others may change dramatically in the coming months as use cases become clearer and public workers learn how generative AI differs from the technologies already in wide use.

Using generative AI to draft a document for the public is not specifically forbidden under San Jose’s guidelines, but it is considered “high risk” because of the technology’s potential for introducing false information and because the city is particular about how it communicates. For example, a large language model might use the word “citizens” to refer to people who live in San Jose, whereas the city uses only the word “residents” in its communications, since not everyone in the city is a citizen of the United States.

Civic technology firms such as Zencity have added generative AI tools for writing government press releases to their product lines, while tech giants and major consultancies, including Microsoft, Google, Deloitte, and Accenture, are pitching a range of generative AI products at the federal level.

Similar Posts

-

Mistral AI Revolutionary LLM: MoE8*7B Via Torrent Links

-

Stability AI has launched Stable Video Diffusion: A text-to-video Platform

-

PIKA: An Idea-to-Video Platform

-

Microsoft to Hire OpenAI Co-founder Sam Altman for New AI Research Team On 20th NOV

-

DeepMind’s Improved AlphaFold Model Helping in Drug Discovery

-

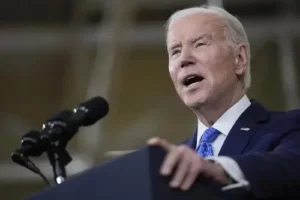

President Biden Takes a Risk by Signing an AI Executive Order